How do you decide what to wear when you head out for the day? Each of us has multiple options at our disposal, depending on what we see, hear, and feel while getting ready. If it looks like it’s about to rain, we probably pack our umbrellas. If the weather report predicts sunshine, we might take our sunglasses. We might even take both if we’re not sure. The information we have is insufficient to always be right, but our ability to combine different sources of information in seconds makes us mostly right most of the time.

Reservoir modeling is sometimes like this. We make the best decisions we can, but problems persist: a single model repeatedly fails once new data arrive. Decisions must be made before we finish the model, and the models end up being used as a post-mortem. While the challenges associated with modeling are complex and span multiple disciplines, a retrospective of the process is long overdue.

The Challenges

The process of creating a common framework through a model to combine different sources of data—from seismic to well logs and core samples to dynamic production data—is riddled with challenges and inefficiencies that become apparent even to the less-experienced eye.

For one, the entire process is typically centered around our domain-specific best technical guesses. This means that at each step in the modeling process, the domain experts must produce a most-likely scenario based on their analysis of their data before handing that data to the next domain expert to complete the next modeling step. The process of dissecting a large, multidisciplinary problem into smaller domain-specific problems is not specific to the oil and gas industry.

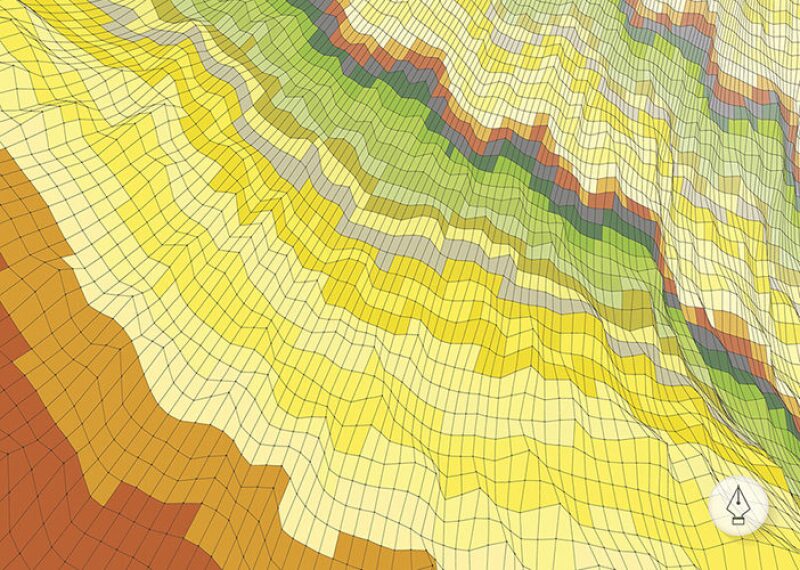

Typically, the geophysicist would first interpret seismic data to allow the geomodelers to generate a 3-D reservoir grid. The geologist would then determine potential geological concepts to incorporate with the petrophysicist’s well data interpretations in order to populate the grid with static properties. Finally, the baton would be passed to reservoir engineers tasked with delivering a history-matched model. In such a stepwise process, important information is sometimes lost, forgotten, or ignored at the individual stages.

Trying to tackle such tasks while stuck in a silo, without full consideration of all the steps necessary to forecast and design plans for developing the reservoir, will likely make us precisely wrong instead of approximately right.

In our search for answers as to how the reservoir looks and acts, there is merit to discovering and interpreting data. Still, the siloed and task-oriented approach is bound to miss the implicit dependencies that should exist among different modeling parameters. As an example, a challenge we often come across is how to pick the grid resolution in a model. It should, in theory, take into account the efficiency of creating and testing different hypotheses without sacrificing the ability to match general trends in the data, among other considerations. However, we often settle for a grid resolution that “feels right” to the geologists, and the effects of the grid on the dynamic response go unnoticed until the reservoir engineers take on the model. The defects in the model become apparent after the decision is made, and they’re redressed with more resources, extended deadlines, and shortcuts. As a result, the team members often lose trust in the model and in each other.

The lack of an integrated modeling workflow also causes missed opportunities and overlooked risks. For one, as discussed, we may focus too much on one aspect of the model without considering the ultimate goal of modeling. This is a challenge due to the tools available for modeling and the philosophy guiding our modeling culture. In terms of the tools we use, most are designed around specific domains to make better and higher-resolution “best guesses.” The stepwise siloed approach presented here is also a natural consequence of how we approach non-unique inverse problems.

At the end of the day we need to make a decision, and we will be the ones held responsible for that decision. We need to accept the fact that the models we create are imperfect, due in part to:

- The lack of dependencies captured between model parameters in different domains

- The fact that we cannot model everything accurately and cannot predict and account for all the events that will happen in the future

- The fact that we don’t capture known uncertainties

With the current tools, the integration of uncertainties in a stepwise approach becomes a seemingly insurmountable goal when a deadline nears. We rarely see uncertainty as anything more than an adjustment to the base case. Repeating the entire process while revisiting prior assumptions is limited by time, and it is unlikely to happen objectively because of individual biases formed from working with the data for a prolonged time.

Additionally, organizational changes may sap the team’s expertise, causing disruptions, delays, and potential missteps. An all-too-familiar example is spending too much time trying to learn why a certain change was propagated in previous iterations of the model, and not enough time integrating that learning into the new modeling effort. This dilemma further delays the modeling process and fosters demotivation in the subsurface teams (Sætrom, 2019).

Solution

With the tools currently available to us, reservoir modeling should be continuous, not a one-off effort. These tools should empower teams to robustly change and pivot the understanding of reservoirs when new data are introduced. A challenge with these tools is that they call for a paradigm shift in the way we approach the reservoir modeling problem.

However, for digitally native young professionals, this is a perfect opportunity to help the industry make this transition and leverage the power of fully integrated modeling workflows. This requires professionals to admit uncertainties and carry them forward to where they ultimately play a vital role in describing realistic risk estimates attached to a decision.

In the specific example of reservoir modeling, the brunt of the work is often spent creating and history-matching the models. Solving an inverse problem with a non-unique solution in high dimensions using sparse, indirect measurements is becoming more automated with the introduction of Bayesian learning solutions such as ensemble-based methods.

These methods enable highly skilled professionals to avoid spending months tweaking and finding the "perfect set of parameters" to match observed data, and empower them by giving them time and insight to put their skills to best use. In addition, ensemble-based methods are not restricted by dimensionality; they allow for continuous discovery-driven reservoir modeling and learning while fields are in discovery or production.
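To make the idea of an ensemble-based update concrete, here is a minimal sketch of a single ensemble-smoother step for one uncertain parameter. Everything in it is an illustrative assumption rather than a description of any particular tool: the `forward_model` function is a hypothetical stand-in for a reservoir simulator, and the prior, the observed rate, and the noise level are invented numbers.

```python
import random
import statistics


def forward_model(perm):
    """Hypothetical stand-in for a simulator: predicted rate from permeability."""
    return 2.0 * perm


def ensemble_smoother_update(prior, d_obs, obs_std, rng):
    """One ensemble-smoother update of a scalar parameter with perturbed observations."""
    predicted = [forward_model(m) for m in prior]
    m_mean = statistics.fmean(prior)
    d_mean = statistics.fmean(predicted)
    n = len(prior)
    # Sample covariance between parameter and prediction, and prediction variance.
    cov_md = sum((m - m_mean) * (d - d_mean) for m, d in zip(prior, predicted)) / (n - 1)
    var_d = sum((d - d_mean) ** 2 for d in predicted) / (n - 1)
    # Gain: how strongly a data mismatch shifts each ensemble member.
    gain = cov_md / (var_d + obs_std ** 2)
    return [
        m + gain * (d_obs + rng.gauss(0.0, obs_std) - d)
        for m, d in zip(prior, predicted)
    ]


rng = random.Random(42)
# Assumed prior belief: permeability around 100 with a wide spread.
prior = [rng.gauss(100.0, 30.0) for _ in range(200)]
# Assumed observation: a rate of 260, which the toy model maps to permeability 130.
posterior = ensemble_smoother_update(prior, d_obs=260.0, obs_std=10.0, rng=rng)

print("prior mean / spread:    ", statistics.fmean(prior), statistics.stdev(prior))
print("posterior mean / spread:", statistics.fmean(posterior), statistics.stdev(posterior))
```

The point of the sketch is that the whole ensemble is conditioned to the data at once: every member moves toward the observation and the spread narrows, but a range of plausible values survives instead of a single tuned case.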

The main value from this work, apart from the learning gained while conditioning the models, often lies in the forecasts made with the models. The forecast will likely have direct implications on reservoir development—on the lifetime of the field, the number of wells we drill, where we drill, and how we decide to operate. It is therefore important to build workflows that are robust and consistent enough to incorporate new data when they become available.

It is paramount to understand that solutions stem from more than technological improvements. The process itself calls for a revision. Nietzsche once said that “many are stubborn in pursuit of the path they have chosen, few in pursuit of the goal.” The traditional modeling workflows are built on years of experience, and it is far easier to follow the checklist set out at the outset of a project than to revise and question this checklist at each step. Most oil and gas organizations and their managerial structures are designed to accommodate this approach.

Likewise, a change from a deterministic mindset to a probabilistic one requires a fundamental change in the priorities of a modeling project. The team would no longer be concerned with the best technical guesses that the data interpretation provides, but instead with the range of possibilities, or the data’s possible minimum and maximum values, given the model. From experience, accounting for modeling error (such as a model’s coarseness or lack of parameters) is uncomfortable, as it likely requires users to think beyond the data and managers to accept uncertainty when they finally make a decision.

The process of changing from a case-centric decision-making process to an uncertainty-centric, or ensemble-based, approach should make life easier for the asset teams and decision makers. For example, the managers could estimate the likelihood of losing money in a project based on current assumptions. Therefore, they could make decisions based on preferred risk profiles and not on a single number incorrectly perceived as the expected or P50 value for that decision.
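As a rough illustration of the difference between a single number and a risk profile, the sketch below summarizes an ensemble of project outcomes. The net present values are invented for the example (think of them as millions of USD from eight model realizations), and the function names and the 30% risk tolerance are hypothetical choices, not an industry rule.

```python
import statistics


def probability_of_loss(npvs):
    """Fraction of ensemble members with a negative net present value."""
    return sum(1 for v in npvs if v < 0) / len(npvs)


def within_risk_tolerance(npvs, max_loss_probability):
    """True if the chance of losing money does not exceed the stated tolerance."""
    return probability_of_loss(npvs) <= max_loss_probability


# Hypothetical NPVs from eight equally likely model realizations.
ensemble_npv = [-40.0, -10.0, 5.0, 20.0, 35.0, 50.0, 80.0, 120.0]

mean_npv = statistics.fmean(ensemble_npv)      # 32.5
median_npv = statistics.median(ensemble_npv)   # 27.5
p_loss = probability_of_loss(ensemble_npv)     # 0.25

print(f"mean NPV: {mean_npv}, median NPV: {median_npv}, P(loss): {p_loss:.0%}")
print("acceptable at 30% tolerance:", within_risk_tolerance(ensemble_npv, 0.30))
```

A base case reporting only the mean or median would look comfortably positive here, while the ensemble shows that one realization in four loses money. Whether that is acceptable depends on the decision maker's risk profile, which is exactly the conversation a single number hides.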

The Way Ahead

We as young professionals need to take a step back, assess all of the tools available to us, and question how these could be used to extract value. In addition, we must question whether the tools we’ve been equipped with should be the industry standard going forward. Embracing this set of tools and a goal-focused mindset will better equip us to take on reservoir modeling as well as decisions surrounding our daily routine.

Reference

Sætrom, Jon. The Seven Wastes in Reservoir Modelling Projects (and How to Overcome Them). Resoptima, September 12, 2019.