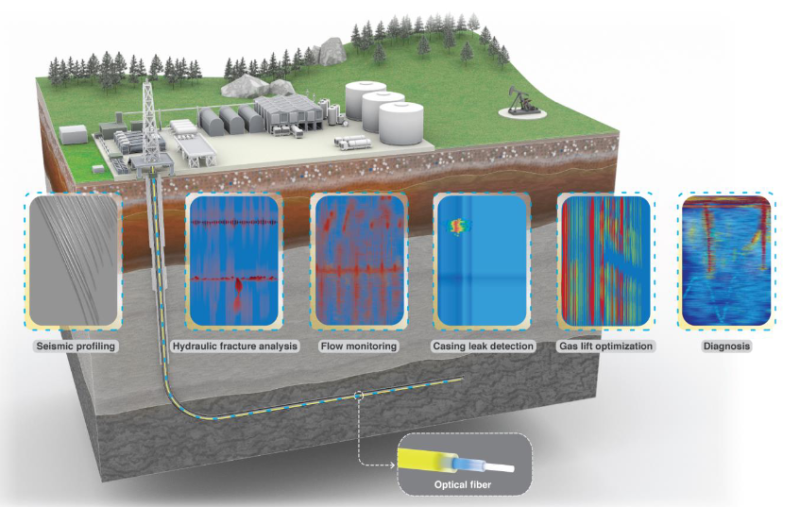

The use of fiber-optic distributed sensing, including distributed acoustic and temperature sensing, has become increasingly common in the oil and gas industry as research continues into new ways to apply the technology and improve its results.

Fiber optics shows great potential in applications such as production/injection flow allocation, integrity monitoring and leak detection, crossflow detection, seismic survey and analysis, hydraulic-fracturing surveillance, and diagnostics of pumps and valves.

However, the high volume and complexity of fiber-optics data present unique challenges for storage, processing, and analysis, and these challenges must be addressed if the full potential of the data is to be realized. Fortunately, advances in Fourth Industrial Revolution (4IR) technologies can help solve these issues.

This article explores the challenges associated with fiber-optics data analysis and how recent technology advances, such as cloud computing, signal processing/data compression, artificial intelligence (AI)/machine learning (ML), and containerized software deployment, can be leveraged to maximize the value of the data across a host of potentially important applications. We examine how these technologies can optimize data analysis processes and discuss their potential for providing improved insights into fiber-optics data.

Fiber-Optics Data on the Cloud

Fiber-optics data, specifically distributed acoustic sensing (DAS) data, poses management and analysis challenges because of its extended spatial coverage and dense sampling, and hence its high storage requirements.

Cloud computing offers several advantages for securely transmitting and analyzing large amounts of fiber-optics data. It provides flexible, scalable storage that can be accessed from anywhere at any time, along with data security and backup options that help protect the data. Signal processing, ML algorithms, and AI models running in the cloud allow efficient processing, analysis, and interpretation of the data, generating valuable insights for better decision-making in the targeted application. Cloud computing can also significantly reduce the costs of data storage and processing by reducing the need for expensive on-premises hardware, software, and IT personnel.

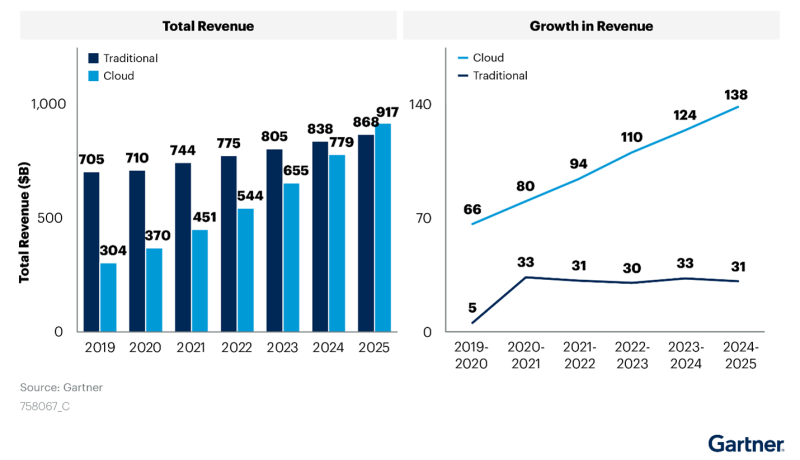

The oil and gas industry is gradually embracing fiber-optics data to improve data-driven decision-making, and cloud computing is an ideal option for the high-speed data transfer and storage that this requires.

Handling Big Data (Compression)

A typical DAS system may record samples from optical fibers tens of kilometers long with high spatial (e.g., 1 m) and temporal (e.g., 10 kHz) resolution. The acquisition generates a raw data set on the order of 100 Mbps and, in continuous monitoring applications, can last hours or days. Together, these factors translate to data volumes at terabyte scale or data-streaming rates in Gbit/s. Data of this size requires significant computing power, network bandwidth, and storage space to process, distribute, store, and back up. In addition, some DAS deployments are in remote fields, where limited computing resources and network connectivity pose further challenges.
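The arithmetic behind these volumes is easy to check. The sketch below is a back-of-the-envelope estimate under assumed parameters: a 10 km fiber, the 1 m channel spacing and 10 kHz sampling quoted above, and 2-byte (16-bit) samples. The function name `das_data_rate` is hypothetical, and real interrogators differ in sample width and file overhead.

```python
def das_data_rate(fiber_length_m: float, channel_spacing_m: float,
                  sampling_rate_hz: float, bytes_per_sample: int) -> dict:
    """Estimate raw DAS acquisition rate and daily volume (illustrative only)."""
    n_channels = int(fiber_length_m / channel_spacing_m)   # one channel per spacing
    samples_per_s = n_channels * sampling_rate_hz          # all channels sampled in parallel
    bytes_per_s = samples_per_s * bytes_per_sample
    return {
        "channels": n_channels,
        "samples_per_s": samples_per_s,
        "gbit_per_s": bytes_per_s * 8 / 1e9,                # bits per second, in Gbit/s
        "tb_per_day": bytes_per_s * 86_400 / 1e12,          # 86,400 seconds per day
    }

# Assumed setup: 10 km fiber, 1 m spacing, 10 kHz sampling, 16-bit samples.
rate = das_data_rate(10_000, 1.0, 10_000, 2)
print(f"{rate['channels']} channels, {rate['gbit_per_s']:.1f} Gbit/s, "
      f"{rate['tb_per_day']:.1f} TB/day")
```

Under these assumptions the fiber yields 10,000 channels and 10^8 samples per second, which works out to about 1.6 Gbit/s and roughly 17 TB per day of raw data, in line with the Gbit/s and terabyte-scale figures above.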

To address these storage and network-bandwidth requirements, a common practice is to compress the data before distribution, which reduces field visits and optimizes data transmission and storage.

Data compression methods involve two phases: compressing (encoding) and decompressing (decoding). During compression, the algorithm transforms input data into a smaller representation; decompression reverses this transformation to recover the original data or, for lossy methods, an approximation of it.
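As a minimal sketch of the two phases, assuming a toy, already-quantized trace, run-length encoding (RLE), a simple lossless method, compresses runs of repeated values into (value, count) pairs and then expands them back exactly. The function names are illustrative.

```python
from itertools import groupby

def rle_encode(data):
    """Compression phase: collapse each run of identical values into (value, count)."""
    return [(value, len(list(run))) for value, run in groupby(data)]

def rle_decode(pairs):
    """Decompression phase: expand each (value, count) pair back into its run."""
    out = []
    for value, count in pairs:
        out.extend([value] * count)
    return out

samples = [0, 0, 0, 0, 5, 5, 0, 0, 0, 7]   # hypothetical quantized samples
encoded = rle_encode(samples)
print(encoded)  # [(0, 4), (5, 2), (0, 3), (7, 1)]
assert rle_decode(encoded) == samples       # lossless: exact round trip
```

RLE only pays off when long runs exist, as in quiet, heavily quantized channels; on noisy raw samples it can even grow the data.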

Lossless compression recovers the original data exactly, without any loss of information, but achieving a high compression ratio is more difficult. Lossy compression recovers an approximation of the original data and generally achieves a better compression ratio, but potentially at the cost of a degraded signal-to-noise ratio (SNR).
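The lossy trade-off can be measured directly. The following minimal sketch quantizes a synthetic 50 Hz sine trace (an assumed stand-in for one DAS channel sampled at 10 kHz) at several bit depths and reports the SNR of the reconstruction relative to the original. The helpers `quantize`, `dequantize`, and `snr_db` are illustrative, not part of any DAS library.

```python
import math

def quantize(signal, n_bits, full_scale):
    """Lossy step: map each value to one of 2**n_bits uniform levels in [-full_scale, full_scale]."""
    levels = 2 ** n_bits
    step = 2 * full_scale / (levels - 1)
    clipped = (min(max(x, -full_scale), full_scale) for x in signal)
    codes = [round((x + full_scale) / step) for x in clipped]  # integers 0..levels-1
    return codes, step

def dequantize(codes, step, full_scale):
    """Map integer codes back to approximate amplitudes."""
    return [c * step - full_scale for c in codes]

def snr_db(reference, approx):
    """SNR of the reconstruction, treating the difference as noise."""
    signal_power = sum(x * x for x in reference)
    noise_power = sum((x - y) ** 2 for x, y in zip(reference, approx))
    return float("inf") if noise_power == 0 else 10 * math.log10(signal_power / noise_power)

# Synthetic trace: a 50 Hz tone sampled at 10 kHz (assumed test signal).
trace = [math.sin(2 * math.pi * 50 * n / 10_000) for n in range(2_000)]
for bits in (4, 8, 12):
    codes, step = quantize(trace, bits, full_scale=1.0)
    print(bits, "bits:", round(snr_db(trace, dequantize(codes, step, 1.0)), 1), "dB")
```

Each extra bit roughly halves the quantization step, so the SNR rises by about 6 dB per bit while the stored size grows linearly with bit depth; choosing the bit depth is where the compression ratio is traded against SNR.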

Compression methods can be combined for specific applications. ML-based compression methods have recently gained interest for their ability to build a representative model of the data and achieve higher compression ratios without a severe degree of loss or SNR degradation.
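As a hedged illustration of combining methods (a classical pipeline, not an ML-based one), the sketch below chains lossy 8-bit quantization, lossless delta encoding, and lossless DEFLATE compression through Python's standard `zlib` module; DEFLATE itself combines LZ77 matching with Huffman coding. The full-scale value, bit depth, and synthetic trace are assumptions for illustration only.

```python
import math
import zlib

FULL_SCALE = 1.0                 # assumed clipping level of the trace
STEP = 2 * FULL_SCALE / 255      # 8-bit quantization step

def encode(trace):
    # 1) Lossy: quantize each sample to an 8-bit code (0..255).
    codes = [round((min(max(x, -FULL_SCALE), FULL_SCALE) + FULL_SCALE) / STEP) for x in trace]
    # 2) Lossless: delta-encode (mod 256) so a smooth trace becomes mostly small values.
    deltas = bytes((c - p) % 256 for c, p in zip(codes, [0] + codes[:-1]))
    # 3) Lossless: DEFLATE via zlib at maximum compression level.
    return zlib.compress(deltas, 9)

def decode(blob):
    deltas = zlib.decompress(blob)
    codes, prev = [], 0
    for d in deltas:             # undo the delta step with a running sum mod 256
        prev = (prev + d) % 256
        codes.append(prev)
    return [c * STEP - FULL_SCALE for c in codes]

trace = [math.sin(2 * math.pi * 50 * n / 10_000) for n in range(10_000)]
blob = encode(trace)
raw_bytes = len(trace) * 4       # as if stored as 32-bit floats
recovered = decode(blob)
print(f"ratio vs float32: {raw_bytes / len(blob):.1f}x, "
      f"max error: {max(abs(a - b) for a, b in zip(trace, recovered)):.4f}")
```

The reconstruction error is bounded by half a quantization step (about 0.004 here). A clean periodic tone compresses far better than real DAS records, which carry broadband noise, so the printed ratio should not be read as representative of field data.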

5 Popular Compression Methods

1. Huffman algorithm: a lossless compression method that assigns variable-length, prefix-free codes to symbols based on their frequencies, so that more frequent symbols get shorter codes

2. Run-length encoding (RLE) algorithm: a lossless compression method that encodes each run of consecutively repeated values as a single value and a count

3. Lempel-Ziv-Welch (LZW) algorithm: a lossless compression method that replaces repeated sequences of symbols with codes from a dictionary built incrementally from the data

4. Quantization algorithm: a lossy compression method that maps input values to a smaller set of levels, giving a shorter representation with reduced resolution or dynamic range

5. Transform coding algorithms: a class of lossy compression methods (e.g., DCT [discrete cosine transform], wavelet, and JPEG) that transform the data into a suitable domain and then apply quantization
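To show how the first method in the list works, the sketch below builds a Huffman code with Python's `heapq` by repeatedly merging the two least frequent subtrees, then encodes and decodes a short sequence of hypothetical quantized values. Bits are kept as a string for readability; a real codec would pack them into bytes and store the code table alongside the data.

```python
import heapq
from collections import Counter

def huffman_code(data):
    """Build a prefix-free code in which frequent symbols get shorter bit strings."""
    freq = Counter(data)
    if len(freq) == 1:                       # degenerate case: one distinct symbol
        return {next(iter(freq)): "0"}
    # Heap entries: (frequency, unique tie-breaker, {symbol: code suffix so far}).
    heap = [(f, i, {sym: ""}) for i, (sym, f) in enumerate(freq.items())]
    heapq.heapify(heap)
    next_id = len(heap)
    while len(heap) > 1:
        f1, _, left = heapq.heappop(heap)    # two least frequent subtrees
        f2, _, right = heapq.heappop(heap)
        merged = {s: "0" + c for s, c in left.items()}
        merged.update({s: "1" + c for s, c in right.items()})
        heapq.heappush(heap, (f1 + f2, next_id, merged))
        next_id += 1
    return heap[0][2]

def huffman_encode(data, code):
    return "".join(code[s] for s in data)

def huffman_decode(bits, code):
    inverse = {c: s for s, c in code.items()}
    out, buf = [], ""
    for b in bits:                           # prefix-free, so the first match is correct
        buf += b
        if buf in inverse:
            out.append(inverse[buf])
            buf = ""
    return out

symbols = [0, 0, 0, 0, 0, 0, 1, 1, -1, 2]    # hypothetical quantized samples
code = huffman_code(symbols)
bits = huffman_encode(symbols, code)
print(code, len(bits), "bits")
assert huffman_decode(bits, code) == symbols
```

For this sequence the most frequent value (0) receives a 1-bit code and the whole sequence needs 16 bits, compared with 20 bits for a fixed 2-bit code covering the four distinct values.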

Containerization

What is containerization? Why is it relevant?

Containerization is a popular approach to software development that packages an application and its dependencies into reusable, isolated units called containers. It has become increasingly important for large-scale and process-heavy solutions, with benefits that include continuous deployment and scaling, risk mitigation, and standardization. Research by Forrester Consulting reported that containerization helped teams deploy software 2.4 times more frequently and 10 times faster. Containerization can streamline the packaging, deployment, and maintenance of software, making it easier and more efficient to release new versions. It can also address the scalability issues that come with increased traffic or usage by allowing software to run on different servers without interference.

Another crucial benefit of containerization is risk mitigation. Containers run in isolated environments that keep runtime environments separate from one another, reducing the risk of conflicts or failures. When a failure does occur, the affected container can be quickly shut down and the system can automatically spin up a new one.

Standardization is also a significant advantage of containerization. By creating a standard development environment, containerization helps ensure that all applications are built and deployed in the same way, regardless of where or by whom. This reduces the time development teams spend on environment setup and helps ensure that applications behave as expected in production.

Cloud native technologies, including containers, service meshes, microservices, immutable infrastructure, and declarative APIs (application programming interfaces), enable loosely coupled systems that are resilient, manageable, and observable. These technologies, combined with robust automation, allow engineers to make high-impact changes frequently and predictably with minimal toil.

However, just 30% of the respondents' organizations have adopted cloud native approaches across nearly all development and deployment activities, according to the CNCF and Linux Foundation Research survey. This indicates a significant opportunity for organizations to leverage the power of cloud native technologies such as containerization to improve their software development processes. With containers now mainstream, the uptake of serverless architecture in 2022 is setting the stage for new advancements in software development.

Containerization continues to mature, with advances in areas such as security, orchestration, and tooling that make it viable for even the most-complex environments. Overall, it has become a critical technology for faster, more-efficient software delivery, and its benefits in deployment and scaling, risk reduction, and standardization make it well suited to process-heavy solutions.

AI/ML Applications

DAS acquisition generates large data volumes with varying SNR, which are challenging to process with traditional approaches. To address this, signal processing, AI, and ML techniques supported by cloud-based or on-premises computing can be used to achieve more-efficient processing and analysis and more-accurate prediction and interpretation.

AI/ML techniques have advanced rapidly and are widely used across industries to improve processing efficiency and accuracy and to learn hidden patterns within big data. Deep learning, a popular technique, can effectively model nonlinear relationships between input features and output attributes with less reliance on domain knowledge or hand-crafted features. However, AI/ML workflows require large amounts of high-quality data and massive computing and storage resources.

Cloud computing provides a viable solution by allowing very large amounts of data to be fed to AI/ML models while enabling easy scaling of computing resources. This makes cloud-based DAS systems a good fit for AI/ML workflows.

Pioneers in both academia and industry have identified a broad spectrum of AI/ML use cases for DAS in the oil and gas industry, spanning exploration, drilling, and production.

- Convert DAS to geophone data for monitoring CO2 sequestration. Monitoring CO2 sequestration is critical in carbon capture and storage. DAS acquisition can provide better spatial coverage with finer sampling than conventional geophone systems, and ML-based imaging from DAS data can make DAS a viable seismic acquisition tool for CO2 monitoring.

- Automated fracture location with DAS. Hydraulic fracturing is an important process in drilling and production for extracting oil or gas from deep reservoirs. With its long coverage, a DAS system can monitor the entire well with high spatial resolution. Deep-learning (DL)-based approaches have been explored for locating fracture hits to improve detection accuracy.

- Pipeline intrusion/corrosion detection and protection. Pipeline networks are critical for transporting and distributing hydrocarbon fuels, so it is important to detect intrusion and corrosion and protect the pipelines against them. DAS offers high-resolution, continuous monitoring, and integrating ML algorithms can improve safety and enable preventive maintenance of the pipeline infrastructure.

- DAS for multiphase flow metering. Accurate flow-rate estimation for oil/water/gas multiphase flow is critical for optimizing production performance and enhancing oil recovery. With their rich spectrum and long coverage, DAS systems provide an effective platform for continuously monitoring attributes of the production flow such as temperature and pressure. ML-based virtual flow-metering technology can then model and predict multiphase flow rates accurately and robustly.
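Several of these use cases start from the same step: turning a block of raw DAS samples into per-channel attributes and deciding which channels look anomalous. The sketch below is a simple energy-threshold baseline, not a machine-learning model and not the DL methods cited above: it computes the RMS amplitude of each channel over one time window and flags channels above a robust median + k × MAD threshold. The synthetic noise, the injected event on channels 120-122, and the value k = 8 are all assumptions for illustration; learned models aim to do better than hand-crafted rules of this kind.

```python
import math
import random
import statistics

def channel_rms(block):
    """RMS amplitude of each channel over one time window; block[channel][sample]."""
    return [math.sqrt(sum(x * x for x in ch) / len(ch)) for ch in block]

def flag_channels(rms, k=8.0):
    """Flag channels whose RMS exceeds median + k * MAD (a robust outlier rule)."""
    med = statistics.median(rms)
    mad = statistics.median(abs(r - med) for r in rms)
    threshold = med + k * mad
    return [i for i, r in enumerate(rms) if r > threshold]

# Synthetic example: 200 channels x 1,000 samples of Gaussian noise,
# with a hypothetical 200 Hz event (10 kHz sampling) injected on channels 120-122.
rng = random.Random(0)
block = [[rng.gauss(0.0, 1.0) for _ in range(1_000)] for _ in range(200)]
for ch in (120, 121, 122):
    block[ch] = [x + 3.0 * math.sin(2 * math.pi * 200 * n / 10_000)
                 for n, x in enumerate(block[ch])]

print(flag_channels(channel_rms(block)))
```

Using the median and MAD rather than the mean and standard deviation keeps the threshold from being dragged upward by the very channels it is meant to detect.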

What’s Next?

In conclusion, the combination of fiber-optics data and 4IR technologies—such as cloud computing, AI, ML, and containerization—presents a powerful solution for unlocking the full potential of well-sensing applications. By leveraging these technologies, the oil and gas industry can efficiently store, analyze, and process large volumes of fiber-optics data, generating valuable insights for better decision-making.

Cloud computing offers a cost-effective, scalable solution for securely transmitting and analyzing fiber-optics data from anywhere, at any time, while also providing data security and backup options. AI and ML algorithms can efficiently process fiber-optics data, providing insights that can enhance the efficiency, accuracy, and safety of drilling and production operations. Containerization enables faster, more-efficient software delivery, making it well suited to process-heavy solutions. Combined, these technologies give fiber-optic distributed sensing significant potential to provide novel sensing and technology solutions for many applications and to transform the way the oil and gas industry operates.

FOR FURTHER READING

CNCF Annual Survey 2022 by Cloud Native Computing Foundation.

The Total Economic Impact™ of Red Hat OpenShift Cloud Services by Forrester Consulting (2022).

Gartner: Accelerated Move to Public Cloud to Overtake Traditional IT Spending in 2025 by A. Weissberger, IEEE Communication Society Technology Blog.

Li, W., Lu, H., Jin, Y., and Hveding, F. 2021. Deep Learning for Quantitative Hydraulic Fracture Profiling from Fiber Optic Measurements. SPE/AAPG/SEG Unconventional Resources Technology Conference, Houston, Texas, USA, July 2021.

Ma, Y. and Li, W. 2022. Comparing DAS and Geophone Data and Imaging for Monitoring CO2 Sequestration. Second International Meeting for Applied Geoscience & Energy.

Alzamil, N., Li, W., Zhou, H., and Merry, H. 2022. Frequency-Dependent Signal-to-Noise Ratio Effect of Distributed Acoustic Sensing Vertical Seismic Profile Acquisition. Geophysical Prospecting 70 (2): 377-387.

Merry, H., Li, W., Deffenbaugh, M., and Bakulin, A. 2020. Optimizing Distributed Acoustic Sensing (DAS) Acquisition: Test Well Design and Automated Data Analysis. SEG extended abstract.