This article presents a workflow of field development plans (FDPs) tailored for petroleum undergraduate students and early career professionals. Part 1 of this series focuses on the disciplines of geology and geophysics (G&G), petrophysics, and reservoir engineering. The article reflects real-world field examples from Malaysia and the author's experiences in training undergraduate students in Malaysian universities.

Introduction

FDP refers to the process of assessing an oil or gas field's development based on technical feasibility and economic viability while considering safety. This work takes place after the exploration stage, where seismic data is acquired and exploration wells are drilled to obtain adequate data on the field. FDP is a collaborative process involving experts from various disciplines who come together to analyze data, share insights, and strategize the most efficient methods for hydrocarbon extraction.

In the real world, as opposed to an undergraduate course, FDP typically spans 5 to 10 years after exploration, depending on the complexity of the field, before an oil field transitions to the production stage. As a course, FDP is necessary because it is an amalgamation of all the courses under petroleum engineering. For a graduate who is about to embark on a career, a solid foundation in the application of petroleum engineering will be helpful.

Geology and Geophysics

Although G&G has a more significant role in the exploration stage, the importance of the discipline continues until the drilling stage, where a wellsite geologist will be stationed on the drilling rig to ensure that the target depth has been reached. The commencement of FDP is always with G&G, where important elements such as the petroleum system, depositional environment, and regional geology are laid out.

Another approach to petroleum system analysis is a literature study, which is more common than relying on petrophysical analysis. The literature study is conducted by referring to the geological report of the basin containing the reservoir, which reveals much about the basin formation, age of the reservoir, tectonic activities, and depositional environment.

Apart from theoretical analysis, the next crucial segment is static modeling. The two approaches to static modeling are zone formation based on well-to-well correlation (if no contour map exists) and digitization of the contour map. During the construction of the map in the preprocessor, fault zonation will also take place.

The creation of a fault line will result in segmentation of the model into two horsts and grabens. The most important element needed during fault zonation is the transmissibility multiplier, which will determine whether the faults are sealing or leaking.

Thereafter, the model is discretized in the x- and y-directions and then layered. The gridding in the x- and y-directions is rather circumstantial, depending on how significantly the properties vary laterally. Under static modeling, however, the geomodeler usually tries to make the model as accurate as possible, thus creating a larger number of gridblocks.

Petrophysics

The next discipline after G&G is petrophysics, which is essentially understanding and populating the well logging data in the model. Petrophysics is important for in-place determination because it estimates key parameters such as net to gross (NTG), total porosity, effective porosity, and water saturation.
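To illustrate how these petrophysical parameters feed the in-place determination, the sketch below uses the standard volumetric equation for stock-tank oil initially in place (STOIIP) in oilfield units. The function name and the input values are hypothetical and chosen only for illustration; the article does not prescribe a specific formula.

```python
def stoiip_stb(area_acres, gross_thickness_ft, ntg, porosity, sw, bo):
    """Volumetric stock-tank oil initially in place, in STB.

    area_acres         : reservoir area, acres
    gross_thickness_ft : gross reservoir thickness, ft
    ntg                : net to gross, fraction
    porosity           : effective porosity, fraction
    sw                 : water saturation, fraction
    bo                 : oil formation volume factor, rb/STB
    """
    BBL_PER_ACRE_FT = 7758.0  # barrels contained in one acre-foot
    pore_volume_bbl = BBL_PER_ACRE_FT * area_acres * gross_thickness_ft * ntg * porosity
    return pore_volume_bbl * (1.0 - sw) / bo


# Hypothetical inputs, not taken from any field in the article.
example_stoiip = stoiip_stb(
    area_acres=1000, gross_thickness_ft=50, ntg=0.8, porosity=0.25, sw=0.3, bo=1.2
)
print(f"STOIIP = {example_stoiip / 1e6:.2f} MMSTB")
```

Each petrophysical parameter enters as a simple multiplier, so a relative error in NTG, porosity, or hydrocarbon saturation carries through proportionally to the in-place estimate.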

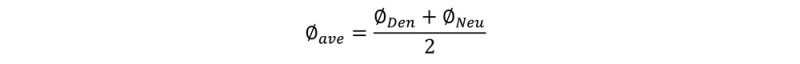

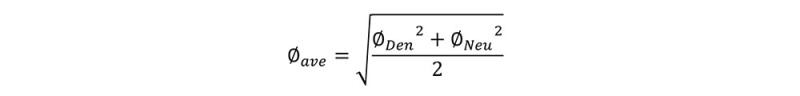

NTG can be computed by a simple deduction based on the volume of shale or by a more complex method that applies a hydrocarbon column cutoff with the elbow method. Total porosity is typically estimated by averaging the readings from three logs: sonic, neutron, and density. The averaging of neutron and density porosity also depends on the state of matter of the hydrocarbon, that is, liquid or vapor (oil or gas). The equations are shown in Eqs. 1 and 2.
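The dependence of the neutron-density averaging on fluid type can be sketched as follows. The two forms used here, an arithmetic mean for liquid and a root-mean-square average for gas, are the commonly quoted quick-look relations and are assumed to correspond to the article's Eqs. 1 and 2; the function name is hypothetical.

```python
import math


def total_porosity_nd(phi_n, phi_d, fluid="oil"):
    """Quick-look neutron-density porosity (fractions in, fraction out).

    Assumed forms (not confirmed against the article's equations):
      oil (liquid): arithmetic mean of neutron and density porosity
      gas (vapor) : root-mean-square average, which offsets the gas effect
    """
    if fluid == "oil":
        return (phi_n + phi_d) / 2.0
    if fluid == "gas":
        return math.sqrt((phi_n ** 2 + phi_d ** 2) / 2.0)
    raise ValueError("fluid must be 'oil' or 'gas'")


# Hypothetical log readings in a gas-bearing interval.
phi_oil_case = total_porosity_nd(0.20, 0.30, fluid="oil")
phi_gas_case = total_porosity_nd(0.20, 0.30, fluid="gas")
print(round(phi_oil_case, 4), round(phi_gas_case, 4))
```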

Sonic plays a more significant role in the identification of formation strength. Total porosity is insufficient, analogous to having money in the bank that you cannot withdraw; thus, effective porosity must be computed by eliminating the volume of shale and calibrated with core data.
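A minimal sketch of the shale correction is given below. It assumes a linear gamma ray index for the volume of shale and the simple relation phi_e = phi_t (1 - Vsh); both are common quick-look choices rather than the article's stated method, and the function names are hypothetical. Calibration against core data would follow as a separate step.

```python
def vsh_linear_gr(gr, gr_clean, gr_shale):
    """Volume of shale from the linear gamma ray index, clipped to [0, 1]."""
    index = (gr - gr_clean) / (gr_shale - gr_clean)
    return min(max(index, 0.0), 1.0)


def effective_porosity(phi_total, vsh):
    """Effective porosity by removing the shale fraction (assumed simple form)."""
    return phi_total * (1.0 - vsh)


# Hypothetical readings: GR in API units, porosity as a fraction.
vsh = vsh_linear_gr(gr=75.0, gr_clean=25.0, gr_shale=125.0)
phi_e = effective_porosity(phi_total=0.30, vsh=vsh)
print(vsh, phi_e)
```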

Water saturation is computed based on the deep laterolog (if no oil-based mud is used during drilling). In the case of clastic sedimentary rock, shaly sand is common, thus requiring a shaly-sand correlation such as the Simandoux equation to compute the water saturation (Sw). A prudent practice is to compute Sw using Archie’s law as well to establish a base case. This analysis is more of a quick-look method, without in-depth quality check work such as borehole or other environmental corrections.
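The base case and the shaly-sand case can be compared with a short sketch. Archie's law is written in its usual form, and the Simandoux equation is solved as a quadratic under the assumption of a saturation exponent n = 2. The default parameters (a = 1, m = 2) and the input values are illustrative assumptions, not values from the article.

```python
import math


def sw_archie(rw, rt, phi, a=1.0, m=2.0, n=2.0):
    """Archie water saturation: Sw = (a * Rw / (phi^m * Rt))^(1/n)."""
    sw = (a * rw / (phi ** m * rt)) ** (1.0 / n)
    return min(sw, 1.0)


def sw_simandoux(rw, rt, phi, vsh, rsh, a=1.0, m=2.0):
    """Simandoux water saturation for n = 2.

    Solves 1/Rt = (phi^m / (a * Rw)) * Sw^2 + (Vsh / Rsh) * Sw for Sw.
    """
    c = phi ** m / (a * rw)   # clean-sand conductivity term
    b = vsh / rsh             # shale conductivity term
    sw = (math.sqrt(b ** 2 + 4.0 * c / rt) - b) / (2.0 * c)
    return min(sw, 1.0)


# Hypothetical inputs: resistivities in ohm-m, porosity and Vsh as fractions.
sw_base = sw_archie(rw=0.05, rt=20.0, phi=0.20)
sw_shaly = sw_simandoux(rw=0.05, rt=20.0, phi=0.20, vsh=0.2, rsh=4.0)
print(round(sw_base, 3), round(sw_shaly, 3))
```

With Vsh set to zero, the Simandoux expression reduces to Archie's law, which is why the Archie result serves as a useful base case.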

Reservoir Engineering

The next discipline is reservoir engineering, which, in the authors’ view, is the lead of the entire FDP project. This section covers mostly dynamic modeling.

Dynamic modeling is also known as reservoir simulation. In FDP, reservoir simulation is an important step that determines much of the technical and economic feasibility. The first step in reservoir simulation is gridding. Gridding is already captured under the G&G section, but, to proceed further with the dynamic modeling, the gridding done earlier by the geomodeler must undergo upscaling to optimize accuracy and running time.

Before upscaling, rock properties must be distributed. Rock properties are mainly obtained from routine core analysis (RCA) data. For the RCA data, the primary task is to establish the porosity/permeability relationship. The first process is to compute the rock quality index and, subsequently, the flow zone indicator, from which the number of rock types can be obtained.
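The index calculations can be sketched as below, using the widely used definitions RQI = 0.0314 sqrt(k/phi), with permeability in millidarcies and porosity as a fraction, and FZI = RQI / phi_z, where phi_z = phi / (1 - phi). The core values are hypothetical, and grouping samples by similar FZI is shown only as the underlying idea, not as the article's exact procedure.

```python
import math


def rock_quality_index(k_md, phi):
    """RQI in micrometres, from permeability (mD) and porosity (fraction)."""
    return 0.0314 * math.sqrt(k_md / phi)


def flow_zone_indicator(k_md, phi):
    """FZI = RQI / phi_z, with normalized porosity phi_z = phi / (1 - phi)."""
    phi_z = phi / (1.0 - phi)
    return rock_quality_index(k_md, phi) / phi_z


# Hypothetical RCA plugs: (permeability in mD, porosity as a fraction).
core_samples = [(100.0, 0.20), (400.0, 0.25), (5.0, 0.12)]
fzi_values = [flow_zone_indicator(k, phi) for k, phi in core_samples]
for (k, phi), fzi in zip(core_samples, fzi_values):
    print(f"k={k:7.1f} mD  phi={phi:.2f}  FZI={fzi:.3f}")
```

Samples with similar FZI values plot along the same trend on a log-log plot of RQI against normalized porosity, and the number of distinct trends indicates the number of rock types.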

Cores under the same classification are grouped based on rock type, and an equation of porosity/permeability is obtained for each classification, concluding the rock typing. This rock typing is pertinent in permeability computation using effective porosity derived from the wireline log data, which will be distributed in a reservoir model via log upscaling.

After the RCA data comes the special core analysis laboratory (SCAL) data, which consists of relative permeability and capillary pressure. For more than one rock type, normalization, averaging, and denormalization must take place, particularly for capillary pressure (Pc), which requires transformation using the Leverett-J function. Reservoir simulation requires SCAL data to define part of the multiphase mobility in a reservoir.
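The capillary pressure transformation can be sketched with the Leverett J-function in oilfield units, J = 0.21645 (Pc / (sigma cos(theta))) sqrt(k/phi), together with its inverse for denormalizing back to a given rock type. The function names, fluid properties, and rock properties below are assumptions made for illustration.

```python
import math

UNIT_CONSTANT = 0.21645  # for Pc in psi, sigma in dyne/cm, k in mD


def leverett_j(pc_psi, sigma_dyne_cm, theta_deg, k_md, phi):
    """Dimensionless J value from a laboratory capillary pressure point."""
    wetting = sigma_dyne_cm * math.cos(math.radians(theta_deg))
    return UNIT_CONSTANT * pc_psi / wetting * math.sqrt(k_md / phi)


def pc_from_j(j_value, sigma_dyne_cm, theta_deg, k_md, phi):
    """Denormalize: capillary pressure (psi) for a rock type's k and phi."""
    wetting = sigma_dyne_cm * math.cos(math.radians(theta_deg))
    return j_value * wetting / (UNIT_CONSTANT * math.sqrt(k_md / phi))


# Hypothetical core plug measurement converted to J ...
j_value = leverett_j(pc_psi=10.0, sigma_dyne_cm=30.0, theta_deg=0.0, k_md=100.0, phi=0.25)
# ... then denormalized for a tighter, hypothetical rock type.
pc_tight = pc_from_j(j_value, sigma_dyne_cm=30.0, theta_deg=0.0, k_md=10.0, phi=0.15)
print(round(j_value, 3), round(pc_tight, 2))
```

Because J removes the dependence on permeability and porosity, curves from several plugs can be averaged in J space before being converted back to capillary pressure for each rock type.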

After SCAL, the next input to the simulation is pressure, volume, and temperature (PVT) data. PVT analysis is dependent on the type of reservoir fluid. For black oil, wet gas, and dry gas, the black oil model will be selected. For volatile oils and gas condensates, the compositional model will be selected. Selection of the reservoir model type has a significant effect on PVT analysis; the black oil model uses laboratory data, and the compositional model uses an equation of state.

The last part of the reservoir simulation step is initialization, in which the neutron-density (butterfly/football) effect, the resistivity log, and pressure vs. depth data are used to define the initial pressure in the reservoir. Some peripheral tasks in the simulator include aquifer modeling, where the underlying aquifer is modeled using equations such as Schilthuis, Van Everdingen-Hurst, or Fetkovich. The aquifer model is chosen based on the strength of the aquifer and the transmissibility between the aquifer and the overlying reservoir.
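One common use of pressure vs. depth data during initialization is to fit separate pressure gradients through the oil and water zones and take their intersection as the free-water level. The sketch below assumes that approach, which the article does not spell out, and uses hypothetical pressure points; the helper names are also hypothetical.

```python
def fit_line(depths, pressures):
    """Least-squares fit of pressure = slope * depth + intercept."""
    n = len(depths)
    mean_d = sum(depths) / n
    mean_p = sum(pressures) / n
    sxy = sum((d - mean_d) * (p - mean_p) for d, p in zip(depths, pressures))
    sxx = sum((d - mean_d) ** 2 for d in depths)
    slope = sxy / sxx
    return slope, mean_p - slope * mean_d


def gradient_intersection(oil_fit, water_fit):
    """Depth and pressure at which the oil and water gradient lines cross."""
    (m_o, b_o), (m_w, b_w) = oil_fit, water_fit
    depth = (b_w - b_o) / (m_o - m_w)
    return depth, m_o * depth + b_o


# Hypothetical pressure points: depth in ft, pressure in psi.
oil_fit = fit_line([7800.0, 7900.0, 7950.0], [3530.0, 3565.0, 3582.5])
water_fit = fit_line([8100.0, 8200.0, 8300.0], [3645.0, 3690.0, 3735.0])
fwl_depth, fwl_pressure = gradient_intersection(oil_fit, water_fit)
print(f"oil gradient   = {oil_fit[0]:.3f} psi/ft")
print(f"water gradient = {water_fit[0]:.3f} psi/ft")
print(f"free-water level ~ {fwl_depth:.0f} ft at {fwl_pressure:.0f} psi")
```

The fitted gradients also act as a check on fluid type, since an oil gradient should be lower than the water gradient.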

The choice of aquifer model is further validated in the brownfield stage through history matching, in which the simulation model is adjusted until it reproduces the field's observed production and pressure data. History matching begins with a sensitivity analysis and is followed by an uncertainty analysis, where the weight of modification is assigned to the most sensitive parameters.