The oil and gas industry is in the midst of a pervasive digital transformation that is changing the way it manages assets, interacts with customers, and develops internal workflows. Perhaps one of the most significant impacts of this transformation, however, is on the way companies characterize their subsurface data. Data volumes are growing at an exponential rate, and to handle them, operators are looking to high-performance computing (HPC) solutions.

“The oil and gas supply today is still delivered through very large engineering projects,” said Detlef Hohl, chief scientist of computation and data science at Shell. “You have things like the deepwater Gulf of Mexico, things like our Prelude FLNG. These are huge projects. These projects are designed to be operated and maintained with the help of high-performance computing.”

Speaking at the Rice Oil and Gas HPC Conference, Hohl described seismic data as the industry's dominant challenge and opportunity. Moving data is a substantial challenge at every level of an operation, requiring multiple entities, a significant investment of time, and a large amount of computing power.

Hohl said this computing power, along with adaptive algorithms and more data, will drive future developments in machine learning and artificial intelligence. HPC is well suited to the industry's needs: falling costs for processing power and bandwidth, driven by larger processors, may help operators manage rapidly increasing data volumes and rates in a timely, cost-effective manner.

Chevron and the Cloud

As cloud computing has matured in recent years, the HPC community has looked into the capabilities of various cloud architectures and whether they can produce additional value in data management. Hohl said this is an ongoing process.

“High-performance computing as a service is emerging, but it requires some work,” Hohl said. “We need to adapt our software and our ways of working to work in the commercial cloud. The commercial cloud providers need to work on adapting to our applications.”

Also speaking at the Rice HPC conference, Ling Zhuo shared Chevron's experience migrating a highly parallelized seismic imaging application to the external public cloud.

“In the past several years, there has been a greater cloud presence in the industry, especially in the enterprise IT domain, and so our question is whether the cloud is mature enough for HPC applications. Can we move an existing HPC seismic application to the cloud? That’s the main project for us,” Zhuo said.

Chevron's goals for the migration project were to speed up its data processing without significantly disrupting user workflows, and to spend as little time as possible moving the data. During the first phase of project design, Chevron engineers had to make several infrastructure choices, such as how to build a virtual network in the cloud and which high-performance data storage method to use.

Once the application was running successfully in the cloud, Zhuo said, the team needed to revisit these design decisions for two reasons. First, the performance of the central processing units (CPUs), network, and storage in the cloud differed from that of the on-premises clusters. Second, the cloud made large amounts of computing resources available.

Zhuo also said companies need to know their applications well if they are to run HPC workloads in the cloud. Before making design decisions, they should understand an application's input/output (IO) requirements and the computing resources it will need. Even so, the performance gain after migration may be limited, because the application was written for a different HPC environment.

“If we move up to the cloud, we have to rethink the architecture of the application and rethink some of our assumptions,” Zhuo said.

Seismic Imaging and Digital Rock

Shell supports 45 HPC applications globally, doing a large amount of the processing and production workload in-house. Hohl said 75% of its HPC work is in seismic imaging, with the remaining 25% spread out between digital rock modeling, reservoir modeling, and chemistry and analysis. The company’s primary HPC infrastructure is based on Intel processors, notably the Xeon 5600 series.

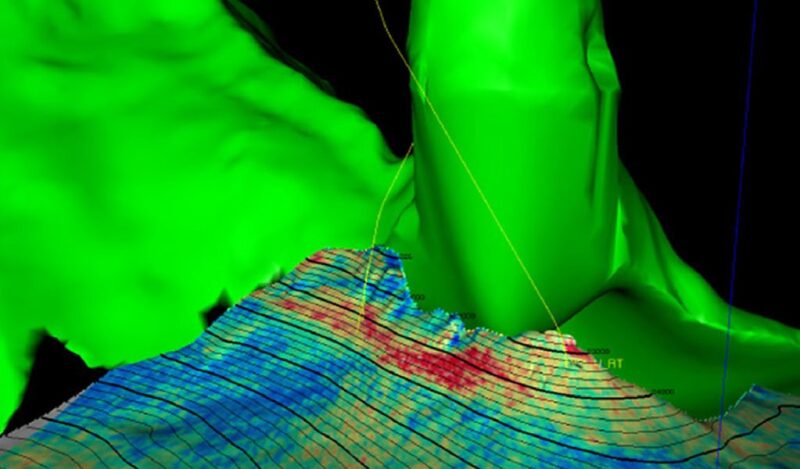

For seismic imaging, Shell uses large-memory, high-end compute nodes with large-scale IO capabilities. It was one of the first companies to develop its own interpretation and visualization system. Since its development nearly a decade ago, the company's GeoSigns subsurface interpretation workspace has helped it pursue more complex projects in difficult environments. Hohl said that while Shell's platform in this area has evolved over the past few decades, the hardware it runs on has been fairly consistent since the turn of the 21st century. He said this may change in the near future, though.

“We've had an exceptional period of stability architecture-wise with commodity Linux clusters, mostly based on Intel architecture,” he said. “I believe this period of stability seems to be coming to an end. We now have more choices for HPC architectures than we've had in the last few decades, and this is an exciting development.”

Digital rock analysis can be valuable for measuring relative permeability and end-point saturation more quickly than laboratory experiments allow. Models generate 3D digital images from small 2D rock images obtained by micro-CT (computed tomography) scans. Hohl said digital rock has a different driver from seismic imaging. While seismic imaging is driven by data quantity, he called digital rock “a quest to replace time-consuming and expensive experiments with computer simulations.” Even so, the simulations generated by digital rock modeling can be just as computationally demanding. HPC, Hohl said, will play an important part in advancing these modeling systems.

The Rice Oil and Gas HPC Conference was hosted by the Ken Kennedy Institute for Information Technology at Rice University.