Selling new offshore seismic survey methods can be reduced to two goals: “We want more of everything at less cost.”

That maxim was offered by Andrew Long, chief scientist of geoscience and engineering, imaging, and engineering for Petroleum Geo-Services, one of the players in this intensely competitive, economically depressed, technology-driven business.

While the science behind using sound waves to image underground formations is baffling to outsiders, the two avenues for doing it better are not. There are improvements in data acquisition covering sound sources, receivers, and survey methods. And there is the mathematics and computer power needed to process and extract more information from the constantly growing amount of data gathered.

“Today, what is driving things is progress in acquisition,” said Christopher Liner, president of the Society of Exploration Geophysicists (SEG). The University of Arkansas geophysics professor said that is significant because “the really big advances in geophysics are always with acquisition.”

That upbeat assessment of the future of seismic was offered late last year as oil prices were on their way to USD 50/bbl. Prices have remained low, leading to cuts in exploration budgets so deep that even steep discounts are attracting little work.

“The market is really depressed right now,” said David Monk, worldwide director of geophysics for Apache Corp. Seismic service companies are trying to limit the number of boats idled and workers laid off by offering services at discounts of 30% to 50%. Bargain rates are drawing little interest because “money is short on the demand side as well,” he said.

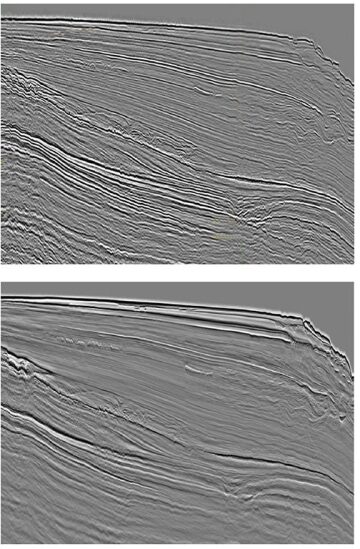

New data acquisition methods, though, are coming into use because they promise better imaging and, in some cases, significantly reduce the cost of doing the work. And as the average offshore discovery gets smaller, better-quality imaging has become paramount.

“As you go to maturing fields and new opportunities out there, some of those reservoirs are quite complex and quite thin compared to what we could typically see in seismic,” said John Etgen, a distinguished advisor for seismic imaging for BP. “We need to improve our game in getting better resolution in seismic.”

Better Ways

Three offshore data acquisition trends were highlighted by Liner at the 2014 International Petroleum Technology Conference in Kuala Lumpur:

- Wide-azimuth surveys, which opened up exploration and development beneath thick salt layers by examining reservoirs from a variety of directions and angles, are evolving to address different challenges.

- Simultaneous sound sources are used to gather more data faster by continuously recording the output of multiple sources that fire steadily, with no effort made to avoid overlapping shots. The overlapping signals are then separated in processing, as if each shot had been recorded on its own.

- Multisensor streamers are used to detect the direction of incoming seismic signals. Better sorting of signals makes it possible to gather more of the data needed to create clearer images, and it could offer new opportunities, such as filling in the gaps between widely spaced streamers.
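The idea behind simultaneous sources can be illustrated with a toy sketch. It is not any contractor's actual method: the function names, the two-sample "wavelets," and the irregular firing times of source B are all assumptions made for illustration. Two sources fire into one continuous record, and windows are then cut at source A's known firing times. Source A's signal lines up from window to window, while source B's energy lands at a different position each time, which is the property real separation algorithms exploit.

```python
def blend(shots, record_len):
    """Sum every shot into one continuous trace at its firing sample index."""
    trace = [0.0] * record_len
    for fire_idx, wavelet in shots:
        for k, amp in enumerate(wavelet):
            if fire_idx + k < record_len:
                trace[fire_idx + k] += amp
    return trace


def cut_windows(trace, fire_times, window):
    """Cut a fixed-length window from the trace at each firing time."""
    return [trace[t:t + window] for t in fire_times]


# Hypothetical toy inputs: amplitudes and firing times are made up.
wavelet_a = [1.0, 0.5]   # response to source A
wavelet_b = [2.0]        # response to source B
fire_a = [0, 10]         # source A fires on a regular schedule
fire_b = [1, 14]         # source B fires at irregular (assumed) times

shots = [(t, wavelet_a) for t in fire_a] + [(t, wavelet_b) for t in fire_b]
trace = blend(shots, record_len=20)

# Align the continuous record to source A's firing times.
windows_a = cut_windows(trace, fire_a, window=4)
for w in windows_a:
    print(w)
```

In the first window, source B's shot overlaps source A's signal; in the second it does not. A production workflow would go on to remove that inconsistent energy, a step this sketch leaves out.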

Among the three, wide azimuth is the most mature technology. It was first used a decade ago by BP, which needed a way to image reservoirs obscured by thick salt layers, and was quickly embraced by other companies as a way to reduce the risk of subsalt exploration and production.

Simultaneous sound source surveys are widely used on land and have been moving offshore to speed survey work. Etgen said the method met the two prerequisites for seismic advances: it delivered “higher quality data than you had before, without breaking the bank.”

Multisensor streamers are the latest addition to a long list of methods lumped under the heading of broadband seismic. They are generally aimed at gathering more of the ultralow frequency signals, as well as some hard-to-get higher frequencies. A broader range of wavelengths is valuable for determining the rock and fluid properties of a reservoir. Adding new types of sensors could also open the door to new subsurface imaging approaches.

There are other techniques that could make the list. Longer lasting battery-powered receiver nodes with multiple types of sensors inside are being used more frequently to create ocean-bottom arrays for detailed imaging that can track changes over time. Multiple industry partnerships are working on new offshore sound sources in hopes of creating a device whose output can be controlled to emit more of the scarce frequencies, and reduce the environmental problems related to seismic work.

Both present difficult technical challenges. The nodes could be used to create dense arrays like those used on land, but lower-cost methods are needed to place them accurately. Vibrating sound sources able to generate more of the valuable lower frequencies are the target of multiple research partnerships, but have proven elusive.

While there is no predicting where these changes will lead, Liner was optimistic about the potential payoff. “The change from narrow to rich azimuth exceeded all the advances experienced in 10 years of algorithm development,” Liner said. That was just the start, he said: “We are in a renaissance of seismic data acquisition. People will look back and say what a game changer that was.”

Multiplying Channels

While the pace of change in seismic is irregular, there has been a steady rise in the data collected. One measure is the number of marine seismic channels used on an average survey, which has followed the general rise in computer power, according to a chart from Monk.

A common feature of all the acquisition improvements is that they generate far more data, requiring higher-powered computers and more efficient algorithms to turn all that data into useful information. For example, the first wide-azimuth survey gathered more than eight times the data of the conventional designs, Etgen said.

The processing power needed to handle the data gathered by Schlumberger’s new generation of multisensor streamers is so great that one feature that stands out in the vessels used for those surveys is the unusually powerful data-processing system on board.

“The data recorded is just mind-boggling,” said Craig Beasley, a Schlumberger Fellow and chief geophysicist for WesternGeco. A good example is the output of its most advanced multisensor streamer, sold under the IsoMetrix brand. “There are more than 500,000 sensors in the array recording every 2 milliseconds. That is 500 samples a second per sensor and we will record 24 hours a day if we can,” he said.
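The scale of those figures is easier to appreciate when multiplied out. The back-of-the-envelope sketch below uses only the numbers in the quote, treating 500,000 sensors as a lower bound. The 4 bytes per sample is an assumption added for illustration and is not a figure from Schlumberger.

```python
SENSORS = 500_000             # "more than 500,000 sensors" (lower bound)
SAMPLE_INTERVAL_MS = 2        # one sample every 2 milliseconds
SECONDS_PER_DAY = 24 * 60 * 60
BYTES_PER_SAMPLE = 4          # ASSUMPTION: 32-bit samples, not from the source

# 1000 ms / 2 ms = 500 samples a second per sensor, as stated in the quote
samples_per_second_per_sensor = 1000 // SAMPLE_INTERVAL_MS

# Whole-array sample rate and the total for a full day of recording
samples_per_second = SENSORS * samples_per_second_per_sensor
samples_per_day = samples_per_second * SECONDS_PER_DAY

# Rough storage estimate under the assumed sample size
terabytes_per_day = samples_per_day * BYTES_PER_SAMPLE / 1e12

print(f"{samples_per_second:,} samples per second")
print(f"{samples_per_day:,} samples per day")
print(f"about {terabytes_per_day:.1f} TB per day at {BYTES_PER_SAMPLE} bytes per sample")
```

At those rates the array produces at least 250 million samples every second, or more than 21 trillion samples in a day of continuous recording.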

Progress in data acquisition only happens when what has been gathered can be processed using readily available equipment. “Acquisition and processing are really intertwined,” Beasley said.

At the heart of BP’s push to be a technological leader in seismic is its Center for High-Performance Computing in Houston, one of the world’s largest high-performance computing centers for research. Since it opened in 2014, the company has increased the center’s processing speed by more than 70% to 3.8 petaflops, each of which is 10^15 calculations per second.
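For readers who want the arithmetic, a petaflop is 10^15 floating-point operations per second. The short sketch below converts the quoted speed and works backward to the highest starting speed that would be consistent with a gain of more than 70%. The variable names are illustrative, and the starting speed is only a bound implied by the two quoted figures, not a number reported by BP.

```python
PETA = 10**15                 # one petaflop = 10^15 operations per second

current_pflops = 3.8          # speed quoted in the article
min_increase = 0.70           # "more than 70%" means at least this much

# Total operations per second at the current speed
current_flops = current_pflops * PETA

# If speed grew by more than 70%, the starting speed was below this value
max_previous_pflops = current_pflops / (1 + min_increase)

print(f"{current_flops:.2e} operations per second")
print(f"implied starting speed: under {max_previous_pflops:.2f} petaflops")
```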

BP uses its computers to simulate how well various survey methods would perform based on what is known about the subsurface. Schlumberger has created a group that evaluates how various seismic survey designs will perform in a given reservoir.

The need for simulation grows as the options grow. From a technical standpoint, combining simultaneous shooting and multisensor receivers in a wide-azimuth design can have a cumulative benefit. But that is not a sure thing.

“If you combine them, you get some serious uplift. If used separately, you get some good uplift,” Liner said. “You want to do it all, but everything costs money. Everything has to be designed in. It doesn’t just happen.”