Once the stuff of science fiction, artificial intelligence (AI) has become ubiquitous in our daily life, and the modern oil and gas industry is no exception.

Artificial neural networks, fuzzy logic, and evolutionary algorithms are common among AI techniques being applied today in oil and gas reservoir simulation, production and drilling optimization, drilling automation and process control, and data mining.

“Today, when you talk to information technology (IT) people, they mention four trends: social media, mobile devices, the cloud, and big data,” said Reid Smith, manager of IT Upstream Services at Marathon Oil, and a fellow of the Association for the Advancement of Artificial Intelligence.

Social media are in everyday use for collaboration; mobile devices are proving valuable in field operations; cloud computing has the potential to deliver cost savings and increased flexibility and performance in networking and data management; and hyperdimensional, complex, big data are well suited for analysis by machine learning, which is a key element of today’s applications of AI.

In addition to a large existing volume of historical oil and gas data, today’s increasingly complex upstream environments generate vast amounts of data for which the value is greatly enhanced with cutting-edge IT. “Some argue there is a substantial amount of oil to be found by applying new analysis techniques to data already on the shelf,” Smith said.

It is important to distinguish between data management and AI. SPE’s Artificial Intelligence and Predictive Analytics (AIPA) group was previously a subsection of SPE’s Digital Energy Technical Section. Now it constitutes its own technical section, Petroleum Data Driven Analytics. Essentially, digital energy is the curating—gathering, storage, and generation—of data, whereas AIPA involves using the data to perform tasks without human intervention, said Shahab D. Mohaghegh, professor of petroleum and natural gas engineering at West Virginia University, and founder of oilfield consulting and software company Intelligent Solutions, Inc. (ISI).

“There is a push in our industry toward smart fields,” Mohaghegh said. “There is a misconception in our industry today that equates automation with intelligence. Just because an operation is automated does not mean that it is smart. Without AI, you may have automated fields, but you will not have smart fields. An automated field may provide the brain, but AI provides the mind. AI is the language of intelligence; it is what makes the hardware smart. To fully realize this, one must subscribe to a complete paradigm shift.”

A good modern example of the data-driven model outside the industry is credit card fraud detection and prevention tools that monitor consumer purchasing habits. “At ISI, that’s how we build reservoir management tools today,” Mohaghegh said. “We are now using the pattern recognition power of this technology to predict, manage, and design hydraulic fracture details in shale, using the hard data rather than soft data.”

Hard data refer to the field measurements that can readily be, and usually are, measured during the operation. In hydraulic fracturing, variables such as fluid type and amount, proppant type and amount, proppant concentration, injection and breakdown pressure, injection rates, and initial shut-in pressure are considered hard data. Soft data refer to variables that are interpreted, estimated, or guessed. Parameters such as hydraulic fracture half length, height, width, and conductivity, as well as stimulated reservoir volume are used as tweaking parameters during the history matching process and cannot be measured directly.

Reservoir Modeling

Uncertainty in reservoir exploitation is high when trying to predict how a tight rock formation will respond to an induced hydraulic fracture treatment. Uncertainty quantification can be better achieved by making appropriate use of complex or hyperdimensional reservoir data through AI, for example, by enabling the optimization of fracture spacing and fracture design models.

Within a period of 6 months, Mohaghegh’s AI-based reservoir modeling software performed a 135-well history-matching analysis of the Marcellus shale, “which is unheard of because numerical models that are built for shale are overwhelmingly single well models and are computationally very expensive,” Mohaghegh said. The power of pattern recognition through AI is what enables this kind of speed in history matching. The modeling software is a hybrid system, combining artificial neural networks, fuzzy set theory, and genetic algorithms.

ISI focuses on reservoir and production management, optimization of hydraulic fracturing, reservoir simulation and modeling, and analysis, prediction, management, and reduction of nonproductive time during drilling operations, as well as real-time data analysis as applied to full-field modeling in smart field applications.

The reservoir management software, for example, functions in three stages: data mining, data-driven modeling, and model analysis. First, the data, which is often hyperdimensional, is preprocessed for quality control, and is mined to discover hidden and potentially useful patterns.

The first step continues with identification of key performance indicators (KPIs), where the software determines the parameters that control the outcome. The relevant KPIs vary from field to field, and there can be hundreds of indicators for consideration on a given project.
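The article does not say how the software identifies KPIs. As a rough illustration only, the sketch below ranks candidate parameters by the strength of their linear correlation with an outcome. The correlation criterion, the parameter names, and the numbers are all assumptions made for the example, not the vendor's method.

```python
from math import sqrt

def pearson(xs, ys):
    """Pearson correlation coefficient between two equal-length sequences."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    spread_x = sqrt(sum((x - mean_x) ** 2 for x in xs))
    spread_y = sqrt(sum((y - mean_y) ** 2 for y in ys))
    if spread_x == 0 or spread_y == 0:
        return 0.0  # a constant column carries no information
    return cov / (spread_x * spread_y)

def rank_kpis(features, outcome):
    """Sort parameter names by absolute correlation with the outcome."""
    scores = {name: abs(pearson(values, outcome))
              for name, values in features.items()}
    return sorted(scores.items(), key=lambda item: item[1], reverse=True)

# Hypothetical hard data for five wells (illustrative values only).
features = {
    "proppant_amount": [100, 150, 200, 250, 300],
    "injection_rate": [60, 58, 61, 59, 60],
    "fluid_amount": [5000, 5200, 6100, 6000, 7000],
}
outcome = [1.0, 1.4, 2.1, 2.4, 3.1]  # hypothetical production indicator

ranking = rank_kpis(features, outcome)
for name, score in ranking:
    print(f"{name}: {score:.3f}")
```

A real workflow would have to handle nonlinear relationships and interactions among hundreds of parameters, which a simple pairwise correlation cannot capture.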

Fuzzy logic serves the pattern recognition function of the software. “Fuzzy logic is multivalued logic. It is the logic behind the natural language. In our tools it is applied in the pattern recognition function of the software,” Mohaghegh said. “Artificial neural networking is the key to learning those patterns that have been discerned.”
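To make "multivalued logic" concrete, here is a minimal sketch of fuzzy set membership and the standard min/max fuzzy operators. The triangular membership shape, the variable names, and the thresholds are assumptions chosen for illustration; the article does not describe how ISI's software defines its fuzzy sets.

```python
def triangular(x, left, peak, right):
    """Degree (0 to 1) to which x belongs to a triangular fuzzy set.

    Assumes left < peak < right.
    """
    if x <= left or x >= right:
        return 0.0
    if x <= peak:
        return (x - left) / (peak - left)
    return (right - x) / (right - peak)

def fuzzy_and(a, b):
    """Fuzzy conjunction using the minimum operator."""
    return min(a, b)

def fuzzy_or(a, b):
    """Fuzzy disjunction using the maximum operator."""
    return max(a, b)

def fuzzy_not(a):
    """Fuzzy complement."""
    return 1.0 - a

# Hypothetical measurements and fuzzy-set boundaries.
breakdown_pressure = 5500.0   # psi
injection_rate = 70.0         # bbl/min

high_pressure = triangular(breakdown_pressure, 4000.0, 6000.0, 8000.0)
high_rate = triangular(injection_rate, 50.0, 90.0, 130.0)

# Strength of the rule "pressure is high AND rate is high".
rule_strength = fuzzy_and(high_pressure, high_rate)
print(high_pressure, high_rate, rule_strength)
```

Unlike a Boolean test, each statement here is true to a degree, so a measurement can be partly "high" and partly "not high" at the same time.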

Evolutionary algorithms determine patterns that are relevant for the particular field being modeled, by making predictions, evaluating those predictions, then discarding incorrect predictions. “Genetic algorithms are rooted in Darwinian evolution theory. The same natural approach to human evolution is applied to fracturing a well,” Mohaghegh said. “It is a learning algorithm, an ensemble.”
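The predict, evaluate, and discard cycle can be sketched as a small genetic algorithm. This is a generic textbook loop, not the software described in the article: the two design variables, the toy fitness function, and all numeric settings are hypothetical.

```python
import random

def fitness(design):
    """Toy objective (hypothetical): best at spacing = 300, concentration = 0.5."""
    spacing, concentration = design
    return -((spacing - 300.0) ** 2) / 1e4 - (concentration - 0.5) ** 2

def random_design(rng):
    """Draw a random candidate design within assumed ranges."""
    return (rng.uniform(100.0, 600.0), rng.uniform(0.0, 1.0))

def crossover(parent_a, parent_b, rng):
    """Build a child by taking each variable from one of the two parents."""
    return tuple(rng.choice(pair) for pair in zip(parent_a, parent_b))

def mutate(design, rng):
    """Perturb each variable with a small random step."""
    spacing, concentration = design
    return (spacing + rng.gauss(0.0, 25.0), concentration + rng.gauss(0.0, 0.05))

def evolve(generations=50, size=30, seed=0):
    rng = random.Random(seed)
    population = [random_design(rng) for _ in range(size)]
    initial_best = max(population, key=fitness)
    for _ in range(generations):
        # Evaluate every candidate and discard the weaker half.
        population.sort(key=fitness, reverse=True)
        survivors = population[: size // 2]
        # Refill the population with mutated offspring of the survivors.
        children = [
            mutate(crossover(rng.choice(survivors), rng.choice(survivors), rng), rng)
            for _ in range(size - len(survivors))
        ]
        population = survivors + children
    return initial_best, max(population, key=fitness)

initial_best, best = evolve()
print("initial best:", initial_best, "final best:", best)
```

Because the survivors are carried over unchanged, the best candidate can never get worse from one generation to the next.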

In the second step, the data is parsed into machine-usable form, which renders the reservoir model. The model is developed based on measured data from the field with little to no interpretation involved. “We let the wells and the reservoir speak for themselves and impose their will on the model, instead of imposing our current understanding of the geology and physics on the model. The model is then validated by testing it with blind data during post-modeling analysis,” Mohaghegh said.
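Validation with blind data means holding some wells out of model building and checking the model only against those. The sketch below shows the idea with synthetic numbers and a straight-line fit standing in for the data-driven model; both are assumptions for illustration, since the actual software combines neural networks, fuzzy set theory, and genetic algorithms.

```python
import random

def split_blind(records, blind_fraction=0.2, seed=0):
    """Shuffle the records and set aside a fraction as blind data."""
    rng = random.Random(seed)
    shuffled = records[:]
    rng.shuffle(shuffled)
    n_blind = max(1, int(len(shuffled) * blind_fraction))
    return shuffled[n_blind:], shuffled[:n_blind]

def fit_line(pairs):
    """Least-squares straight line through (x, y) pairs."""
    n = len(pairs)
    mean_x = sum(x for x, _ in pairs) / n
    mean_y = sum(y for _, y in pairs) / n
    sxx = sum((x - mean_x) ** 2 for x, _ in pairs)
    slope = sum((x - mean_x) * (y - mean_y) for x, y in pairs) / sxx
    return slope, mean_y - slope * mean_x

def mean_abs_error(model, pairs):
    """Average absolute difference between prediction and measurement."""
    slope, intercept = model
    return sum(abs(slope * x + intercept - y) for x, y in pairs) / len(pairs)

# Synthetic (input, outcome) pairs for 20 hypothetical wells.
wells = [(float(x), 2.0 * x + 1.0 + (0.5 if x % 2 else -0.5))
         for x in range(1, 21)]

training, blind = split_blind(wells)
model = fit_line(training)           # the model never sees the blind wells
blind_error = mean_abs_error(model, blind)
print(f"trained on {len(training)} wells, blind error = {blind_error:.2f}")
```

The error on the blind wells, not the fit to the training wells, is the number that indicates whether the model can be trusted on new data.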

“Data always comes from a specific field, a specific model, a specific situation. It is always field by field,” Mohaghegh said. “Our software is a workflow that takes you from the data you have for a specific operation to a data-driven model, and finally to the analysis of the developed model for decision making.”

As summarized in technical paper SPE 161184, the 135 Marcellus shale wells were on multiple pads with different landing targets, well length, reservoir properties, and completion details. The resulting smart-model can easily be stored on a handheld mobile device.

Production Optimization

As in reservoir modeling, complex data is the name of the game in production optimization. But whereas reservoir modeling with AI is more academic and speculative in nature, oil and gas production optimization software gets closer to delineating the real-time risks of critical production operations.

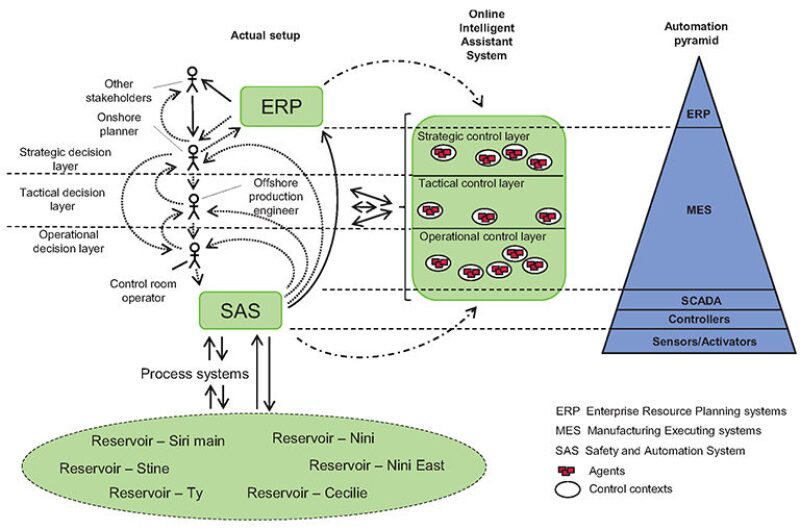

Operators of complex installations must focus on many tasks. Forgetting to start a pump, for example, may result in less efficient production. Multiple-objective control software in use at a pilot project in the Siri area offshore Denmark allows a level of operational control that can be handed over to the system. It does not relinquish critical functions such as emergency shutdown or fire suppression, but it does allow handoff of a significant level of control nonetheless, said Lars Lindegaard Mikkelsen of Dansk Olie og Naturgas Exploration and Production. Mikkelsen is a software engineer with a background in marine engineering, electronics, and offshore oil and gas automation.

“It’s a new layer on top of the existing control systems. We are not interfering with safety level. We are just adding a level on top of existing control systems,” Mikkelsen said. Existing control systems serve as the input source for the new layer, and configuration should not pose a problem, he said. “Software interfaces and programs exist, though older software tools could be more troublesome,” he said.

The stratified multi-objective control software is extensible, sizable, and can be run on a single processor or in parallel on a multicore server. The software architecture uses adaptive, self-reflecting genetic algorithm AI techniques. The software can be integrated with running systems of today to give direct information back to the system using closed-loop real-time feedback to optimize operational function.

The intent of the system is “to find the best possible solution (the approach is inspired by the economist Herbert Simon, who in 1956 introduced the concept of ‘satisficing’ as an approach to decision making), through multiagent software technology, and then using the genetic algorithm for the communication between the elements,” Mikkelsen said. The project is described in technical papers SPE 156946 and SPE 153815.

“We can right away increase production throughput. This is a step toward integrated operations because you have to make all your objectives explicit. We have an agent for each concern, and they are active all the time, for each part of the system,” Mikkelsen said. “We can let them run in parallel in small control contexts, which is very important, so we can add, update, and remove them from the running system.”
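The idea of one agent per concern, combined with satisficing (accepting the first option that is good enough for everyone instead of searching for a single optimum), can be sketched as follows. The agent names, scoring functions, thresholds, and candidate settings are hypothetical, and the sketch checks candidates in a plain loop; it does not reproduce the genetic algorithm the project uses for communication between elements.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List, Optional

Setting = Dict[str, float]

@dataclass
class ConcernAgent:
    """One agent per concern, with its own 'good enough' threshold."""
    name: str
    score: Callable[[Setting], float]
    threshold: float

    def satisfied(self, setting: Setting) -> bool:
        return self.score(setting) >= self.threshold

def satisfice(candidates: List[Setting],
              agents: List[ConcernAgent]) -> Optional[Setting]:
    """Return the first candidate acceptable to every agent, if any."""
    for setting in candidates:
        if all(agent.satisfied(setting) for agent in agents):
            return setting
    return None

# Hypothetical concerns and thresholds.
agents = [
    ConcernAgent("throughput", lambda s: s["pump_rate"], threshold=80.0),
    ConcernAgent("energy", lambda s: 100.0 - s["power_kw"], threshold=40.0),
]

# Hypothetical operating points proposed to the agents.
candidates = [
    {"pump_rate": 70.0, "power_kw": 40.0},
    {"pump_rate": 85.0, "power_kw": 55.0},
    {"pump_rate": 95.0, "power_kw": 58.0},
]

chosen = satisfice(candidates, agents)
print(chosen)
```

Because the agents live in an ordinary list, a concern can be appended or removed between calls without touching the others, which mirrors the add, update, and remove behavior Mikkelsen describes.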

A multiagent system approach seems also to solve the challenge of rigid architecture and inflexibility of production software, he said. “Most modern control software is fixed and quite static. When we call a vendor, the slightest change in a control system today is very expensive,” Mikkelsen said. However, the genetic algorithm deployed in the software makes it customizable, so the operator can add and remove new concerns on the fly, and an offshore planner can just use the parts of the system relevant to their project. There can be any number of parameters incorporated, so the system is applicable on very large, complex fields.

“The oil and gas domain has a tendency to become more and more complex. Operators cope with this challenge of complexity all the time,” Mikkelsen said. “This is a way of approaching all these new fields with deeper water and greater volumes of data. And maturing fields as well. We have also a lot of brownfield applications, and even the brownfields are getting more and more complex.”

Particle Swarm Optimization Uses Animal Group Behavior in History Matching

Particle swarm optimization (PSO) has two component methodologies. One relates to artificial life in general, and to bird flocking, fish schooling, and swarming theory in particular. The other relates to evolutionary computation, and has links to both genetic algorithms and evolutionary programming. PSO is a method for nonlinear optimization of functions that uses a simple algorithm and was discovered through the simulation of a simplified social model, said Richard W. Rwechungura of the Norwegian University of Science and Technology’s Integrated Operations Center. PSO is based on a simple concept and is fairly efficient when combined with parameter-reduction methods such as principal component analysis (PCA), he said.

Social sharing of information among particles offers an evolutionary advantage, and this hypothesis was fundamental to the development of PSO. The PSO algorithm is initialized with a population of random candidate solutions, conceptualized as particles. Each particle is assigned a randomized velocity and is iteratively moved through the optimization/search space. It is attracted toward the location of the best objective function value achieved so far by the particle itself (local best) and toward the location of the best objective function value achieved so far across the whole population (global best).

Rwechungura’s group used PSO for joint inversion of time lapse and production data to estimate porosity and permeability in the Norne field of the Norwegian Sea. Paper SPE 146199 describes the testing of a 2D semisynthetic model of the field. In paper SPE 157112, the same method is tested with 3D seismic data on the Norne field. “With parameterization using PCA, PSO yields acceptable results for our purpose in terms of these matches between observation and estimation, and also gives good distribution of the parameters to estimate porosity and permeability distribution,” Rwechungura said.

“Unlike genetic algorithms, evolutionary programming, and evolutionary strategies, in PSO, there is no selection operation. All particles in the PSO are kept as members of the population through the course of the run. PSO is the only algorithm that does not implement the survival of the fittest and crossover operation.”
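The update rule described in this sidebar can be sketched in a few lines. This is a generic PSO minimizing a toy test function, not the Norne history-matching workflow: the inertia and attraction coefficients, the search bounds, and the objective are assumptions for illustration, and the PCA parameterization is left out.

```python
import random

def pso(objective, dim, bounds, n_particles=20, iterations=100,
        inertia=0.7, c_personal=1.5, c_global=1.5, seed=0):
    """Minimize `objective` with a basic particle swarm."""
    rng = random.Random(seed)
    lo, hi = bounds
    # Random starting positions and small random velocities.
    positions = [[rng.uniform(lo, hi) for _ in range(dim)]
                 for _ in range(n_particles)]
    velocities = [[rng.uniform(lo - hi, hi - lo) * 0.1 for _ in range(dim)]
                  for _ in range(n_particles)]
    personal_best = [p[:] for p in positions]
    personal_value = [objective(p) for p in positions]
    leader = min(range(n_particles), key=lambda i: personal_value[i])
    global_best = personal_best[leader][:]
    global_value = personal_value[leader]
    initial_value = global_value

    for _ in range(iterations):
        for i in range(n_particles):
            for d in range(dim):
                r1, r2 = rng.random(), rng.random()
                # Pull toward the particle's own best and the swarm's best.
                velocities[i][d] = (
                    inertia * velocities[i][d]
                    + c_personal * r1 * (personal_best[i][d] - positions[i][d])
                    + c_global * r2 * (global_best[d] - positions[i][d])
                )
                positions[i][d] += velocities[i][d]
            value = objective(positions[i])
            if value < personal_value[i]:
                personal_best[i], personal_value[i] = positions[i][:], value
                if value < global_value:
                    global_best, global_value = positions[i][:], value
    return global_best, global_value, initial_value

def sphere(x):
    """Toy objective with its minimum of 0 at the origin."""
    return sum(v * v for v in x)

best, best_value, initial_value = pso(sphere, dim=2, bounds=(-5.0, 5.0))
print(best, best_value)
```

Every particle stays in the swarm for the whole run, so there is no selection or crossover step, in line with the contrast Rwechungura draws with genetic algorithms.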


Drill Floor Automation

In September, Norway’s Robotic Drilling Systems (RDS) signed an agreement with the United States National Aeronautics and Space Administration (NASA) to collaborate on the design of the company’s autonomous robotic drill floor control system, for which NASA contributes expertise gained from its remote interplanetary Mars rover missions, including the newest rover Curiosity.

“Our need for the autonomous abilities started back in 2008 when our main goal was to make a subsea drilling rig for exploration drilling that had to operate fully unmanned,” said Roald Valen, control system manager at RDS. “We knew from the traditional industry where robots are being used in automated systems that without the autonomous abilities, there would be a lot of tweaking and tuning of the system to get it right. Together with the fact that on the drill floor there are too many variables changing, we knew that it would be close to impossible to get it automated, so we had to include autonomous abilities. We contacted NASA and the Jet Propulsion Laboratory, and we have now started implementing their knowledge and technology into our system.”

Around 2005, RDS came up with the idea of putting a rig at the sea bottom, and the subsea roughneck system was finished in 2008. In 2010, a prototype of an automated robotic drill floor successfully tripped pipe in and out of the hole without human interaction, a demonstration that has been repeated several hundred times since then.

“If you go from manual work to automated work, then the next step is going for complex automated systems. Today our drill floor mainly consists of remotely operated machines requiring humans to supervise and control the activities and therefore also be the key factor in regard to the performance of the drill floor,” Valen said. “A good team can perform great while other teams might do a poor job, but for any processes involving humans, there is a chance of human error. Machinery may stop due to technical faults but it may also stop due to being used wrongly. By introducing autonomous abilities in our products, we will limit the chance of human error and get a more reliable system. The first reason to have the autonomous function is to prevent the big stops, the critical stops.”

Since 2010, RDS engineers have been asked to focus on topside rigs, and are now working on robotic machinery for topside use.

Measured on a 1-to-10 scale of machine automation vs. autonomous decision making, with 1 being the human performing every task, and 10 being the machine doing everything, the RDS rig would rank somewhere in the middle, Valen said.

“There is one thing I learned at a seminar at Johnson Space Center. The astronauts don’t want the system to fully take control. They want to be able to interact if necessary,” Valen said. “The operator needs to feel he or she is in control of the process. At least until the operator trusts the system.”

There is a learning curve for AI automation in the oil and gas industry and there is room for additional innovation. Valen compared it to the era when automobiles were first being equipped with antilock braking technology, but the technology was not yet perfected.

Past, Present, and Future

“The oil and gas industry has been on the leading edge of applying computer technology from the beginning,” Smith of Marathon said. “The industry got into the field of AI early in the game, back in the 1970s, 1980s, and 1990s. Today, AI technology is part of the standard IT tool box, and it is being applied all over the world.”

Different segments in oil and gas, such as maintenance, procurement, subsurface, and topsides operations, historically have been compartmentalized, working independently of one another. Those boundaries are becoming less defined because of the trend toward more integrated, intelligent operations. AI itself has an interdisciplinary nature, with its specialists coming from a variety of backgrounds that include neuroscience, signal processing, mathematics, statistics, and computer science.

One of the goals of AI in upstream operations is personnel strategy optimization to minimize risk to people. Supply chain optimization is another area where AI has excelled and been widely applied over the years in other industries. It may find application in new plays such as the Eagle Ford shale, where development is at a different scale and pace in terms of the number of wells drilled, Smith said.

The boundaries among the upstream disciplines have become less defined, but an integrated, intelligent system dealing with the total exploration and production operation has not yet emerged, Smith said. “However, we have all been surprised by how far you can get with big data, coupled with the relatively simple models. We can see this in action today with online translation and speech recognition systems. I expect that trend to continue to play out in industries that depend on large amounts of data, and ours would be one of them,” he said.