As projects have grown more complex, the chances for human error have gone up, and the consequences of error have become more significant. An expert argued that much human error stems from a lack of awareness of what is happening in difficult and dynamic environments. This awareness, the expert said, is necessary for making good decisions.

At a joint forum (“Human Factors to Support Safer and Effective Offshore Energy Operations”) hosted by the Ocean Energy Safety Institute and the Human Factors and Ergonomics Society, Mica Endsley examined the role situation awareness plays in drilling operations, as well as the ways in which system design can help improve situation awareness among workers. Endsley is the president of SA Technologies, a cognitive engineering firm, and is a former chief scientist of the US Air Force.

The Basics of Situation Awareness

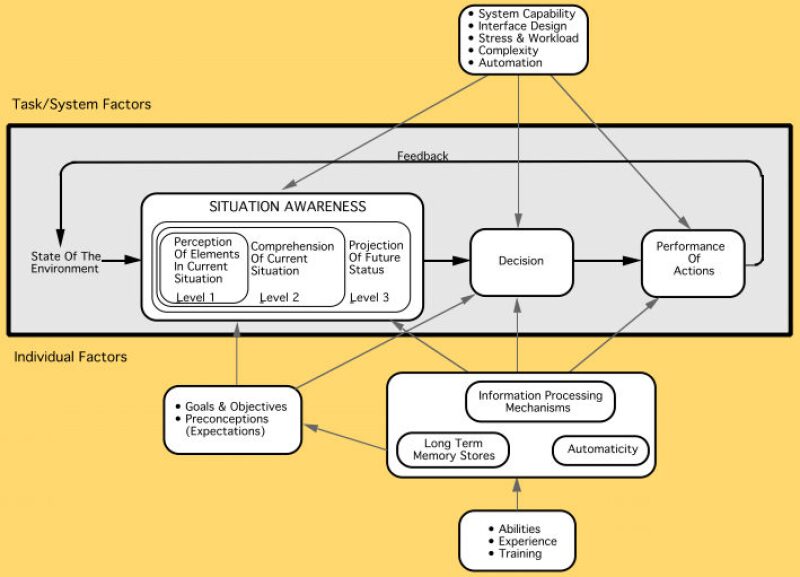

Situation awareness is defined as the perception of elements in the environment within a volume of time and space, the comprehension of their meaning, and the projection of their status in the near future. Endsley said it is critical for effective decision making. Onsite workers with strong situation awareness can often anticipate issues that may arise with a project, or the effects of activities on safety.

“When we look at where people screw up and where they have problems doing things, they’re often doing the right decision for what they think is happening, but they have the wrong picture of what’s going on. If you want to solve this human performance with checklists and procedures, that’s great, but there’s still this whole problem of going down and understanding what’s the situation or what procedure will be employed at this time,” Endsley said.

Fig. 1 shows a cognitive model of situation awareness that focuses on how the human brain gathers and processes information. To keep from being overwhelmed by information, people develop mental models of how their environment works. These models give them a basis for reading data and understanding how it fits into the larger operational system. People also develop schemas, recognized classes of situations that often form the basis of decision making.

Goals are also a key factor in situation awareness. Some sectors of an organization may be focused on performance, others on safety, and Endsley said those goals can sometimes conflict. She said there is an ongoing struggle between goal-driven mental processing and data-driven processing that affects overall performance. A data-driven person may be great at reading data but inefficient at interpreting it, or may not be focused on the overall mission for a project. A goal-driven person may be great at interpreting data but inefficient at finding the right data to interpret.

Endsley said a person’s goals in the organization typically depend on the role that person plays in the organization.

“Your goals are really defined by who you are in the organization,” she said. “Are you the driller? Are you the drilling engineer? Are you the guy out there on the deck who’s actually a logger? What is your role in the organization? Those roles and those goals are important in determining what information you find important, how you interpret that information, and what projections you’re trying to make.”

“Demons” and Other Obstacles

Besides the tension between goal-driven and data-driven processing, organizations can also suffer when workers have inadequate mental models of their environments. Endsley said inadequate models can arise from a number of situations, from a lack of experience within a company to the introduction of automation in a system. A worker without a solid mental model will not be effective at projecting outcomes.

Endsley listed several environmental factors, dubbed “SA Demons,” that make situation awareness difficult to accomplish. One such barrier is attentional tunneling, where an individual fixates on one set of information to the exclusion of all others. Another demon is the requisite memory trap, in which individuals forget vital information due to an overworked working memory.

Workload, anxiety, fatigue, and other stressors (WAFO) also make humans prone to error by straining their limited working memory. Endsley said that a person can get fatigued easily if he or she tries to process too much information at once, and additional stressors can constrain that memory even further and diminish attention span.

“People get less efficient at gathering information,” she said. “They will just gather a few bits and then move on to the decision instead of gathering all the information that they should, so the attention-gathering is kind of scattered. It’s not as systematic. You get this premature closure.”

Endsley said the best way to combat WAFO is to develop consistent habits for stressful situations rooted in long-term memory, thus eliminating the need for workers to rely on their limited working memories. She used fire drills as an example of a habit-forming exercise: Under stress, it is easier for people to react appropriately to a fire by pulling the proper protocol from their long-term memories.

Data overload is another common situation awareness demon. It arises when the volume of information coming from a system is high and rapidly changing. Endsley said that companies can help by altering the data flows coming into information pipelines, making them more manageable for workers.

Misplaced salience occurs when a system design draws worker attention toward less important features of that system. Endsley said the human mind can be easily manipulated by potent stimuli like bright colors, lights, movement, and loud noises. If those stimuli are misleading, they can lead a worker to ignore important data and possibly miss the warning signs of a brewing incident.

“If you load up a webpage and that thing’s jumping up and down, it takes a lot of energy to focus on the stuff you need to be reading and the stuff you need to be looking at,” she said. “We have to make sure that the kinds of features that you make salient on your displays and your alarms are really the most important things, not the 16 things that someone else thought were most important that you have to fight through and figure out which one is which.”

Complexity creep is the difficulty workers face in developing a good mental model of the workings of increasingly complex systems, as well as the adoption of complex systems for the sake of adopting them. Endsley said companies incorporate these systems without fully understanding how they can improve operational efficiency, which can lead to inefficient usage: Workers use only small subsets of the system and often misinterpret how those systems are working.

“We do this to ourselves,” Endsley said. “We say, oh, I want to buy the system that has 15 features instead of 12 features because 15 must be better than 12. That escalates and escalates until we have all this stuff but we don’t know how to work any of it.”

Out-of-the-loop syndrome involves low situation awareness of the state and performance of an automated system. In this situation, workers are slow to detect problems with a system and, when a problem occurs, they are slow to regain enough of an understanding to manually override the system.

Endsley said this problem is directly related to complexity. By introducing more complex automated systems, companies fundamentally alter the awareness of the workers interacting with those systems, shifting their workload from manual tasks to cognitive monitoring tasks. She said humans are often poor monitors because the mind cannot sustain long periods of continuous vigilance, which fades after about 20 to 30 minutes.

“Even when people are monitoring stuff, when they’re actively looking at it, they are still slower to understand what’s happening and they still don’t have that same understanding as if they were manually doing it themselves. Sometimes what’s happened is that the designers designed out the information. Other times it’s just that the data are all there but you don’t understand it as well. We’ve turned people from active processors to passive processors,” she said.

Improving Design and Communication

Endsley said projects have an inherent technology problem, primarily caused by the piecemeal installation of disparate system components on-site. Workers transitioning to automated computerized systems are often left to figure out the intricacies of these components, running the risk of mental overload. This overload may cause an information gap, in which workers fall behind in processing the data generated by the automated systems, and that gap ultimately leads to mistakes.

“We have lots of technologies that are thrown in there and we make the human adapt to that,” she said. “That’s one of the major flaws, because people can only adapt so far. The end result is human error, because they’re the last person in the chain.”

To help combat the information gap, Endsley said companies should develop user-centered design philosophies, taking all of their available data and integrating and processing it in ways that decision makers can quickly and easily digest. She said such a design philosophy better fits human capability.

“We can improve safety, we can reduce injuries, the users are actually quite happy with that because they’re tired of having to fight across all these systems to get what they need, and we can really improve productivity. To me this is really a win-win. It’s a win for product safety, it’s a win for productivity and performance if we can do this sort of thing effectively,” she said.

Endsley said shared situation awareness is an important component of the successful functioning of collocated and distributed teams within an organization. Companies need good shared situation awareness tools to communicate key information and help make sure that everyone understands the significance of the data. These tools, she said, need to be carefully tailored to the needs of different team members and flexible enough to support changing priorities.