In my introduction to the February 2013 column about heuristics and biases written by Dale Griffin and Thomas Gilovich, I invited readers to comment on the conflicts between their school of thought and the naturalistic decision-making perspective presented by Gary Klein in the February 2012 column.

John Boardman and Joe Collins took me up on the invitation. We traded a few emails on the subject, and the final result is the article below, followed by my response to a couple of points on which we do not completely agree.

Howard Duhon, GATE, Oil and Gas Facilities Editorial Board

Authors’ Disclaimer: We are engineers, not psychologists or behavioral economists. Our views are based on our experiences over the course of more than 40 years in the upstream oil and gas industry. Our fascination with risk and uncertainty, and our subsequent enquiry into the role of psychological factors, biases, and heuristics in the industry, were spurred by an iconic SPE paper by Ed Capen (1976).

Our knowledge and understanding of psychological factors have been shaped by the work and publications of Daniel Kahneman, who was awarded the 2002 Nobel Prize in economic sciences and is notable for his work in the psychology of judgment and decision making, behavioral economics, and hedonic psychology. With Amos Tversky and others, Kahneman established a cognitive basis for common human errors that arise from heuristics and biases (Kahneman and Tversky 1973). We have also been influenced by Richard Thaler, distinguished service professor of behavioral science and economics at the University of Chicago’s Booth School of Business, a theorist in behavioral finance and a collaborator of Kahneman’s. A further influence is Stephen Begg, head of school and professor of petroleum engineering and management at the University of Adelaide in Australia, whose research and teaching address decision making under uncertainty, asset and portfolio economic evaluation, and the effects of psychological and judgmental factors on those evaluations.

To the extent that our layperson interpretations do not accord with those of the experts, the (mis)interpretations are solely our responsibility and in no way reflect on the quality of the work conducted by the authors cited.

We have read with interest the columns by Klein and by Griffin and Gilovich. Contrary to Duhon’s view, we see no conflict between the views expressed by the respective authors. We do, however, believe that Klein is imprecise in his use of “experience” and “expertise.” In most instances in which he wrote “expertise,” he was referring to experience.

We accept the reality that swift, intuitive judgment is the primary decision-making modus operandi of experienced engineers in emergency situations. It goes without saying that the more theoretical knowledge and practical experience the decision maker possesses, the better his or her intuition.

Incidents such as the Macondo blowout in the US Gulf of Mexico in 2010 and the uncontrolled release of oil and gas from the Montara wellhead platform in the Timor Sea in 2009 have given us grave concern that both are portents of the possible consequences of the “great crew change.” Industrywide, 50% of the experienced personnel of international oil companies are expected to retire in the coming decade. At Macondo and Montara, responsibilities devolved to personnel with less experience than the industry has historically enjoyed. (For example, computing skills may be brilliant, but the intuition and the ability to quickly form a mental picture of how abnormal events will escalate are lacking.) In situations requiring immediate decisions, we do not have the luxury of time-consuming evaluation and consensual decision-making processes. We want someone who “knows” what to do, and we trust that he or she has had sufficient life experience that the unconscious pattern matching, heuristics, and gut “feel” he or she relies on will prevent a catastrophe.

By contrast, in a noncrisis situation, the environment that characterizes most of our lives, these heuristics, biases, and other psychological factors do us a disservice. The effects noted by Griffin and Gilovich have also been documented by other authors. It appears to us that psychologists, behavioral economists, and academics are well aware of these factors, which are generally acknowledged as fundamental characteristics of our behavior.

The problems are:

a) Few people in the upstream oil and gas industry, particularly project teams, are aware of these behavioral issues.

b) The psychologists, with few exceptions, seem content to identify the existence of these behaviors, but are not motivated to help us redress the consequences.

From our own work and that of others, it is an irrefutable fact that the majority of large exploration and production (E&P) projects fail to satisfy the budget and schedule approved at the final investment decision (FID) (Fig. 1).

Although there may be unique reasons associated with each project, we believe that the underlying root cause of large overruns is the failure to adequately identify and manage the risks and uncertainties that characterize the project.

Despite, or perhaps even because of, increasing levels of sophistication in project planning and management over the past 40 years, individually and collectively, project engineers are prone to a number of psychological effects that lead to over-optimism and disregard of risks.

Research by Begg and Bratvold (SPE paper 116525) and the firsthand industry experience of RISC have identified several dominant cognitive biases, heuristics, and other psychological effects that significantly affect major project cost and schedule.

Materially improving capital management requires greater recognition and acceptance of the limitations of personal knowledge and of psychological effects, together with more insightful risk identification and improved risk management.

Our central thesis is that if the risks that characterize activities are better identified and managed, the final cost will be lower and the schedule shorter (Fig. 2).

The addition of greater contingency is not the objective.

We have identified a number of reasons for project overruns, including typical human behaviors, such as

- Confusing accuracy with confidence as information increases.

- Believing that sophistication reduces risk.

- Underestimation of the time required to complete tasks, such as drilling a well, laying a pipeline, and commissioning a plant. Schedule delays can cause large cost increases because of the high cost of specialist installation and commissioning personnel and equipment.

- Scope changes because of poor system definition, lack of rigor in the gated process, poor project/operations communication, and preferential engineering.

- Ignoring dependencies and interdependencies.

- Poor risk management, lack of contingency plans, and ineffectual contractual protection.

- Inadequate project management, ineffective interface management, poor communication, and low resource utilization.

A common denominator found in these factors is that cognitive biases and heuristics lead to over-optimism and disregard of risk. The psychological effects we observe as being most likely to be prevalent in project teams are:

- Overconfidence effect: “We are 90% certain that it will cost...” Studies show that people’s confidence consistently exceeds their accuracy; that is, people are more sure that they are correct than they deserve to be.

- Superiority bias: “We can do it better than anyone else.” This bias causes people to overestimate their positive qualities and abilities and to underestimate their negative qualities relative to others.

- Optimism bias: “That won’t happen to us.” People believe that they are less likely than others to experience a negative event.

- Anchoring: “But it used to cost less.” This occurs when individuals rely too heavily on a specific piece of information to govern their thought processes. The first information learned about a subject can affect subsequent decision making and information analysis.

- Hindsight bias: “We know what they did wrong.” This is the inclination to see events that have already occurred as more predictable than they were before they took place.

- Planning fallacy: “I can do that in no time.” This is the tendency of people and organizations to underestimate how long they will need to complete a task, even when they know that similar tasks have overrun in the past.
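One practical way to test the overconfidence effect against a team’s own track record is a calibration check: collect the ranges a team said it was 90% certain of and count how often the actual outcome fell inside them. The sketch below is illustrative only and is not part of the authors’ process; the function name `interval_hit_rate` and the sample ranges and outcomes are hypothetical.

```python
def interval_hit_rate(ranges, actuals):
    """Return the fraction of actual outcomes that fell inside the stated ranges.

    ranges  -- list of (low, high) tuples, e.g. stated 90% confidence ranges
    actuals -- list of realized values, in the same order as ranges
    """
    if not ranges or len(ranges) != len(actuals):
        raise ValueError("need one actual outcome per stated range")
    hits = sum(1 for (low, high), actual in zip(ranges, actuals) if low <= actual <= high)
    return hits / len(ranges)


# Hypothetical stated 90% cost ranges ($ million) and hypothetical actual costs.
stated_ranges = [(90, 110), (180, 220), (45, 55), (270, 330), (95, 105)]
actual_costs = [125, 205, 61, 300, 140]

print(interval_hit_rate(stated_ranges, actual_costs))  # 0.4
```

If ranges described as 90% ranges capture the actual outcome far less than 90% of the time over a reasonably large set of past projects, they are too narrow. Five estimates, as in this toy example, are far too few to draw a firm conclusion.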

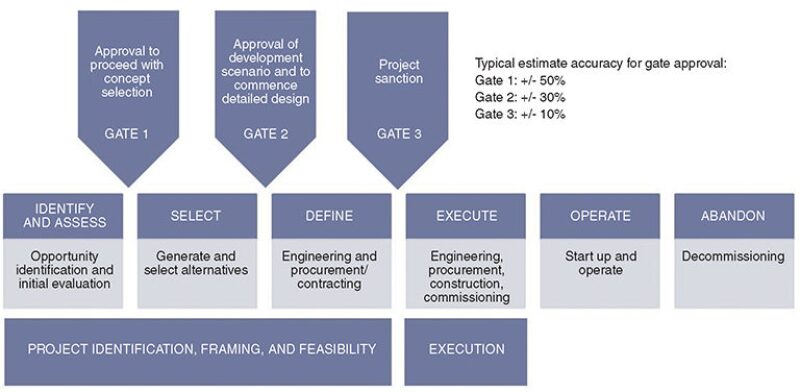

We have also identified a motivational bias, which we believe leads to an implicit denial of the risks that characterize project development. If a risk is not recognized, it cannot be managed effectively. The motivational bias arises from the way in which the gates in a typical gated process are managed (Fig. 3).

In most organizations with which we work, each gate of the project development cycle has a set of prescriptive criteria that must be satisfied before the project can progress to the next phase. At the FID gate, cost and schedule estimates are generally required to fall within a range of +/-10%, or thereabouts. Because project engineers are motivated to “go build projects” rather than push paper, and because they know that they will not be allowed to build until they have satisfied the estimating accuracy criteria, what range do you think they will derive? The problem is that once the cost estimate is represented as having an accuracy of +/-10%, it is difficult to justify spending resources and money on contingency plans for events that could lead to overruns of 20%, 30%, or 40%.
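One way a tight FID range can arise, tied to the “ignoring dependencies” behavior listed earlier, is a cost roll-up that treats line items as independent. The Monte Carlo sketch below compares the P10 to P90 range of the total when line items are independent with the range when they share a common driver. It is our illustration, not the authors’ method: the function name, the 40 line items, the 15% per-item standard deviation, and the one-factor correlation model are all hypothetical assumptions.

```python
import random
import statistics


def p10_p90_total(n_items, item_sd, rho, trials=5000, seed=42):
    """Monte Carlo P10/P90 of a cost roll-up, as a fraction of the base estimate.

    Each of n_items line items has a base cost of 1.0 and an actual cost of
    1 + item_sd * z_i. The z_i are standard normal and share one common factor,
    so any two items have correlation rho (0 <= rho <= 1).
    """
    if not 0.0 <= rho <= 1.0:
        raise ValueError("rho must be between 0 and 1")
    rng = random.Random(seed)
    shared_weight = rho ** 0.5
    own_weight = (1.0 - rho) ** 0.5
    totals = []
    for _ in range(trials):
        common = rng.gauss(0.0, 1.0)  # driver shared by every line item
        total = 0.0
        for _ in range(n_items):
            z = shared_weight * common + own_weight * rng.gauss(0.0, 1.0)
            total += 1.0 + item_sd * z
        totals.append(total / n_items)  # total as a fraction of the base total
    cuts = statistics.quantiles(totals, n=10)  # nine decile cut points
    return cuts[0], cuts[-1]  # P10, P90


# Hypothetical roll-up: 40 line items, each with a 15% standard deviation.
for rho in (0.0, 0.5):
    p10, p90 = p10_p90_total(40, 0.15, rho)
    print(f"rho={rho}: P10={p10:.2f}, P90={p90:.2f}")
```

Under these assumptions the independent case yields a P10 to P90 range of roughly +/-3%, comfortably inside a +/-10% criterion, while a correlation of 0.5 widens it to roughly +/-14%. The exact figures depend entirely on the assumed inputs; the point is only the direction and size of the effect.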

We question the credibility of cost estimates for many of the large E&P projects, which are increasingly characterized by environmental constraints, landowner issues, social license conditions, and inexperienced or noncompliant workforces, and which continue to push technological boundaries.

A one-size-fits-all gate drives the wrong behaviors.

Based on our observations and experience, we make the following assertions:

- Cognitive bias prevents the project team from generating credible estimates of the range of uncertainties that characterize the project. Discipline experts are overconfident and also driven by company policy to provide estimates that are too tight.

- Probability analysis generally excludes low-probability, high-impact events from consideration, which leads to an underestimation of the range of uncertainty. “Black swan” events occur rarely but have high impact, and they become likely to occur when a megaproject is made up of many hundreds of small projects spread over 5 years or more.

- Not enough effort is put into getting “unknown unknowns” onto the project risk register. This type of risk is more likely to occur on highly complex, first-in-class projects. Risk registers are generally compiled by discipline experts in the project team. These engineers draw on their experience and knowledge. By definition, an unknown unknown will not have been experienced previously. The majority of the experts will have similar experience and similar thought processes by virtue of their training and positions. Little innovative or outside-the-box thinking can be accomplished by such a group. In this type of culture, the situation is exacerbated by the rejection of thoughts and ideas perceived as coming out of left field.
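The arithmetic behind the black swan assertion above is simple under one simplifying assumption: that each small activity independently carries the same small probability p of a high-impact event. The chance that at least one of n activities is hit is then 1 - (1 - p)^n. The function name and the figures below (500 activities, 0.5% each) are hypothetical and chosen only for illustration.

```python
def prob_at_least_one(p_event, n_activities):
    """Probability that at least one of n_activities independent activities,
    each with per-activity event probability p_event, experiences the event."""
    if not 0.0 <= p_event <= 1.0:
        raise ValueError("p_event must be between 0 and 1")
    return 1.0 - (1.0 - p_event) ** n_activities


# Hypothetical: 500 small activities, each with a 0.5% chance of a high-impact event.
print(round(prob_at_least_one(0.005, 500), 2))  # 0.92
```

An event that looks negligible for any single activity becomes very likely across the megaproject as a whole. If the activities are not independent, the result will differ, so this is best read as an order-of-magnitude illustration.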

In conjunction with Begg, we have developed a number of practical process initiatives that project teams can put in place to increase the reliability of FID cost and schedule estimates and, most importantly, to more effectively manage risks to reduce cost overruns and project slippage.

The key elements of this process are:

- Explanation of cognitive biases, heuristics, and psychological effects, and demonstration that each of us, individually, is subject to them.

- Identification of those biases most prevalent in engineers and project teams.

- Demonstration of the likely effects of these biases on FID estimates of cost and schedule.

- Reaching agreement on personal and collective approaches that ameliorate the effect of these biases, recognize more of the unknown unknowns, and identify effective risk mitigation strategies.

- Defining a process that aids bias recognition and mitigation; supports the identification, monitoring, and reporting of key risks; and provides feedback loops for continuous improvement.

For Further Reading

Capen, E.C. 1976. The Difficulty of Assessing Uncertainty. Journal of Petroleum Technology 28 (8): 843–850.

Haidt, J. 2012. The Righteous Mind: Why Good People are Divided by Politics and Religion. Pantheon.

Kahneman, D. and Klein, G. 2009. Conditions for Intuitive Expertise: A Failure to Disagree. American Psychologist 64 (6): 515–526.

Kahneman, D. and Tversky, A. 1973. On the Psychology of Prediction. Psychological Review 80 (4): 237–251.

Mercier, H. and Sperber, D. 2011. Why Do Humans Reason? Arguments for an Argumentative Theory. Behavioral and Brain Sciences 34 (2): 57–111.

Begg, S. and Bratvold, R.B. Systematic Prediction Errors in Oil and Gas Project and Portfolio Selection. SPE 116525.

Finding Value in Biases and Heuristics and Naturalistic Decision Making

Howard Duhon, GATE

Boardman and Collins claim that a common denominator in the factors leading to project overruns is that cognitive biases and heuristics lead to over-optimism and disregard of risk.

I have long been a fan of the work of Amos Tversky, Daniel Kahneman, and others in the psychology of biases and heuristics, but I am also a fan of some people who disagree, including Gary Klein and other proponents of naturalistic decision making (NDM). I think that many people misinterpret the NDM work and conclusions. Although NDMers study decision making by people in highly stressful situations, that does not mean that their findings apply only in those situations.

We do not face high levels of stress on a routine basis; however, all decisions involve some level of stress. Engineers doing design calculations face deadlines and budgets. Many of our decisions are made intuitively by engineering judgment; it cannot be any other way. There is not enough time to exhaustively evaluate every problem—we must pick our battles.

A better way to distinguish the biases and heuristics school from naturalistic decision making was discussed by Kahneman and Klein (2009). They distinguished between high-validity and low-validity environments. They asked and answered the key question: “Under what conditions are the intuitions of professionals worthy of trust?” The paper concluded that two things are required:

- An environment of high validity (stable relationships between cues and subsequent events) is a necessary condition for the development of skilled intuitions.

- Opportunity for routine valid feedback, so that the expert can learn valid lessons from the environment.

The oil field is a high-validity environment. Engineering design is a high-validity environment. Experienced engineers and operators frequently come up with the right answer to a complex problem with limited information. Engineers make many decisions naturalistically, using intuition and engineering judgment. So one would expect that the findings of the naturalistic decision-making school of thought would be more valuable to engineers than those of the biases and heuristics school.

Where biases and heuristics researchers view heuristics as sources of bias that lead to error, naturalistic decision making sees these same heuristics as sources of strength that allow us to make rapid judgments—judgments that are usually correct. Naturalistic decision making focuses on methods to improve judgment in stressful, data-limited environments.

I am not arguing that we should choose to follow either biases and heuristics or naturalistic decision making. Both have value. The ideal situation would be that a) our heuristics work well most of the time, and b) we identify and correct the errors they occasionally create.

There is reason to believe that individuals will perform poorly in identifying and correcting their own errors. In this respect, I would like to call the reader’s attention to an important line in Boardman and Collins’s article that is easily missed. One of their suggestions includes the phrase: “Reaching agreement on personal and collective approaches that ameliorate the effect of these biases...”

Identifying collective approaches to mitigate biases is a key point. One might assume that people trained in the ways of heuristics will use reason to correct the errors that result from intuition. Research by Mercier and Sperber (2011) suggests that this is not the case—individual reasoning usually makes people more certain that their intuition is correct. The evidence in their research is that individuals working alone do not typically correct their own biases. Reasoning can correct our biases, of course, but it usually has to be the reasoning of others.

So the ultimate answer seems to me to be:

- Teach individuals to use heuristics more effectively.

- Train teams to effectively root out and correct the biases in their teammates’ intuitions.

John Boardman is the vice chairman of RISC. He has more than 40 years of international experience in the upstream oil and gas industry, spent with several major companies in Europe, the Middle East, Africa, and Southeast Asia. He founded RISC in 1994 as an independent advisory firm that integrates E&P technical and commercial disciplines to help clients make more judicious business decisions with greater confidence. Boardman is the SPE Regional Director for Southern Asia Pacific. He is an SPE Life Member and an Ed Capen disciple. He can be reached at johnb@riscadvisory.com.

Joe Collins joined RISC in 2012, conducting due diligence on cost and schedule estimates for E&P companies and banks. He has 9 years of experience as a chemical process engineer. He started his career in upstream exploration as a field engineer before moving to the downstream industry and working in petrochemicals as a process engineer. He can be reached at joec@riscadvisory.com.