Executing a process automation project often involves tasks that are not directly related to automation, and the relevant data is frequently held in multiple places, requiring manual revisions that are prone to error. The industry is looking to digitalize project workflows to reduce these execution problems, and automation solutions such as digital twins and flexible automation networks are seen as a way to deliver projects faster while enabling more complete testing and simulation earlier in project development.

Shaun Johnston, global director, process solutions at Wood Automation and Control, said organizations have been, to some extent, victims of the energy industry's demands. Operators often execute projects on a global scale, using multiple offices, specialty centers, fabrication centers, and the like. On top of that, the list of standards and regulations under which companies must operate (health, safety, security, and social responsibility rules, both company-issued and government-issued, as well as other procedural standards) has grown rapidly.

Speaking at the inaugural SPE ENGenious Symposium in Aberdeen last month, Johnston said these standards have become more stringent, putting them “almost in complete conflict” with the industry goals of efficient project execution amidst tighter operational budgets.

“As technology moves forward, there’s always that gap between what it can do and what we can make it do in service of the project. Terms like ‘death by a thousand paper cuts’ probably apply here. Maybe the number of systems you’re spooning into an automation piece is just increasing and increasing. It’s not obvious at the onset how difficult that’s going to be,” Johnston said.

Despite those challenges, Johnston discussed how process simulation can provide a roadmap for more efficient project delivery.

Separating Hardware From Software

In traditional network devices, hardware and software are tightly coupled and are often provided by the same supplier. Traditional routers, switches, load balancers, and firewalls are optimized to handle traffic as efficiently as possible, but because the hardware and software are linked, operators effectively have to use the supplier's own software to configure and operate the hardware. However, the past couple of years have seen a shift toward open and programmable networks, led by the emergence of software-defined networking (SDN), in which the hardware is decoupled from the software.

On a software-defined network, the software used to administer and control the network equipment no longer runs on the equipment itself but on a separate controller. Routers and switches are dedicated solely to receiving and forwarding network traffic.
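To illustrate the split, here is a minimal sketch under a greatly simplified model: a central controller object makes every forwarding decision and installs it as a rule, while switch objects only look those rules up. The class and method names (`Controller`, `Switch`, `install_rule`) are hypothetical and do not correspond to any real SDN protocol such as OpenFlow.

```python
from dataclasses import dataclass, field
from typing import Dict, Optional


@dataclass
class Switch:
    """Hypothetical data-plane device: forwards traffic, holds no control logic."""
    name: str
    # destination address -> output port, filled in only by the controller
    flow_table: Dict[str, int] = field(default_factory=dict)

    def forward(self, dst: str) -> Optional[int]:
        # Pure table lookup; None means no rule is installed for this destination
        return self.flow_table.get(dst)


class Controller:
    """Hypothetical control plane, running separately from the switches."""

    def __init__(self) -> None:
        self.switches: Dict[str, Switch] = {}

    def register(self, switch: Switch) -> None:
        self.switches[switch.name] = switch

    def install_rule(self, switch_name: str, dst: str, port: int) -> None:
        # All forwarding decisions are made here and pushed down to the device
        self.switches[switch_name].flow_table[dst] = port


controller = Controller()
edge = Switch("edge-1")
controller.register(edge)
controller.install_rule("edge-1", "10.0.0.2", port=3)
print(edge.forward("10.0.0.2"))  # 3
print(edge.forward("10.0.0.9"))  # None
```

Because the switch has no routing logic of its own, changing network behavior means changing only the controller, which is what allows the software side to be developed and tested apart from the delivered hardware.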

SDN allows offices to work in parallel: hardware can be delivered to a site while the software remains at another office. Whereas a company previously might have built a comprehensive testing system for one specific part of a project and then run procedures to test that part, Johnston said companies can now run multiple comprehensive testing systems simultaneously and for longer periods.

“We can now script up a load of tests, spend all the time scripting up the tests, and when the launch is ready we can run the scripts a lot quicker than what [previously] would have been done, in an automated fashion—overnight hopefully if you’re not in the office. You get an automatically generated test script and test documentation, so whatever you find, you know where it is and what needs to be fixed,” Johnston said.
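A minimal sketch of this kind of unattended, scripted testing, assuming a trivial piece of control logic as the system under test (a hypothetical inlet-valve trip at 90% tank level). Each scripted test carries the instrument tag it exercises, so the generated report shows where a failure lies; one case is deliberately written to fail to show this. Tags, trip points, and function names are illustrative only.

```python
from dataclasses import dataclass
from typing import Callable, List, Tuple


def inlet_valve_trips(level_pct: float) -> bool:
    # Hypothetical control logic under test: trip at or above 90 % level
    return level_pct >= 90.0


@dataclass
class ScriptedTest:
    tag: str                   # hypothetical instrument tag, used to locate faults
    description: str
    check: Callable[[], bool]  # returns True when the test passes


def run_suite(tests: List[ScriptedTest]) -> Tuple[List[str], int]:
    """Run every scripted test unattended; return (report lines, failure count)."""
    report: List[str] = []
    failures = 0
    for test in tests:
        try:
            passed = test.check()
        except Exception as exc:  # record a crash as a failure and keep going
            passed = False
            report.append(f"ERROR {test.tag}: {exc!r}")
        if not passed:
            failures += 1
        status = "PASS" if passed else "FAIL"
        report.append(f"{status} {test.tag}: {test.description}")
    return report, failures


tests = [
    ScriptedTest("LT-101", "valve trips at 95 % level", lambda: inlet_valve_trips(95.0)),
    ScriptedTest("LT-101", "valve stays open at 50 % level", lambda: not inlet_valve_trips(50.0)),
    # Deliberate failure: expects a trip below the 90 % trip point
    ScriptedTest("LT-102", "valve trips at 88 % level", lambda: inlet_valve_trips(88.0)),
]

report, failures = run_suite(tests)
print("\n".join(report))
print(f"{failures} failure(s)")
```

The suite can be scripted in advance and left to run overnight; the report names the tag of every failing check, which is the "you know where it is" property Johnston describes.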

Johnston also discussed Shell's "Flawless Project Delivery," a concept born of risk management and edge statistical analysis that aims to improve testing outcomes. The concept rests on three ideas: all projects have flaws, whether in the organization, the personnel, or the assets; most flaws surface during startup; and many flaws are similar to, or repeats of, flaws seen on other projects.

Johnston said most of these flaws, which can range from poor or inoperable systems and equipment to a failure to recognize complex systems, originate either in the basis-of-design phase or in the detail design and procurement phase, well before startup, but go unnoticed until later. He said such flaws are the industry's status quo. In a typical project, a company signs a contract to deliver the project, designs the control system, runs system testing and process simulation as a batch, and hands the system over to the operator, who then builds a high-fidelity process model that effectively becomes the cornerstone of its operation. If flaws remain unnoticed until these final project phases, they can lead to significant delays and cost overruns.

Process simulation adds value to a project, Johnston argued, by allowing companies to use virtualization to test major components (such as Smart I/O and fabrication software) early, effectively creating a "process digital twin." Virtual testing could make it easier for operators to test their systems more rigorously, potentially reducing the number of errors before factory acceptance testing.

The digital twin is a complete, operational virtual representation of a system that combines the digital aspects of how the system is built with real-time aspects of how it is run and maintained. Johnston said it can help operators get more out of each stage of project development by effectively doing everything at once: developing the high-fidelity process model while they test the control system architecture. It can also build familiarity with the system and operator competency, reducing the potential for errors at startup.

“The system is testing effectively 30% to 40% of the building operation. We’re getting all the control systems, all the logic, and you’re putting in a low-fidelity process model, normally in bits, putting it together one part at a time, and running it. You put all that together and you’ve effectively got a high-fidelity model. You can actually start the system up and have it start up the way it’s expected to start up. You can actually start testing out something like virtual reality and testing out field operators in a control room setup so that you have awareness of the asset,” Johnston said.
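The idea of running control logic against a low-fidelity process model can be sketched in a few lines. The example assumes a single tank with a square-root outflow law and an on/off inlet-valve controller with hysteresis between 1.0 m and 1.5 m; every number is an illustrative assumption, not project data. Stepping the model and the logic together shows whether the logic fills the tank and then holds the level in band during startup, a check that would otherwise wait for commissioning.

```python
import math
from typing import List


def simulate_startup(steps: int = 600, dt_s: float = 1.0) -> List[float]:
    """Step hypothetical on/off level control against a low-fidelity tank model."""
    area_m2 = 2.0             # tank cross-section (assumed)
    inflow_m3s = 0.05         # inflow with inlet valve open (assumed)
    k_out = 0.02              # outflow = k_out * sqrt(level) (assumed)
    low_m, high_m = 1.0, 1.5  # controller switching levels (assumed)

    level_m = 0.0             # startup from an empty tank
    valve_open = True
    history: List[float] = []
    for _ in range(steps):
        # Control logic under test: close at high level, reopen at low level
        if level_m >= high_m:
            valve_open = False
        elif level_m <= low_m:
            valve_open = True
        # Low-fidelity process model: explicit Euler mass balance
        inflow = inflow_m3s if valve_open else 0.0
        outflow = k_out * math.sqrt(max(level_m, 0.0))
        level_m += (inflow - outflow) / area_m2 * dt_s
        history.append(level_m)
    return history


levels = simulate_startup()
settled = levels[100:]  # ignore the initial fill
print(f"level band after fill: {min(settled):.2f} to {max(settled):.2f} m")
```

A crude model like this is the "low-fidelity, in bits" starting point; swapping in more detailed unit models over time is what moves it toward the high-fidelity model Johnston describes.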

The Virtual World

In another presentation at ENGenious, Joerg Schubert, technology manager for the oil and gas and petrochemical business unit at ABB, said the digital twin is a critical component of ABB's Intelligent Projects concept, in which each modular component of a project is built and tested individually before final assembly into an operational system. If advanced services such as Internet of Things (IoT) connectivity and analytics algorithms are preconfigured in the digital twin, an operator can enable them as soon as the equipment is installed, with no further engineering required.

Automating data management removes many of the manual steps involved in completing a project. Schubert said Intelligent Projects converts data from many sources into a common engineering format, which simplifies the design process and makes data points and information about each physical object available to any user. To that end, he said, the automation system needs to support the digital twin concept across the full project lifecycle.
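As a minimal sketch of converting data from several sources into a common engineering format, assume two hypothetical instrument lists that name their fields differently and use different pressure units. Each source gets a small adapter into one shared record type, and the merged register rejects conflicting entries for the same tag. The field names, tags, and record layout are invented for illustration and are not ABB's format.

```python
from dataclasses import dataclass
from typing import Any, Dict, List

PSI_TO_BAR = 0.0689476  # 1 psi expressed in bar


@dataclass(frozen=True)
class Instrument:
    """Hypothetical common engineering record shared by all project tools."""
    tag: str
    service: str
    range_max_bar: float


def from_source_a(row: Dict[str, Any]) -> Instrument:
    # Source A (hypothetical): capitalized field names, range in psi
    return Instrument(row["TagNo"].upper(), row["Desc"],
                      round(row["RangeMax_psi"] * PSI_TO_BAR, 2))


def from_source_b(row: Dict[str, Any]) -> Instrument:
    # Source B (hypothetical): lower-case tags, range already in bar
    return Instrument(row["tag"].upper(), row["service"], float(row["max_range_bar"]))


def build_register(records: List[Instrument]) -> Dict[str, Instrument]:
    """Merge normalized records by tag, rejecting conflicting duplicates."""
    register: Dict[str, Instrument] = {}
    for rec in records:
        if rec.tag in register and register[rec.tag] != rec:
            raise ValueError(f"conflicting data for {rec.tag}")
        register[rec.tag] = rec
    return register


source_a = [{"TagNo": "PT-201", "Desc": "Separator pressure", "RangeMax_psi": 1450.0}]
source_b = [{"tag": "pt-202", "service": "Export pressure", "max_range_bar": 80.0}]

register = build_register([from_source_a(r) for r in source_a] +
                          [from_source_b(r) for r in source_b])
for rec in register.values():
    print(rec)
```

Once every source is normalized into the same record, downstream tools read one register instead of reconciling each source by hand.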

“This has to somehow deal with the real physics, and to get there, we have tried to come up with a solution where we’re trying to get all the different aspects that you have on a physical element in the field and model it on the automation side. So the automation side is not just getting a single picture of one aspect that you already have in the field. It already includes all the different aspects from an operations point of view. It exposes that information in a filtered way so that you don’t have information overload,” Schubert said.

Schubert discussed the recent shift toward software marshalling of input/output (I/O) signals, the communication between the control system and the field devices it monitors and controls. In a conventional installation, marshalling is the physical cross-wiring, typically in marshalling cabinets, that routes each field signal to a specific channel on a specific controller's I/O card; with software marshalling, that assignment is made in configuration rather than in wiring. Previously, the topology of a distributed control system (DCS), in which autonomous controllers are distributed under central operator supervisory control, dictated the hardware build and created dependencies between the hardware and individual software applications; fieldbuses and flexible Ethernet-based I/O can decouple those dependencies.
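A minimal sketch of the idea behind software marshalling in a control system, assuming a simplified model in which each field I/O channel is identified by cabinet, slot, and point, and the assignment of that channel to a controller and tag is held in a software table. Moving a signal to another controller then becomes a table edit rather than rewiring. The cabinet, controller, and tag names are hypothetical, and real systems carry far more configuration per channel.

```python
from dataclasses import dataclass
from typing import Dict, Tuple


@dataclass(frozen=True)
class Channel:
    """Physical field I/O point (hypothetical addressing scheme)."""
    cabinet: str
    slot: int
    point: int


class SoftMarshalling:
    """Channel-to-controller assignments held in software, not in cross-wiring."""

    def __init__(self) -> None:
        self._routes: Dict[Channel, Tuple[str, str]] = {}  # channel -> (controller, tag)

    def assign(self, channel: Channel, controller: str, tag: str) -> None:
        # Re-assigning an existing channel simply overwrites its route
        self._routes[channel] = (controller, tag)

    def route(self, channel: Channel, value: float) -> Tuple[str, str, float]:
        # Deliver a field value to whichever controller currently owns the channel
        controller, tag = self._routes[channel]
        return controller, tag, value


marshalling = SoftMarshalling()
ch = Channel("FC-01", slot=3, point=7)
marshalling.assign(ch, "CTRL-A", "PT-201")
print(marshalling.route(ch, 12.5))  # ('CTRL-A', 'PT-201', 12.5)

# Late design change: move the signal to another controller without rewiring
marshalling.assign(ch, "CTRL-B", "PT-201")
print(marshalling.route(ch, 12.5))  # ('CTRL-B', 'PT-201', 12.5)
```

Because the field wiring no longer encodes which controller a signal belongs to, the hardware can be built before the control software's final topology is fixed.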

Despite the advantages of flexible I/O systems, Schubert said they may not be the ideal solution. It may be preferable, he said, for operators to connect a facility's devices directly to the automation system through the fieldbus network while still separating hardware and software, breaking the dependencies while preserving the "richness of information" coming from each device.

“We’re talking about data analytics. We’re talking about big data and all the measurements from an analytics point of view to optimize our automation solutions, and that data has to come from somewhere. If we block certain communication lanes, or build redundant communications, we don’t think that’s likely to go anywhere,” he said.

Simulation and emulation are critical to developing an intelligent project. Schubert said the earlier an operator brings process simulation into the design, the earlier it can identify flaws in that design, and that when hardware and software are separated, it is important to have a mechanism that properly emulates the physical hardware. He said cloud computing helps accomplish these goals.

Schubert said virtualization lets operators execute a project without being tied to a specific location, making standard designs, engineering workflows, and support tools easily accessible to every team on the project. By tying virtual components into the physical hardware of a facility, operators effectively run automation engineering in the cloud: Schubert said components simulated in the cloud can be taken to a physical location for acceptance testing, and the results carried back into the system.

Modular Automation

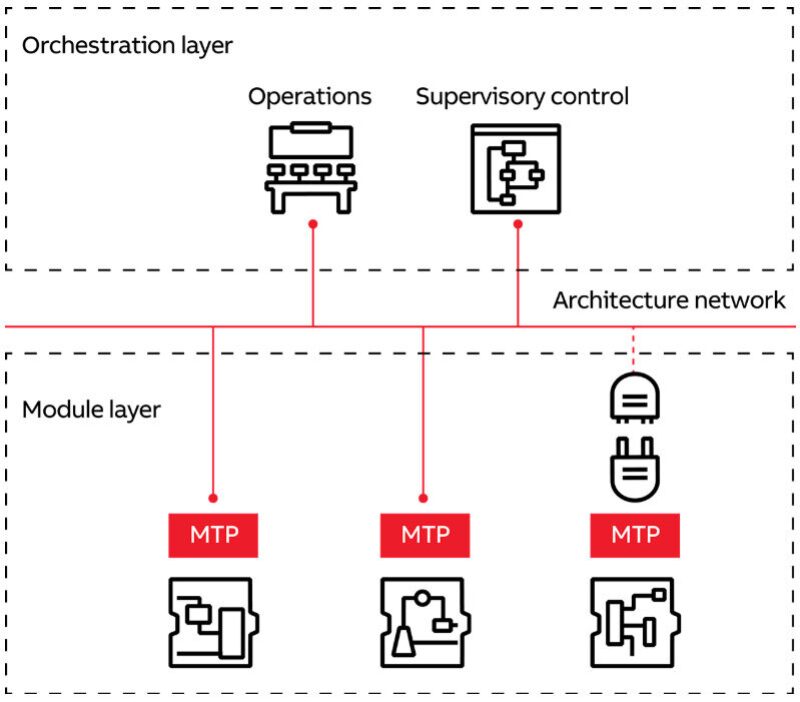

In June, ABB launched what it described as the first modular-enabled process automation system, which combines an orchestration layer and a module layer and is built around module type packages (MTPs). The orchestration layer handles operation and supervisory control of the modules. An open architecture backbone links the orchestration layer to the module layer, with communication via OPC Unified Architecture (OPC UA), a machine-to-machine communication protocol for industrial automation.

An MTP is a methodology that standardizes the interface between the control level and the module. Each package contains the information needed to integrate a module into a modular facility, including communications, human-machine interface, and maintenance information. With MTPs, a DCS could evolve into a process orchestration system that manages the operation of the modular units.
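The consuming side of such a standardized interface can be sketched as follows, assuming a heavily simplified, hypothetical JSON description in place of a real MTP (actual MTPs are AutomationML-based files specified in the VDI/VDE/NAMUR 2658 series). The orchestration layer knows the module only through its description: which services it offers, which parameters they accept, and which inputs and outputs it exposes. The module name, service, parameter, tags, and endpoint are invented, and a real orchestration layer would issue OPC UA calls rather than return a dictionary.

```python
import json
from typing import Any, Dict

# Hypothetical, simplified package description (not the real MTP schema)
DOSING_PACKAGE = """
{
  "module": "Dosing-01",
  "services": {"Dose": {"parameters": ["volume_l"]}},
  "inputs": ["FIC-100.PV"],
  "outputs": ["XV-100.CMD"],
  "endpoint": "opc.tcp://dosing-01:4840"
}
"""


class Orchestrator:
    """Orchestration-layer sketch: knows modules only through their descriptions."""

    REQUIRED_KEYS = {"module", "services", "inputs", "outputs", "endpoint"}

    def __init__(self) -> None:
        self.modules: Dict[str, Dict[str, Any]] = {}

    def register(self, description: str) -> str:
        desc = json.loads(description)
        missing = self.REQUIRED_KEYS - set(desc)
        if missing:
            raise ValueError(f"description is missing {sorted(missing)}")
        self.modules[desc["module"]] = desc
        return desc["module"]

    def start_service(self, module: str, service: str, **params: float) -> Dict[str, Any]:
        spec = self.modules[module]["services"][service]
        unknown = set(params) - set(spec["parameters"])
        if unknown:
            raise ValueError(f"{service} does not accept {sorted(unknown)}")
        # A real system would write these values to the module over OPC UA;
        # here we only return the request the orchestrator would send.
        return {"endpoint": self.modules[module]["endpoint"],
                "service": service, "command": "START", "params": params}


orchestrator = Orchestrator()
name = orchestrator.register(DOSING_PACKAGE)
print(orchestrator.start_service(name, "Dose", volume_l=25.0))
```

Nothing in the orchestrator depends on who built the module; any package whose description satisfies the same contract can be registered and started the same way.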

Schubert said fully modular automation systems are currently more popular among chemical companies, some of which are seeking to assemble entire facilities this way, and that oil and gas companies likely will not embrace them to the same degree. However, he said the industry is moving toward a model in which it builds packages with limited automation that can be integrated into the larger project, and emerging modular automation standards allow for more efficient subsystem automation.

“There has to be a way to get these package units integrated in a smarter way. From a consuming side, on the automation, it doesn’t matter who is delivering it. All you need to know are the certain functions that this package gives you. It has a bunch of inputs and outputs, and it has a description of what it does. It comes in a descriptive form that you can input into the automation solution, but when that package comes, the built-in logic is just functioning and can also interact with the rest of the system,” he said.