The software industry is growing rapidly, and companies are creating and deploying products to help accomplish everyday activities and strategic goals. There is a constant need to evaluate “buy vs. build” to support optimal operational performance. This is crucial for the oil and gas sector; the choice of the right software serves as a key decision point.

In the oil and gas sector, most organizations still rely on ad-hoc or subjective techniques to assess software. Each software option has its own advantages and disadvantages, and the final choice is affected by multiple aspects such as cost, organizational goals, the learning curve, the satisfaction of users, and whether the application meets the requirements of business processes, cultural fit, and technical environment.

Such data is subjective in nature. The way it is presented, and the opinion of an individual, can influence the selection for a particular task, which may not always be the best fit. Stakeholders often have different priorities and requirements that need to be met, and ensuring all critical voices are heard but do not overrun one another is difficult and highly dependent on the people involved.

Few structured analysis frameworks are designed explicitly for the oil and gas industry and the specific requirements of its organizations. Closing this gap is vital because incorrect software choices can lead to significant operational disruptions and cost overruns and can prevent the achievement of strategic goals.

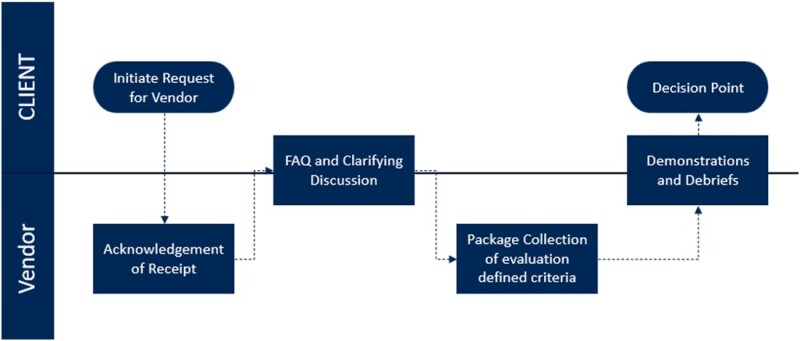

Through a tactical project management approach, we identify the key stakeholders who need to be engaged and form a project committee; objectives, roles, responsibilities, and timelines are then clearly documented and communicated. The process then unfolds across a series of structured phases: defining evaluation criteria; creating and issuing request-for-information (RFI) packages; conducting Q&A sessions; executing evaluations based on standardized demonstrations; holding debrief sessions; and finally, issuing an informed decision based on quantitative and qualitative inputs.

This systematic approach gives organizations the capability to choose software that truly matches their strategic ambitions while reducing the risk of selection bias and implementation failure. The industry currently has no standardized common ground for how to approach software evaluation, and this framework offers a repeatable, data-driven methodology to fill that gap.

Evaluation Criteria Library

The evaluation criteria library is at the core of the framework. It reflects the evolving trends, technologies, and specific business demands within the oil and gas sector. The idea behind building this framework lies in helping decision-makers make data-driven decisions confidently. Thus, it was important to take a multifaceted approach and to ensure that the library covered the breadth and depth of various factors that would have an effect on the decision.

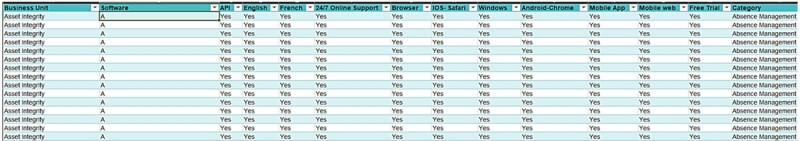

The core of the library is a single, structured, and all-inclusive master database (Fig. 1) built in a hierarchical manner. This list is built following a top-down approach, starting by identifying the most common and essential business units and software categories within the oil and gas industry.

To begin, software aggregation platforms such as Capterra and SourceForge were referenced to gain a comprehensive understanding of leading products, user feedback, and prevailing market trends. This initial market scan provided a valuable foundation for identifying leading solutions in the market across various business units.

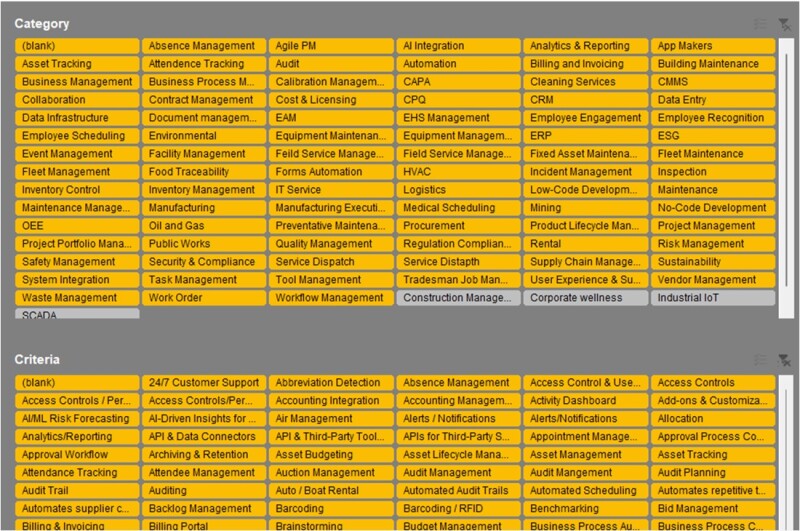

Extensive research from online sources, existing practices, and specific business requirements narrowed down hundreds of potential evaluation criteria (Fig. 2) for these systems, including the type of operating system, regulatory compliance, and application programming interfaces and connectors. These criteria were further categorized based on the specific functionalities they addressed, making it a comprehensive library.

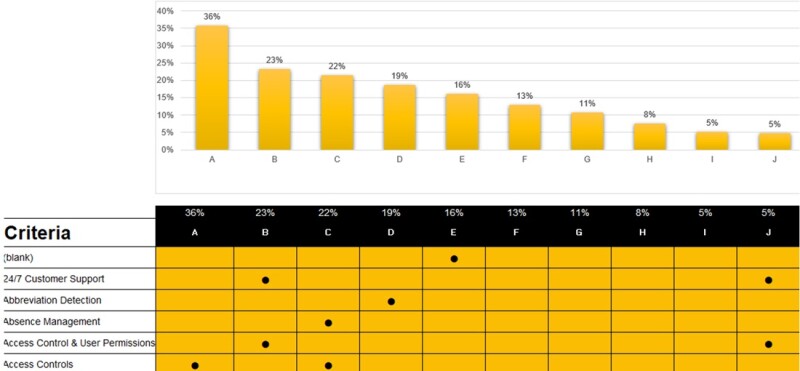

Furthermore, a tagging system (Fig. 2) was implemented to streamline the evaluation process and minimize redundant data entry. This system allowed users to quickly identify and filter the criteria most relevant to their specific needs, providing a consistent and efficient means of comparing software solutions. Because the tags corresponded to business function considerations, the system ensured that each software option was evaluated on the same factors, all directly relevant to the business requirements. This approach grounded the final comparison in the practical realities of business goals (Fig. 3).
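The tag-based filtering described above can be illustrated with a minimal sketch. Everything in it is an assumption made for illustration: the `Criterion` class, the `filter_by_tags` helper, the category names, and the tag values are hypothetical and are not taken from the actual library.

```python
from dataclasses import dataclass, field


@dataclass(frozen=True)
class Criterion:
    """One evaluation criterion (hypothetical structure, not the real library schema)."""
    name: str
    category: str
    tags: frozenset = field(default_factory=frozenset)


# Illustrative entries only; the criterion names echo examples from the text,
# while the categories and tags are invented for this sketch.
LIBRARY = [
    Criterion("Operating system support", "Technical", frozenset({"it", "field-ops"})),
    Criterion("Regulatory compliance", "Compliance", frozenset({"hse", "finance"})),
    Criterion("APIs and connectors", "Integration", frozenset({"it"})),
]


def filter_by_tags(library, wanted):
    """Return the criteria that carry at least one wanted tag, in library order."""
    wanted = set(wanted)
    return [c for c in library if c.tags & wanted]


if __name__ == "__main__":
    for criterion in filter_by_tags(LIBRARY, {"it"}):
        print(criterion.category, "-", criterion.name)
```

Keeping tags as a set on each criterion lets one criterion serve several business functions without being entered more than once, which is the redundancy the tagging system is meant to avoid.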

To validate this database, an agile approach was implemented. This iterative process allowed continual refinement and validation of the data based on the input from subject-matter experts and emerging industry insights. This helped in maintaining the reliability and accuracy of the library. The insights from subject-matter experts were helpful in narrowing down the criteria that aligned with business objectives. The development process of the evaluation criteria library specifically accomplished the following:

- Focused on the needs of each business unit to identify software priorities

- Ignored sponsored content and relied on feedback- and rating-based content

- Organized evaluation criteria into structured categories, prioritizing critical components relevant to the oil and gas industry while considering less urgent but context-specific factors

Surveys, forums, and interviews were conducted to mitigate biases and understand the business’s key requirements. This not only facilitated the selection of key criteria for each solution under evaluation but also enabled decision-makers to confidently prioritize the success criteria and clearly understand the functionalities the business wanted in the new system.

Methodology

Fig. 4 shows the different steps organizations take when selecting software. There are two main parts of this process:

- Pre-Demo: Discovery and Strategic Foundations

  - Requirements gathering and discovery

  - RFI development based on discovered requirements

  - Evaluation criteria design covering functional and technical aspects

  - Development of demo scenarios for structured solution assessment

- Demo and Evaluation: Data Collection for Decision Enablement

  - Systematic proposal review by decision-makers

  - Demo sessions with standardized scenarios

  - Quantitative scoring and normalization

  - Analytics-based final evaluation

RFI responses are evaluated according to the established benchmarks. A checklist outlining the technical requirements and demo scenarios is shared with the shortlisted participants, and it helps quantify accuracy and compliance so that vendors that do not meet the essential requirements can be ruled out. The development and distribution of standardized documentation to all participants is a critical aspect of this methodology. This standardization serves multiple purposes: it ensures transparency in the evaluation process, allows software providers to demonstrate their capabilities against the consistent specifications in the RFI, and establishes clear expectations from the outset.
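As a rough sketch of how a checklist can rule out vendors that miss essential requirements, the snippet below compares each vendor's reported coverage against a set of must-have items. The `screen_vendors` function, the requirement IDs, and the vendor names are hypothetical placeholders; the source does not describe how its checklist is encoded.

```python
def screen_vendors(responses, essential):
    """Split vendors into those that meet every essential requirement and those that do not.

    responses: dict mapping vendor name -> set of requirement IDs the vendor reports meeting.
    essential: set of requirement IDs every vendor must meet.
    Returns (passed, failed), where failed maps vendor -> sorted list of missing IDs.
    """
    passed, failed = [], {}
    for vendor, met in responses.items():
        missing = essential - met
        if missing:
            failed[vendor] = sorted(missing)
        else:
            passed.append(vendor)
    return passed, failed


if __name__ == "__main__":
    # Hypothetical checklist items and RFI responses.
    essential = {"REQ-01", "REQ-02"}
    responses = {
        "Vendor A": {"REQ-01", "REQ-02", "REQ-07"},
        "Vendor B": {"REQ-01"},
    }
    passed, failed = screen_vendors(responses, essential)
    print("Proceed to demo:", passed)
    print("Ruled out:", failed)
```

Recording which items are missing, rather than only a pass or fail flag, keeps the screening result explainable to the vendor and to internal stakeholders.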

This emphasis on transparent and standardized communication is crucial for several reasons. First, it significantly reduces the need for back-and-forth clarifications, thereby shortening the overall selection timeline. Second, it ensures all participants receive identical information and opportunities to present their solutions, maintaining fairness in the evaluation process. Third, it helps build and maintain positive relationships with the software providers, which is vital for future partnerships and negotiations. Poor reputation management during the selection process can have long-lasting implications for an organization’s ability to secure favorable terms or maintain productive relationships in the future. The communication strategy used throughout the project was designed to distribute information clearly and consistently, minimizing the need for separate, one-off responses to individual participants.

The evaluation process culminates in a systematic scoring step in which the scores assigned by each decision-maker to every software provider are averaged and normalized to derive a composite score that mitigates potential biases. In the concluding stage, analytics derived from the scoring assist key stakeholders in assessing the best solution across various categories while highlighting each software option’s strengths and weaknesses.
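The averaging and normalization step might look like the following sketch. The text does not specify the normalization method or the scoring scale, so dividing the mean by an assumed scale maximum of 5 is purely an illustrative choice, and the `composite_scores` function and evaluator names are hypothetical.

```python
from statistics import mean

SCALE_MAX = 5  # assumed top of the scoring scale; the source does not state it


def composite_scores(scores_by_evaluator, scale_max=SCALE_MAX):
    """Average each vendor's scores across evaluators, then scale the mean to 0..1.

    scores_by_evaluator: dict mapping evaluator -> {vendor: score}.
    A vendor skipped by an evaluator is averaged over the scores it did receive.
    """
    vendors = {v for scores in scores_by_evaluator.values() for v in scores}
    result = {}
    for vendor in sorted(vendors):
        given = [s[vendor] for s in scores_by_evaluator.values() if vendor in s]
        result[vendor] = mean(given) / scale_max
    return result


if __name__ == "__main__":
    # Hypothetical scores from two decision-makers.
    scores = {
        "evaluator_1": {"Vendor A": 4, "Vendor B": 2},
        "evaluator_2": {"Vendor A": 5, "Vendor B": 3},
    }
    for vendor, score in composite_scores(scores).items():
        print(f"{vendor}: {score:.2f}")
```

Averaging across decision-makers dampens the effect of any single unusually high or low score, which is the bias-mitigation role the composite score plays in the framework.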

This structured approach facilitates objective comparison and strengthens the organization’s reputation for professional and equitable treatment of software providers. Throughout the entire process, the framework maintains a delicate balance between rigorous evaluation and fair vendor treatment, recognizing that today’s nonselected software provider might be tomorrow’s crucial partner. This consideration shapes every aspect of the process, from initial communication to final selection, ensuring that all participants, regardless of the outcome, have positive experiences with the organization’s selection process.

Weighing Criteria

The success of software evaluation hinges on a clear, quantifiable scoring system. Our framework uses a straightforward scale for scoring software, where higher scores reflect stronger alignment with the specified criteria. Each criterion receives a weight coefficient based on its importance to the organization’s goals.

The final weighted score for each solution is calculated using the following formula:

Final Score = Σ (Criterion Score × Weight Coefficient)

This scoring methodology supports data-driven decisions based on quantifiable metrics while maintaining transparency in the selection process. The use of weighted criteria ensures that the final selection aligns with organizational priorities while maintaining the rigor of a quantitative evaluation process.
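The weighted-sum formula above can be worked through in a short sketch. The criterion names, the weights, and the scores are invented for illustration, and the choice of weights that sum to 1 is an assumption rather than something the framework prescribes.

```python
def final_score(criterion_scores, weights):
    """Compute Final Score = sum(criterion score * weight coefficient).

    Both arguments are dicts keyed by criterion name and must cover the same criteria.
    """
    mismatched = set(criterion_scores) ^ set(weights)
    if mismatched:
        raise ValueError(f"criteria and weights do not match: {sorted(mismatched)}")
    return sum(criterion_scores[c] * weights[c] for c in criterion_scores)


if __name__ == "__main__":
    # Hypothetical weights (assumed to sum to 1) and per-criterion scores.
    weights = {"Integration": 0.5, "Compliance": 0.3, "Usability": 0.2}
    vendor_scores = {
        "Vendor A": {"Integration": 4, "Compliance": 5, "Usability": 3},
        "Vendor B": {"Integration": 5, "Compliance": 3, "Usability": 4},
    }
    for vendor, scores in vendor_scores.items():
        print(f"{vendor}: {final_score(scores, weights):.2f}")
```

Rejecting mismatched criteria up front guards against a silently missing weight, which would otherwise understate a solution's score without any visible error.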

Practical Applications

Secure Waste Infrastructure has effectively used this framework to assess various greenhouse-gas data management software and incident management software. In both cases, the evaluation began with shortlisting criteria from the library. Key stakeholders from the different business units convened to outline the requirements and narrow them to just over 100 essential functions spanning nine distinct categories in each case, forming the foundational prerequisites for each type of software. RFI packages, including the checklist, technical questionnaire, and demo scenarios, were then sent to the shortlisted solution providers, who were invited to conduct software demonstrations. In the subsequent debrief meetings, the evaluation scores were tallied, leading to the identification of the best-fit solution in each case.

This structured, quantitative assessment process has helped align the priorities of the business, field operations, and information technology organizations at Secure. The framework’s objective and data-driven approach presented an open and unbiased perspective of available solutions. Surveys, weighted factors, and numerical scoring enabled a complete analysis of vendor options, making sure that all the stakeholders’ needs and concerns were considered.

The systematic process had a considerable influence on Secure’s internal stakeholders’ decision-making processes, thereby establishing trust in the outcome. This framework reduced bias, promoted transparency, and empowered the stakeholders to make informed choices based on data rather than personal opinions or inherent tendencies. The management and team leaders expressed increased confidence in the decision-making, thereby reducing the time to reach the outcome.

The major advantages of this approach are objectivity, cross-departmental collaboration, and transparency. With a data-oriented framework, organizations can make decisions consistent with short-term and long-term objectives, thereby enabling higher trust among stakeholders. Moreover, the framework’s adaptability means that it can be fitted to different decision-making scenarios, such as product and service analysis beyond the boundaries of information technology (for example, consulting services or health and safety equipment), making it applicable across a multitude of projects.

Conclusion

This paper presented a methodical approach for selecting software tailored to the unique requirements of the oil and gas sector. This approach equips organizations with a dependable strategy for choosing software by prioritizing data-informed decision-making and unbiased assessment methods. Subsequent investigations may explore how to customize the framework for distinct subsectors within the industry and other industrial applications as well. The application of this framework can result in:

- Mitigated risks associated with software selection

- Better alignment with organizational goals

- Increased returns on technology investments

- Heightened user satisfaction with the chosen solutions

Any mention of specific software systems in this paper is purely for illustrative purposes and does not constitute endorsement or promotion of the entities mentioned.