This essay focuses mainly on reservoir and, to some degree, production and completion engineering. Hydrocarbon production from unconventional plays has characteristics that differentiate them from conventional plays. These characteristics are (a) contribution of completion design and implementation and (b) frac hits. These characteristics in shale wells are the results of very low permeability and highly naturally fractured rock fabric.

While the general approach of using artificial intelligence (AI) and machine learning (ML) in reservoir modeling is similar, the details associated with the conventional and unconventional plays determines the pathway that must be used in the application of AI and ML. For example, in conventional plays, the effect of completion design and implementation of a new well is not a major factor in the production of existing wells, while, in unconventional plays, the effect of completion design and the implementation of child wells have significant effect on the production from parent wells. This major issue requires a new approach to history matching of production from shale wells and must be addressed by the type of reservoir modeling approaches that are used by AI and ML.

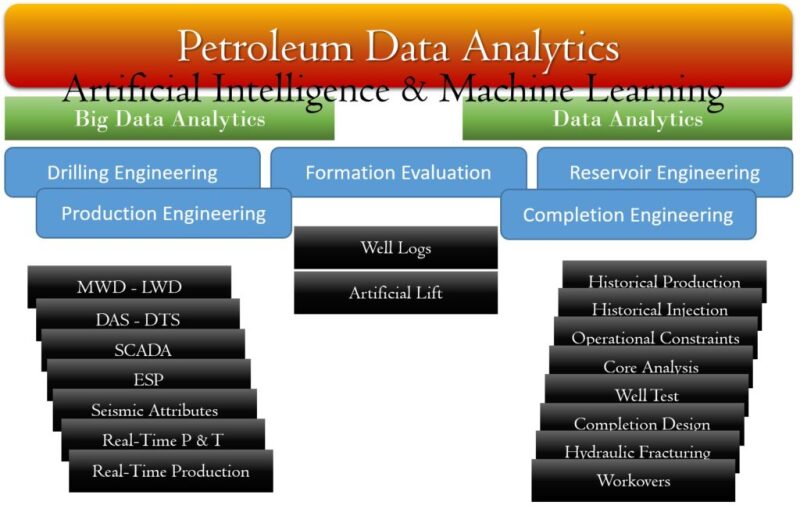

Another item that plays an important role in the success of application of AI and ML in reservoir, completion, and production modeling of conventional vs. unconventional plays is the type algorithms that contribute to big data vs. algorithms that do not require big data. This, to a certain degree, determines the contribution of unsupervised vs. supervise training for the model development. While reservoir modeling of conventional plays does not include big data, the potential of using big data in unconventional plays is much higher. Fig. 1 provides a summary of the use of big data analytics and data analytics in the context of petroleum data analytics.

is sensitive to the type of problems being addressed

based on the amount of data that is used

for analysis and predictive modeling purposes.

Using AI and ML in Modeling Conventional Reservoirs

AI and ML contribute to reservoir-engineering-related problems in conventional plays by allowing for the building of purely data-driven reservoir models for existing fields using multiscale field measurements. The correct versions of the AI- and ML-based reservoir models integrate all field measurements such as well trajectories; details of completions in multiple layers; well logs; core data; seismic attributes; pressure/volume/temperature; workovers; well stimulations; operational constraints such as choke setting, wellhead pressure, wellhead temperature, and artificial lift; as well as detailed injection and production history to build a comprehensive reservoir simulation model. The correct versions of the AI- and ML-based reservoir models are not hybrid models, rather purely data-driven. The history matching of such models is fully automated, and the history-matched model is validated in time and space using completely blind dynamic data from the field.

These models then are used for production forecasting, sensitivity analysis, uncertainty quantification, and production and recovery optimization through choke setting or infill location optimization and injection optimization, as well as many other field development planning processes through the practicality of millions of simulation runs in a short time period. Petroleum-data-analytics experts are the ones who are capable of developing the correct version of the AI- ML-based reservoir models that accomplish the objectives mentioned previously. (A future column will explain detailed characteristics of petroleum-data-analytics expertise.) These AI-based reservoir simulators are capable of modeling the physics of fluid flow through porous media through field measurements in order to avoid mathematical formulation of the physics (lately referred to as physics-based data-driven, physics-based AI, augmented AI, data-physics, or hybrid models, for example) that are the basis for the traditional approaches.

In conventional plays, hydraulic fracturing is not a common practice that would take place in every single well as part of the completion strategy. Furthermore, production from conventional plays does not require as many wells in a given field as it is required in the unconventional plays because of the tightness of the formation (low values of permeability). Therefore, in general, communication between wells in conventional plays has to do with the reservoir conductivity rather than results of completion design and implementation. In unconventional plays (shale), the short distances between laterals and the extensive hydraulic fracturing form the foundations of frac hits.

Using AI and ML in Modeling Unconventional Reservoirs

Conducting some research on the current application of AI and ML for production prediction or completion optimization for shale wells, one can conclude that the application of AI and ML in unconventional reservoirs can be divided into two categories. The first category includes those that concentrate only on production data, while the second category includes those that try to incorporate some (if not all) of the completion data, reservoir characteristics, and operational constraints.

As for the first approach (category), from a scientific point of view, not much can be said about those that only concentrate on production data. The reality is that, while they claim that they are using AI and ML as part of their technology at best are using traditional statistical algorithms. Their approach has absolutely nothing to do with the real essence of AI&ML. (I will be discussing the main difference between AI and ML with traditional statistical algorithms in another column.) These technologies are nothing but different examples of decline-curve analysis that have been around in our industry since early 1960s. What is funny is that some of them even believe that decline-curve analysis is a physics-based approach and others call decline-curve analysis “data analytics.” That should tell you volumes.

As for the second approach of applications of AI and ML for shale wells, productivity indices such as 30, 90, or 180 days of cumulative hydrocarbon production are used as output. While using such productivity indices provides an opportunity to model snap shots of production from shale wells realistically, they do not stand a chance to address well spacing and stacking, completion design, and operational constraints if and when frac hits start affecting production. Such productivity indices (even if they are used in a sequential manner to mimic a production profile) have proven to be insufficient for the prediction, management, and mitigation of frac hits.

More than 28 years of research and development in the application of AI and ML in the upstream oil and gas industry, about a decade of which has been focused on its application to unconventional plays such as shale wells, has resulted in the following conclusion: Prediction, management, and mitigation of frac hits require modeling the dynamics of individual wells in the context of the dynamics of the reservoir (communication between wells), also known as reservoir simulation and modeling. However, the problem is that, when it comes to shale, such dynamic simulation models cannot be developed using traditional numerical reservoir-simulation technology that is currently being used in our industry. One may ask why. The following section attempts to address the reasons.

Modeling the Dynamics of Shale Wells

The foundation of the traditional numerical reservoir-simulation technology is our current understanding of the physics of the fluid flow in the porous media. When this technology is applied to shale plays, given the state of our current understanding of the complex physics of fluid flow, completion, and production in shale wells, instead of being a scientifically accurate modeling technology, the traditional numerical reservoir simulation turns into a tool that is used to accommodate the assumptions, interpretations, biases, and preconceived notions of the practitioners.

The degree of simplifications and the vast number of assumptions that are included in the traditional numerical reservoir-simulation technology when it is applied to shale plays turns this originally scientific tool into a justification tool for the decisions that have already been made (before the modeling) by the practitioner. The major problem with the use of traditional numerical reservoir simulation in shale plays is that it can be used to justify completely contradictive decisions. In other words, no matter what is the nature of the decisions that are made and no matter how opposite of one another they may be (e.g., increasing vs. decreasing the stage length, small vs. large number of clusters per stage, smaller vs. larger amounts of proppant, fine vs. coarse proppant, high vs. low injection rates and pressure), the traditional numerical reservoir simulator can be designed in such a way to justify them all. This is because of the massive amount of simplifying assumptions that are involved in this modeling process that has nothing to do with the realities faced by the operators in the field.

The ideas presented here were first mentioned (using a different approach) in 2013 (1, 2). Besides all the well-known assumptions and simplifications that are included in the traditional numerical reservoir-simulation technology when this technology is applied to conventional plays, its application to shale plays brings about a new set of categories of gigantic assumptions and simplifications that completely compromise any and all applications of this technology to shale plays. Some of the assumptions and simplifications associated with the traditional numerical reservoir-simulation application in shale plays can be divided into the following three categories:

- Reservoir

- Naturally fractured reservoir

- Reservoir characteristics

- Petrophysical characteristics

- Rock/Fluid Characteristics

- Geomechanical characteristics

- Geophysical characteristics

- Completion

- Hydraulic-fracturing characteristics (e.g., fracture half length, fracture height, fracture width, fracture conductivity)

- Geometric distribution of the induced fractures

- Effect of reservoir characteristics, including the natural fracture distribution, on the geometric distribution of the induced fractures

- Effect of number of clusters, stage length, and pumping characteristics (injection pressure and injection rate) on the induced fractures

- Production

- Flowback characteristics

- Effect of the operational constraints (choke setting) on production behavior

- Which clusters or stages are contributing to hydrocarbon production

This list includes some of the assumptions and simplifications that are made in the application of traditional numerical reservoir-simulation technology in shale plays. Details of these assumptions and simplifications will be covered in a future column. Anyone who has used traditional numerical reservoir simulation to model fluid flow, completion, and production in shale plays can provide many details about these and many other assumptions that are involved in this modeling technology.

Possible Solution

The realistic solution to predict, manage, and mitigate frac hits is building a dynamic shale analytics model. Dynamic shale analytics must combine pattern-recognition capabilities of AI and ML with the domain knowledge related to reservoir, completion, and production engineering in order to address, manage, and mitigate frac hits. It must be noted that domain knowledge does not mean using mathematical formulations to model physics. It means making sure that the AI and ML algorithms that are incorporated in the dynamic reservoir-modeling process can be validated for honoring the known physical patterns without exact mathematical formulations.