The word “broadband” is used to sell much of what is new in offshore seismic. It can mean different things depending on who is speaking, but most often it is applied to technologies designed to capture scarce signals at the lowest frequencies.

Gathering more low-frequency data is the “holy grail” in broadband, said Craig Beasley, chief geophysicist at WesternGeco, which is part of Schlumberger.

Low-frequency signals are valuable for determining rock properties and imaging deep formations, and seemingly small gains can be big. “Moving down from 3 to 1.5 (Hz) does not sound like a lot. But in octaves, it is a whole other octave,” he said.
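Beasley's arithmetic can be checked directly. An octave is a doubling of frequency, so the number of octaves between two frequencies is the base-2 logarithm of their ratio. The short Python sketch below uses a hypothetical helper, `octaves_between`, and an 80 Hz upper band limit that is assumed only for comparison.

```python
import math


def octaves_between(f_low_hz: float, f_high_hz: float) -> float:
    """Number of octaves between two positive frequencies.

    One octave is a doubling of frequency, so the count is log2(f_high / f_low).
    """
    if f_low_hz <= 0 or f_high_hz <= 0:
        raise ValueError("frequencies must be positive")
    return math.log2(f_high_hz / f_low_hz)


# Extending the low end from 3 Hz down to 1.5 Hz adds exactly one octave.
print(octaves_between(1.5, 3.0))  # 1.0

# Comparison against an assumed 80 Hz upper limit (illustrative only).
print(round(octaves_between(3.0, 80.0), 2))  # 4.74
print(round(octaves_between(1.5, 80.0), 2))  # 5.74
```

Because octaves are logarithmic, gaining the same single octave at the top of that assumed band would require going from 80 Hz to 160 Hz, which is why a 1.5 Hz extension at the bottom counts for as much.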

The appetite for these scarce signals has driven innovation in many forms, reflecting how marketing, science, and patent law combine to differentiate products in this competitive sector.

The methods used to maximize the low-frequency signal include varying the depth of the streamers; towing streamers on a slant, which varies receiver depth along each cable; and adding different types of sensors to the receivers. All rely on processing to extract as much usable signal as possible, and some rely on processing alone.

The range of options exists because the goals, budgets, and evaluations of what works vary from job to job and operator to operator.

A primary target for broadband is known as the ghost notch. The notch refers to signal lost, including at the lowest frequencies, as a result of destructive interference caused by reflected sound waves known as ghosts. These are waves that have traveled up from the subsurface, bounced off the sea surface, and traveled back down to the receiver.
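Where the notches fall can be worked out for the simplest case. The sketch below assumes a flat sea surface that reflects with coefficient −1, a nominal water velocity of 1,500 m/s, and a receiver at depth d. The ghost then arrives inverted after an extra delay τ = 2d cos θ / c, and the combined response |1 − e^(−i2πfτ)| = 2|sin(πfτ)| vanishes wherever fτ is a whole number. The helper names and tow depths are illustrative assumptions, not any contractor's processing.

```python
import math

WATER_VELOCITY_M_S = 1500.0  # assumed nominal speed of sound in seawater


def ghost_amplitude(freq_hz, depth_m, c=WATER_VELOCITY_M_S, angle_deg=0.0):
    """Amplitude of the receiver-ghost response |1 - exp(-i*2*pi*f*tau)|.

    Assumes a flat sea surface with reflection coefficient -1, so the ghost
    arrives inverted after an extra delay tau = 2 * depth * cos(angle) / c.
    """
    tau = 2.0 * depth_m * math.cos(math.radians(angle_deg)) / c
    return 2.0 * abs(math.sin(math.pi * freq_hz * tau))


def notch_frequencies(depth_m, f_max_hz, c=WATER_VELOCITY_M_S):
    """Notch frequencies n * c / (2 * depth), n = 0, 1, 2, ..., up to f_max.

    Vertical incidence only; n = 0 is the notch at 0 Hz.
    """
    spacing = c / (2.0 * depth_m)
    n_max = int(f_max_hz // spacing)
    return [n * spacing for n in range(n_max + 1)]


# Compare three assumed tow depths.
for depth in (8.0, 15.0, 25.0):
    print(depth, "m notches (Hz):", notch_frequencies(depth, 150.0))
    print("   ghost response at 3 Hz:", round(ghost_amplitude(3.0, depth), 3))
```

Under these assumptions every depth has a notch at 0 Hz, which is why the lowest frequencies are weak. A deeper tow raises the 3 Hz response (from about 0.20 at 8 m to about 0.62 at 25 m) but pulls additional notches into the band. Receivers at different depths notch at different frequencies, which is the reasoning behind varying streamer depth or towing streamers on a slant.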

Notches can be avoided if the surface reflections can be identified and removed during data processing. All the methods are designed to distinguish the up-going waves from down-going waves and then eliminate the ghosts.

“Directional awareness is used to differentiate what is coming up from the formation rather than reflected down from the water/air boundary,” said Malcolm Lansley, a retired vice president of geophysics at Sercel, a seismic equipment maker. “If you can separate up-going and down-going wave field, you can attenuate surface ghosts.”
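The separation Lansley describes can be illustrated with the textbook dual-sensor case. A hydrophone records pressure P and a vertical velocity sensor records V_z; at vertical incidence the two respond with the same sign to down-going waves and with opposite signs to up-going ones, so adding and subtracting them splits the wave field. The Python sketch below assumes plane waves at vertical incidence, z positive downward, ideal calibrated sensors, and nominal seawater density and velocity. `separate_wavefield` is a hypothetical helper, not a vendor algorithm.

```python
# Nominal seawater properties (assumed values, not from the article).
RHO_WATER = 1025.0  # density, kg/m^3
C_WATER = 1500.0    # speed of sound, m/s


def separate_wavefield(pressure, vz, rho=RHO_WATER, c=C_WATER):
    """Split hydrophone and vertical-velocity samples into up- and down-going pressure.

    Assumes plane waves at vertical incidence with z positive downward:
    an up-going wave has vz = -p / (rho * c), a down-going wave vz = +p / (rho * c).
    """
    z = rho * c  # acoustic impedance of water
    up = [(p - z * v) / 2.0 for p, v in zip(pressure, vz)]
    down = [(p + z * v) / 2.0 for p, v in zip(pressure, vz)]
    return up, down


# Synthetic trace: an up-going arrival plus its inverted surface ghost,
# delayed by one sample so the two overlap and interfere.
up_true = [0.0, 1.0, 0.5, 0.0, 0.0, 0.0]
down_true = [0.0, 0.0, -1.0, -0.5, 0.0, 0.0]
z_water = RHO_WATER * C_WATER

# The hydrophone sums both waves; the velocity sensor sees them with opposite signs.
pressure = [u + d for u, d in zip(up_true, down_true)]
vz = [(d - u) / z_water for u, d in zip(up_true, down_true)]

up, down = separate_wavefield(pressure, vz)
print("recorded pressure:  ", pressure)
print("recovered up-going: ", [round(x, 6) for x in up])
```

Real data add complications this sketch ignores, including oblique arrivals, which call for an angle-dependent scaling of V_z, and noise on the motion sensor that must be handled before the two records are combined.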

The latest and most sophisticated entries in the broadband battle are streamers equipped with multiple sensors that track the direction of incoming sound waves. The added measurements create new classes of data, giving mathematicians more opportunities to extract information from a survey.

Multisensor seismic streamers appear on a short list, compiled by Christopher Liner, president of the Society of Exploration Geophysicists, of technologies that promise significant advances in seismic data quality. He calls the approach vector acoustics, a nod to the role of US Navy research in the idea's early development. He sees it as one of the biggest opportunities for improved seismic imaging because “it gives you fundamentally new leverage to build images.”

Data Choices

Multisensor systems are an emerging technology able to capture new classes of data, some of which may prove useful. Andrew Long, chief scientist for geoscience and engineering at Petroleum Geo-Services, has developed a method that makes use of a class of data normally eliminated as noise: sea-surface reflections known as surface multiples. The method uses them to better image relatively shallow features.

“When you start moving in the direction of imaging and characterizing the earth with surface multiples, facilitated by wave field separation, then things get interesting,” Long said.

Apache Corp. is considering the method to evaluate the Aviat gas field in the North Sea, which lies 700 m below the seafloor and cannot be imaged well using conventional methods, said David Monk, worldwide director of geophysics for Apache.

Broadband is a major battlefield in a brutally competitive arena. When comparing the options for eliminating ghosts, John Etgen, a distinguished advisor in seismic imaging at BP, chooses his words as carefully as a father asked to name his favorite child.

“What I will say is there are strengths and weaknesses to each of their approaches. And when you go with a simpler system—fewer sensors—you can typically operate more easily and straightforwardly because you are not messing with fussy equipment as much,” he said.

“But the problem is you may have a more difficult fundamental problem to solve. With the complicated equipment, some of the fundamental aspects are easier, but it is much more complicated and expensive equipment,” Etgen said.

Apache has also tried many broadband offerings. “There must be at least a dozen,” Monk said, adding, “Some work well and others do not.”

Extreme Streamers

WesternGeco has taken this idea the furthest. Its IsoMetrix streamers combine closely spaced lines of the usual hydrophones with accelerometers aimed horizontally and vertically. One other streamer maker, Sercel, has followed Schlumberger’s lead and added accelerometers.

Petroleum Geo-Services was the first to create a multisensor streamer when it added geophones, which measure vertical particle velocity. Sercel and WesternGeco use accelerometers, which measure particle acceleration (the rate of change of particle velocity) along vertical and horizontal axes, but the two systems are engineered differently, Lansley said.

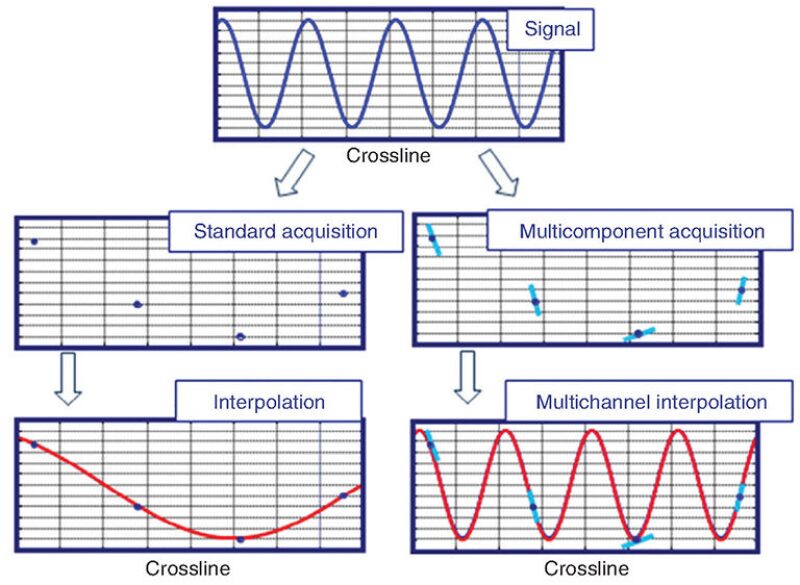

Accelerometers aimed horizontally, across the spread, measure how the seismic wave field changes in the gaps between streamers. Conventional systems have to predict (interpolate) those changes from the streamer recordings alone. The horizontal accelerometers supply additional data for constructing an accurate picture of the wave field between the streamers.
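One way to see why a horizontal measurement helps is through the linearized Euler equation, ρa = −∇p, which ties crossline particle acceleration to the crossline pressure gradient. Knowing both the pressure and its gradient at each streamer allows a cubic Hermite interpolation across the gap instead of a pressure-only straight line. The Python sketch below is a generic illustration under assumed conditions (a single crossline pressure pattern with a 300 m wavelength, streamers 75 m apart, nominal seawater density); it is not WesternGeco's reconstruction method, and all names are hypothetical.

```python
import math

RHO_WATER = 1025.0  # kg/m^3, assumed nominal seawater density


def gradient_from_acceleration(a_y, rho=RHO_WATER):
    """Crossline pressure gradient from crossline particle acceleration.

    Linearized Euler equation: rho * a = -grad(p), so dp/dy = -rho * a_y.
    """
    return -rho * a_y


def interp_linear(y, y0, p0, y1, p1):
    """Pressure-only (straight-line) interpolation between two streamers."""
    t = (y - y0) / (y1 - y0)
    return (1 - t) * p0 + t * p1


def interp_hermite(y, y0, p0, g0, y1, p1, g1):
    """Cubic Hermite interpolation using pressure and its crossline gradient."""
    h = y1 - y0
    t = (y - y0) / h
    h00 = 2 * t**3 - 3 * t**2 + 1
    h10 = t**3 - 2 * t**2 + t
    h01 = -2 * t**3 + 3 * t**2
    h11 = t**3 - t**2
    return h00 * p0 + h10 * h * g0 + h01 * p1 + h11 * h * g1


# Assumed synthetic snapshot: crossline pressure pattern cos(2*pi*y / 300 m).
wavelength = 300.0
k = 2 * math.pi / wavelength


def p_true(y):
    return math.cos(k * y)


def a_true(y):
    # Crossline acceleration implied by Euler's equation: -(1/rho) * dp/dy.
    return k * math.sin(k * y) / RHO_WATER


y0, y1, y_mid = 0.0, 75.0, 37.5  # streamers 75 m apart, point halfway between
lin = interp_linear(y_mid, y0, p_true(y0), y1, p_true(y1))
her = interp_hermite(
    y_mid,
    y0, p_true(y0), gradient_from_acceleration(a_true(y0)),
    y1, p_true(y1), gradient_from_acceleration(a_true(y1)),
)
print(f"true {p_true(y_mid):.3f}  linear {lin:.3f}  hermite {her:.3f}")
# true 0.707  linear 0.500  hermite 0.696
```

In this example the pressure-only estimate at the midpoint is off by about 0.21, while the gradient-aided estimate is off by about 0.01.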

Apache is planning a second test of WesternGeco’s IsoMetrix system to see if “measuring change in particle acceleration horizontally can reconstruct the energy from the subsurface in a much better way,” Monk said.

Schlumberger has said that the quality of data gathered with IsoMetrix streamers spaced 75 m apart equals what conventional streamers spaced 25 m apart, or closer, could deliver. Delivering on that promise requires sophisticated filtering to remove unwanted sounds, such as vibration and ambient noise, that are “several magnitudes larger than the small (seismic) signal,” he said.

Conquering this challenge is one of the reasons that development of multisensor technology was the largest single engineering project ever undertaken by Schlumberger, said Beasley.

Advanced seismic surveys are most needed in producing fields where there is a lot of background noise.

Apache will be testing the IsoMetrix system on an upcoming survey in the North Sea. In a previous test, noise was a problem, Monk said. He has since seen the results of more recent work, and “we have seen some good data,” which supports what he has heard from WesternGeco people: that “they have figured that out.”