Real-time analysis of microseismic events using data gathered during hydraulic fracturing can give engineers critical feedback on whether a particular fracturing job has achieved its goal of increasing porosity and permeability and boosting stimulated reservoir volume (SRV).

Currently, there is no perfect way to determine clearly whether a fracturing operation has had the intended effect. Engineers collect data, but the methods used to gather, manually sort, and analyze it provide an inconclusive picture of what is really happening underground.

Daniel Stephen Wamriew, a PhD candidate at the Skolkovo Institute of Science and Technology (Skoltech) in Moscow, said he believes this can change. Advances in artificial intelligence and machine learning, he said, can improve the accuracy of microseismic event locations while also yielding stable source mechanism solutions, all in real time.

Wamriew presented his research at the 2020 SPE Russian Petroleum Technology Conference in Moscow in October in paper SPE 201925, “Deep Neural Network for Real-Time Location and Moment Tensor Inversion of Borehole Microseismic Events Induced by Hydraulic Fracturing.”

The paper’s coauthors included Marwan Charara, Aramco Research Center, and Evgenii Maltsev, Skolkovo Institute of Science and Technology.

Skoltech is a private institute established in 2011 as part of a multiyear partnership with the Massachusetts Institute of Technology.

“People in the field mainly want to know if they created more fractures and if the fractures are connected,” Wamriew explained in a recent interview with JPT. “So, we need to know where exactly the fractures are, and we need to know the orientation (the source mechanism).”

It Starts With Data

“Usually, when you do hydraulic fracturing, a lot of data comes in,” Wamriew said. “It is not easy to analyze this data manually because you have to choose what part of the data you deal with, and, in doing that, you might leave out some necessary data that the human eye has missed.”

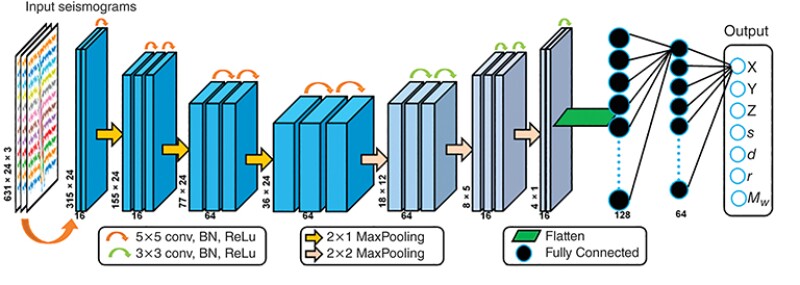

To solve this problem, Wamriew proposes feeding the microseismic data gathered during a fracturing job into a convolutional neural network (CNN) that he is constructing (Fig. 1). Humans discard nothing: wave signals from actual events, along with noise of all kinds, go into the machine, and the CNN delivers valuable information to reservoir engineers who want to understand the likely SRV.

Companies today can identify the location of microseismic events even without the help of artificial intelligence, though the techniques are always open to refinement. Analyzing the orientation of the fractures, however, and hence determining whether and how they are connected, is a difficult and often expensive task that is usually left undone.

“Current source mechanism solutions are largely inconsistent,” Wamriew said. “One scientist collects data and performs the moment tensor inversion, and another does the same and gets different results, even if they both use the same algorithm. When we handle data manually, we choose the process, and, in doing so, we introduce errors at every step because we are truncating, rounding up, and rounding down. We end up with something far from reality.”

By using a neural network, “we can test our algorithm many times and we verify that it is giving us the same thing,” Wamriew said. “A neural network is just a smart algorithm.”

Expenses play a role in deciding whether to identify source mechanisms because, to collect reliable data, geophones or fiber-optic cables must be suspended down a borehole during the fracturing operation. Companies might do this if they have abandoned or exploration wells nearby, but none will incur the expense of drilling a well only for this purpose.

So, they collect data from geophones strung across the surface while the fracturing is taking place 2 or 3 km below. Wamriew explained the problem: “Microseismic events give off very weak signals, and so you will be recording more noise than data. You get the noise of trucks moving around, noise from the well, and it becomes very difficult to distinguish between noise and signal. When you have low-quality data (i.e., data with low signal-to-noise ratio), you cannot determine the source mechanisms.”
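To put "low signal-to-noise ratio" in numbers: SNR is commonly expressed in decibels as 20 log10 of the ratio of the signal's RMS amplitude to the noise's, so a negative value means a recording holds more noise than signal. The minimal Python sketch below assumes white Gaussian noise and an RMS-based definition; the article specifies neither, and the function names are hypothetical.

```python
import math
import random

def rms(samples):
    """Root-mean-square amplitude of a sequence of samples."""
    return math.sqrt(sum(v * v for v in samples) / len(samples))

def snr_db(signal, noise):
    """SNR in decibels from RMS amplitudes; negative values mean noise dominates."""
    return 20.0 * math.log10(rms(signal) / rms(noise))

def add_noise(signal, target_snr_db, seed=0):
    """Add white Gaussian noise scaled so that snr_db(signal, noise) equals target_snr_db."""
    rng = random.Random(seed)
    raw = [rng.gauss(0.0, 1.0) for _ in signal]
    target_rms = rms(signal) / 10.0 ** (target_snr_db / 20.0)
    scale = target_rms / rms(raw)          # rescale so the realized noise RMS is exact
    noise = [scale * v for v in raw]
    noisy = [s + n for s, n in zip(signal, noise)]
    return noisy, noise

# A weak 25-cycle "event" buried in noise with roughly twice its RMS amplitude (-6 dB).
event = [math.sin(2 * math.pi * 25 * i / 1000) for i in range(1000)]
recorded, noise = add_noise(event, target_snr_db=-6.0)
print(round(snr_db(event, noise), 3))
```

Rescaling the noise to an exact RMS, rather than relying on the requested standard deviation, makes the realized SNR match the target precisely, which is convenient when building labeled test cases at controlled noise levels.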

The source mechanisms of the located events, which provide information about the magnitudes, modes, and orientations of the fractures, are obtained through moment tensor inversion of the recorded waveforms.
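For a pure shear (double-couple) source, the moment tensor is fully described by the fault's strike, dip, and rake plus the scalar moment M0, the same quantities the paper later uses as labels. As an illustration of that parameterization only, not of the inversion itself, the sketch below builds the tensor with the standard Aki and Richards formulas (x = north, y = east, z = down) and converts M0 in N·m to moment magnitude with Mw = (2/3)(log10 M0 - 9.1). Tensile opening, one of the fracture "modes," would need non-double-couple terms that this sketch omits; the function names are hypothetical.

```python
import math

def double_couple_tensor(strike_deg, dip_deg, rake_deg, m0=1.0):
    """Moment tensor of a pure double-couple source (Aki & Richards convention:
    x = north, y = east, z = down). Returns a symmetric 3x3 nested list."""
    phi, delta, lam = (math.radians(a) for a in (strike_deg, dip_deg, rake_deg))
    sd, cd = math.sin(delta), math.cos(delta)
    s2d, c2d = math.sin(2 * delta), math.cos(2 * delta)
    sl, cl = math.sin(lam), math.cos(lam)
    sp, cp = math.sin(phi), math.cos(phi)
    s2p, c2p = math.sin(2 * phi), math.cos(2 * phi)

    mxx = -m0 * (sd * cl * s2p + s2d * sl * sp ** 2)
    myy = m0 * (sd * cl * s2p - s2d * sl * cp ** 2)
    mzz = m0 * s2d * sl
    mxy = m0 * (sd * cl * c2p + 0.5 * s2d * sl * s2p)
    mxz = -m0 * (cd * cl * cp + c2d * sl * sp)
    myz = -m0 * (cd * cl * sp - c2d * sl * cp)
    return [[mxx, mxy, mxz], [mxy, myy, myz], [mxz, myz, mzz]]

def moment_magnitude(m0_newton_m):
    """Moment magnitude Mw from scalar moment M0 in N·m (IASPEI form)."""
    return (2.0 / 3.0) * (math.log10(m0_newton_m) - 9.1)

# A normal-faulting event with M0 = 1e6 N·m, a typical microseismic size.
tensor = double_couple_tensor(strike_deg=30.0, dip_deg=60.0, rake_deg=-90.0, m0=1.0e6)
print(sum(tensor[i][i] for i in range(3)))  # ~0: a pure double couple has no volume change
print(moment_magnitude(1.0e6))
```

A quick sanity check on any such tensor: its trace is zero and its eigenvalues are M0, 0, and -M0, so the sum of its squared components equals 2·M0².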

Wamriew supports the industry practice, used in some operations, of cementing fiber-optic cable along the well casing. This allows data to be collected continuously and close to the source as the rocks twist and slip during fracturing, opening new channels through which hydrocarbons can flow. Microseismic monitoring can also be used to investigate casing integrity.

Through downhole sensors, microseismic data is acquired closer to the source of the fracture and along vertical as well as horizontal vectors. The nature of the geologic medium through which a particular wave travels is also clearer.

But even that data will be noisy, so, ultimately, it takes a well-trained neural network to extract the information companies need to fine-tune fracturing operations efficiently and raise the SRV.

Machine Training With Big Data

In creating his deep CNN algorithm, Wamriew focused his research on developing a training data set of synthetic microseismic events with known locations and source mechanisms.

“The main advantage of the neural network approach is that expensive forward modeling operations are only done at the beginning to generate the training set,” he writes in his paper. “Subsequently, the trained neural network can then predict locations on new events rapidly, making the approach appealing for large sets of microseismic events or real-time applications. It is also straightforward to include prior information on model uncertainties, for example in the velocity model or source mechanisms, by sampling from the prior distribution when generating the training data set.”
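The approach described in the quote, paying for forward modeling once and folding prior uncertainty into the training set, can be sketched as follows. The forward model here is deliberately simple (straight rays in a homogeneous medium, P-wave travel times only) and stands in for the full waveform modeling the paper uses. The Gaussian velocity prior, the receiver geometry, and the function names are all hypothetical.

```python
import math
import random

def p_travel_time(src, rec, vp):
    """P-wave travel time (s) along a straight ray in a homogeneous medium."""
    return math.dist(src, rec) / vp

def make_training_set(n_events, receivers, vp_mean, vp_std, half_width, seed=0):
    """Build (features, label) pairs. Forward modeling runs only here, once;
    each event's P velocity is drawn from a (hypothetical) Gaussian prior."""
    rng = random.Random(seed)
    samples = []
    for _ in range(n_events):
        src = tuple(rng.uniform(-half_width, half_width) for _ in range(3))
        vp = rng.gauss(vp_mean, vp_std)          # sample from the velocity prior
        features = [p_travel_time(src, r, vp) for r in receivers]
        samples.append((features, src))          # label = known hypocenter
    return samples

# Hypothetical vertical array: 24 receivers, 10 m apart, in a well at x = y = 0.
receivers = [(0.0, 0.0, float(z)) for z in range(-115, 125, 10)]
training = make_training_set(100, receivers, vp_mean=4000.0, vp_std=200.0, half_width=250.0)
```

Because the velocity varies from event to event, a network trained on such pairs cannot rely on one exact velocity model, which is the point of sampling from the prior. Once trained, predicting a new event costs only a forward pass, with no further modeling.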

Wamriew starts with the geological model of a shale field and, from that model, uses the laws of physics to generate synthetic microseismic data. He performed 20 experiments, generating a total of 40,000 microseismic events. In each experiment, he assumed five fracture points spaced 100 m apart along a horizontal well and generated 400 microseismic events for each fracture point (20 × 5 × 400 = 40,000). The events are randomly distributed within a 500-m cube centered on a vertical monitoring well. Each event produces waves, which appear as subtle ripples on seismograms. Noise is then added, and the resulting seismograms become a training data set that teaches the neural network to recognize various events.
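The bookkeeping behind the 40,000 events can be sketched as follows. Only the counts, the 100-m spacing, and the 500-m cube come from the article. Placing the fracture points on the x-axis and drawing hypocenters uniformly in the cube, with the monitoring well at the origin, are assumptions, and the names are hypothetical. No waveforms are generated here.

```python
import random

N_EXPERIMENTS = 20
N_FRACTURE_POINTS = 5
FRACTURE_SPACING_M = 100.0
EVENTS_PER_POINT = 400
CUBE_EDGE_M = 500.0

def event_catalog(seed=0):
    """Return (experiment, fracture_point, fracture_x, x, y, z) for each synthetic event.

    Hypocenters are uniform in a cube centered on the monitoring well (the origin).
    fracture_x places the five fracture points 100 m apart along a horizontal well
    on the x-axis; this placement is a hypothetical choice kept only as metadata.
    """
    rng = random.Random(seed)
    half = CUBE_EDGE_M / 2.0
    catalog = []
    for exp in range(N_EXPERIMENTS):
        for fp in range(N_FRACTURE_POINTS):
            fracture_x = (fp - (N_FRACTURE_POINTS - 1) / 2.0) * FRACTURE_SPACING_M
            for _ in range(EVENTS_PER_POINT):
                x, y, z = (rng.uniform(-half, half) for _ in range(3))
                catalog.append((exp, fp, fracture_x, x, y, z))
    return catalog

catalog = event_catalog()
print(len(catalog))  # 40000 = 20 * 5 * 400
```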

Wamriew’s goal is to develop a novel deep-learning algorithm for processing microseismic data, trained on seismograms that model 40,000 microseismic events within the cube, a number he thinks would cover any imaginable situation. He is looking forward to validating his CNN-based algorithm with real field data from well-known projects, and he has recently acquired data from the Frontier Observatory for Research in Geothermal Energy (FORGE) in Milford, Utah, to begin this part of his research.

The US Department of Energy funds the FORGE project to assist scientists and engineers in testing and developing breakthroughs in enhanced geothermal systems technologies and techniques.

Wamriew himself has a professional background in geothermal energy, a field in which microseismic analysis is used to understand the dynamics of a geothermal reservoir in much the same way it is used for oil and gas fields in shale or other low-permeability rock.

In addition to using data from FORGE, Wamriew is negotiating to obtain geophysical data from researchers in Australia who have been collecting large quantities of data on naturally occurring microseismic events. The researchers record with both geophones and fiber-optic cables and then compare the results from the two acquisition systems.

“In forward modeling, we solve a well-posed forward problem by calculating the seismic response for a specified model whose elastic parameters are predefined,” Wamriew said.
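A toy version of the forward problem: the model is fixed (source position, receiver position, and a single P-wave velocity standing in for the elastic parameters), and the recorded trace is computed from it. The sketch assumes a homogeneous medium, straight rays, 1/r geometric spreading, and a Ricker source pulse. It ignores S waves, radiation patterns, and attenuation, which a realistic elastic modeling code would account for. The time step and velocity are hypothetical; only the 631-sample length comes from the paper.

```python
import math

def ricker(t, f_peak):
    """Ricker wavelet with peak frequency f_peak (Hz) evaluated at time t (s)."""
    a = (math.pi * f_peak * t) ** 2
    return (1.0 - 2.0 * a) * math.exp(-a)

def synthetic_trace(src, rec, vp, f_peak, dt, n_samples):
    """Forward-model one trace for a fixed model: a Ricker pulse arriving at the
    straight-ray P travel time, with amplitude decaying as 1/r (geometric spreading)."""
    r = math.dist(src, rec)
    t_arrival = r / vp
    return [ricker(i * dt - t_arrival, f_peak) / r for i in range(n_samples)]

src, rec = (100.0, 50.0, -30.0), (0.0, 0.0, 0.0)
trace = synthetic_trace(src, rec, vp=4000.0, f_peak=100.0, dt=0.0005, n_samples=631)
peak_index = max(range(len(trace)), key=lambda i: trace[i])
print(peak_index, peak_index * 0.0005)  # sample and time (s) of the modeled P arrival
```

The problem is well posed in this direction: one fixed model gives exactly one trace. Location and moment tensor inversion run the other way, from recorded traces back to the model, which is what the trained network is asked to do.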

In reality, the 40,000 events with which Wamriew began could occur only in “a really big field,” as he puts it. “Normally, you would not find microseismic like this, but we do this because we want to train our neural network so it will become clever enough to identify any event that might happen,” he said. “A neural network is just a clever algorithm that is organized in a computer to mimic a human brain.”

Data Set Preparation

“One of the advantages of using CNNs is the fact that they do not require extensive data preprocessing, since the filters, through convolution operations, are able to learn even complex features in the given data set,” Wamriew wrote in his paper. “This is invaluable as it preserves the integrity of the data, since each step in data processing introduces uncertainty. Thus, we performed minimal preprocessing during the data preparation.

“Two sets of data were prepared: one for locating the microseismic events and the other for moment tensor inversion. The data sets were prepared from the 40,000 seismograms obtained by forward modeling (discussed in the previous section). Each seismogram has a length of 631 sample steps and contains 24 traces, each from a channel of a single receiver. Hence, each seismogram is a tensor of dimensions 631×24×3. This makes the seismograms perfect candidates for convolutional neural networks because they can be treated like 2D RGB images, with the third dimension (in this case, the component) corresponding to the depth of the image.

“For each seismogram, we performed mean normalization by obtaining the mean amplitude of traces and subtracting it from the individual trace amplitudes and then dividing the difference by the absolute maximum amplitude minus the minimum amplitude. This is necessary to enhance the performance (convergence) of the neural network algorithm. The labels for the location data set were the three spatial coordinates (x, y, and z) of the hypocenter of the microseismic events while, for the moment tensor inversion data set, the labels were the strike, dip, rake, and moment magnitudes of the events.

“Having prepared both the feature and label data sets for event location and moment tensor inversion, we split each data set into two parts: one for training and validation and one for testing. In doing so, 90% of the seismograms were set aside for training and validation of the model, while the remaining 10% were reserved for testing.”
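The preparation steps quoted above (arranging each seismogram as a 631 × 24 × 3 tensor, mean normalization, and the 90/10 split) can be sketched in plain Python. This is an illustration under assumptions, not the paper's code. It assumes the 24 columns are traces and the last axis holds the three recorded components, that normalization is applied to each trace of each component separately, and that the split is a random shuffle. The function names are hypothetical.

```python
import random

def stack_components(comp_a, comp_b, comp_c):
    """Combine three n_samples x n_traces component gathers into one
    n_samples x n_traces x 3 tensor, the components acting like RGB channels."""
    return [[[comp_a[i][j], comp_b[i][j], comp_c[i][j]] for j in range(len(comp_a[0]))]
            for i in range(len(comp_a))]

def mean_normalize(trace):
    """Mean normalization: (x - mean) / (max - min). A constant trace is only centered."""
    mean = sum(trace) / len(trace)
    span = max(trace) - min(trace)
    if span == 0:
        return [x - mean for x in trace]
    return [(x - mean) / span for x in trace]

def normalize_seismogram(seis):
    """Apply mean_normalize to every trace of every component of an
    n_samples x n_traces x n_components tensor (per-trace scope is assumed)."""
    h, w, c = len(seis), len(seis[0]), len(seis[0][0])
    out = [[[0.0] * c for _ in range(w)] for _ in range(h)]
    for j in range(w):
        for ch in range(c):
            normed = mean_normalize([seis[i][j][ch] for i in range(h)])
            for i in range(h):
                out[i][j][ch] = normed[i]
    return out

def split_indices(n_items, test_fraction=0.10, seed=0):
    """Shuffle item indices, then hold out test_fraction of them for testing;
    the rest is used for training and validation."""
    idx = list(range(n_items))
    random.Random(seed).shuffle(idx)
    n_test = round(n_items * test_fraction)
    return idx[n_test:], idx[:n_test]

# One toy seismogram with the paper's dimensions (631 samples x 24 traces x 3 components).
rng = random.Random(1)
H, W = 631, 24
components = [[[rng.gauss(0.0, 1.0) for _ in range(W)] for _ in range(H)] for _ in range(3)]
features = normalize_seismogram(stack_components(*components))
print(len(features), len(features[0]), len(features[0][0]))  # 631 24 3

train_val, test = split_indices(40_000)
print(len(train_val), len(test))  # 36000 4000
```

With 40,000 seismograms, the split yields 36,000 for training and validation and 4,000 for testing. The article does not say how the 36,000 are further divided between training and validation.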