Among the many buzzy, digitally related words or terms bandied about the industry over the past year or two, “digital twin” serves as something of a confluence of them all.

Populating many industry conference agendas are high-level presentations and discussions with descriptors such as digitization, digitalization, digital transformation, and the digital disruption, which involve big data, data analytics, advanced analytics, artificial intelligence (AI), machine learning, automation, the Internet of Things (IoT), and the ever-important, abundantly abstract cloud. Some of those terms are used rather broadly and interchangeably, leading many to wonder: What exactly are we talking about here?

The definition of a digital twin is similarly hard to pin down, but the concept is easy to grasp at a basic level. The technology links the physical world with the digital world by providing a digital model of a physical asset or process. It serves as a real-time data hub for its owner, allowing comparison against designed or expected performance and continuous offsite monitoring.

Roots of the idea can be traced back to a similarly challenging sector that is tasked with exploring and operating in harsh environments normally inaccessible by humans. Successful space travel requires complex modeling and simulations, which NASA for decades has employed from its field centers on the ground. Michael Grieves, currently executive director of Florida Institute of Technology, brought the term to the fore during the early 2000s while working in the University of Michigan’s college of engineering, but he credits the coinage to his one-time NASA colleague and technologist John Vickers.

Multinational conglomerates such as GE and Siemens have deployed the twin in everything from jet engines to power plants. The architectural engineering and automotive manufacturing industries are also mature users of digital twins; in the building industry, the digital twin is known as the building information model (BIM).

Traditionally slow on the uptake when it comes to implementing new technologies, the oil and gas industry is beginning to put the twin to work on its equipment, facilities, and wells with the promise of more efficient engineering, procurement, construction (EPC), and operations. The virtual hub is envisioned as a way of breaking down silos between work phases, operators and contractors, and the different industry disciplines—such as geophysics and reservoir engineering—required to carry out upstream projects.

Bruce Bailie, digital officer, oil and gas Americas at Siemens, said when he first mentioned the term “digital twin” to an operator last year, he was asked not to use it again “because it’s too confusing. It means something different to every person who uses it.” They suggested he instead “talk about the functionality of what you’re providing and not ‘digital twin.’”

For Siemens, “When we refer to our digital twin, we’re talking about creating a reference of the asset which is accurate and maintained throughout the life cycle,” Bailie said. Until recently, he explained, there had not been a single source of knowledge or “truth” for an asset that incorporates all of its components and processes.

Digital twin is “building up all the engineering, knowledge, and behavior of the asset from concept, front-end engineering design through design, engineering, fabrication, construction, commissioning, operations, and maintenance,” meaning the twin isn’t formed all at once, nor is it a final product, he said. “So it’s not an event—the digital twin is actually created through the project life cycle.”

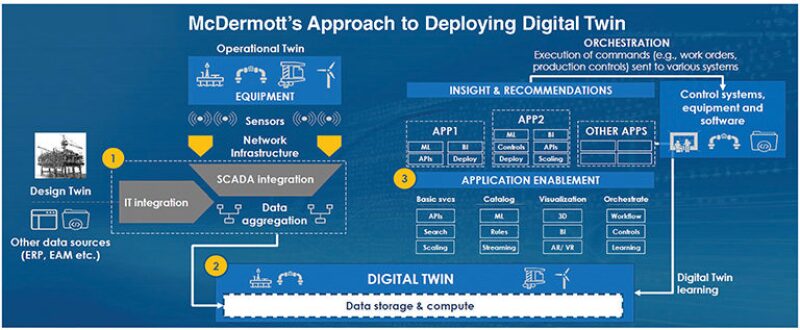

The offshore EPC contractor leverages data from the existing systems on a platform, such as SCADA or PLC, while looking at edge-computing-enabled sensors on facilities where there are bandwidth limitations.

Twin Functions

On a broad level, Siemens divides its twin model into two categories: the plant twin and the process twin. The plant twin is the 3D or physical model of the asset, while the process twin refers to the modeled behavior or performance of the asset.

To further illustrate the technology’s function, Siemens also breaks down the twin into three levels: the overarching plant, its systems, and the equipment making up those systems. “The reason to consider the twin at these three levels is that the degree of detail or accuracy required at each level is significantly different,” Bailie explained.

The equipment-level twin includes detailed engineering and manufacturing data for equipment design, which is typically maintained in product life cycle management (PLM) software. Equipment combined as a functioning unit makes up the system-level twin; one example is a power generation module consisting of a gas turbine and generator. “The twin at this level needs to accurately represent the combined operation of all the equipment in the module, but this is less rigorous than the accuracy required to design the individual products,” he said.

Multiple systems are combined into the plant-level twin, which provides a model of the overall plant performance. This enables optimization of production as well as operator training and spatial navigation to all of the design and operational data.

Because the lower-level models or components are used for detailed studies or analysis, the plantwide twin does not require modeling “at the same fidelity and accuracy,” Bailie said. “You’re not trying to design the pump impeller; that’s already been done. All you need at the plant level is to know, for that amount of horsepower and pressure and viscosity of the fluid, I’m going to get the actual and designed performance that’s required,” he said.
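The three levels can be pictured as a nested data structure. The minimal Python sketch below assumes a plant-level check needs only each pump's flow, pressure rise, and shaft power to compare measured efficiency against design; detailed impeller data is assumed to stay in PLM software and is referenced only by a hypothetical `plm_ref` field. The class names, tags, readings, and 5% tolerance are illustrative, and viscosity corrections are ignored.

```python
from dataclasses import dataclass, field


@dataclass
class Equipment:
    """Equipment-level entry. Detailed design data is assumed to live in PLM;
    the plant twin keeps only a hypothetical reference to it."""
    tag: str
    plm_ref: str
    design_efficiency: float  # fraction between 0 and 1

    def measured_efficiency(self, flow_m3_s: float, dp_kpa: float, shaft_kw: float) -> float:
        # Hydraulic power in kW = flow (m3/s) x pressure rise (kPa)
        return (flow_m3_s * dp_kpa) / shaft_kw


@dataclass
class System:
    """System-level twin: equipment operating together, e.g., a pumping module."""
    name: str
    equipment: list = field(default_factory=list)


@dataclass
class Plant:
    """Plant-level twin: coarse performance checks only, no component design detail."""
    name: str
    systems: list = field(default_factory=list)

    def underperformers(self, readings: dict, tolerance: float = 0.05) -> list:
        """Return tags whose measured efficiency is more than `tolerance` below design.
        `readings` maps tag -> (flow_m3_s, dp_kpa, shaft_kw)."""
        flagged = []
        for system in self.systems:
            for eq in system.equipment:
                if eq.tag not in readings:
                    continue
                actual = eq.measured_efficiency(*readings[eq.tag])
                if eq.design_efficiency - actual > tolerance:
                    flagged.append(eq.tag)
        return flagged


plant = Plant("platform", [System("water injection", [
    Equipment("P-101", "PLM-0001", 0.75),
    Equipment("P-102", "PLM-0002", 0.75),
])])
readings = {"P-101": (0.1, 500.0, 80.0), "P-102": (0.1, 600.0, 80.0)}
print(plant.underperformers(readings))  # ['P-101']
```

Keeping equipment-level detail behind a reference, rather than copying it into the plant model, mirrors the point that plant-level fidelity can be lower.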

The process twin allows for engineering simulations and automation system testing. While “engineering models have been around forever,” all studies during the design phase in the past “were independent and discrete,” meaning the mechanical design of a piece of equipment was done completely independently of process modeling, Bailie said. Digital twin ties those two models together.

“On a high-fidelity twin, you can even do pretuning of your controllers before commissioning. In fact, we had one project in the North Sea where, when we did commissioning, there were only a couple of [control] loops that had to be retuned in a live plant because they were all tuned prior to the plant actually being built,” he said.
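Pretuning against a model can be illustrated with a toy loop. This sketch assumes a first-order process (gain and time constant chosen arbitrarily), a standard PI control law, and a simple grid search that scores each gain pair by integrated absolute error (IAE). It is not Siemens' method; real commissioning work relies on validated dynamic process models and proper tuning tools.

```python
def simulate_pi(kp, ki, gain=2.0, tau=10.0, setpoint=1.0, dt=0.1, t_end=100.0):
    """Step response of a PI loop on a first-order process model
    tau * dy/dt = gain * u - y, integrated with explicit Euler.
    Returns the integral of absolute error (IAE) as a tuning score."""
    y = 0.0
    integral = 0.0
    iae = 0.0
    for _ in range(int(t_end / dt)):
        error = setpoint - y
        integral += error * dt
        u = kp * error + ki * integral   # PI control law
        y += dt * (gain * u - y) / tau   # process model update
        iae += abs(error) * dt
    return iae


def pretune(kp_grid, ki_grid, **process):
    """Grid-search PI gains against the model; returns (iae, kp, ki) of the best pair."""
    return min((simulate_pi(kp, ki, **process), kp, ki)
               for kp in kp_grid for ki in ki_grid)


best_iae, best_kp, best_ki = pretune([0.1, 0.5, 1.0, 2.0, 5.0], [0.01, 0.05, 0.1, 0.5])
print(f"kp={best_kp}, ki={best_ki}, IAE={best_iae:.2f}")
```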

Kishore Sundararajan, president of engineering and product management, oilfield services at GE’s Baker Hughes (BHGE), describes the physics model—as opposed to the physical model—as engineering models used during design, validation, and failure modes and effects analysis, among other functions. Before on-site installation, “this model is parameterized to match the ‘as installed’ machine and becomes the digital twin that follows the life cycle of the machine.”

During operations, it accounts for the machine’s operating conditions and health status, serving as the basis for delivering descriptive, prescriptive, and predictive analytics, he explained. Descriptive analytics offer a notification of an event or status. Prescriptive analytics provide a notification plus an automated action, such as issuing a work order for repair and maintenance. Predictive analytics offer a prediction of the asset’s remaining life, enabling proactive planning and action before failure.
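The three analytics tiers can be sketched as three small functions. The health index, alarm and failure thresholds, and work-order text here are hypothetical, and the predictive step uses a plain least-squares linear trend, which is only one of many possible remaining-life models.

```python
def descriptive(health, alarm_level):
    """Descriptive: notify of an event or status."""
    if health < alarm_level:
        return f"Health index {health:.2f} below alarm level {alarm_level:.2f}"
    return None


def prescriptive(health, alarm_level, asset_tag):
    """Prescriptive: the notification plus an automated action (a work order)."""
    note = descriptive(health, alarm_level)
    if note is None:
        return None
    return {"notification": note, "work_order": f"Inspect and repair {asset_tag}"}


def predictive(days, health, failure_level):
    """Predictive: days of remaining life from a least-squares linear trend
    of the health index, extrapolated to a failure threshold."""
    n = len(days)
    mean_d = sum(days) / n
    mean_h = sum(health) / n
    sxx = sum((d - mean_d) ** 2 for d in days)
    sxy = sum((d - mean_d) * (h - mean_h) for d, h in zip(days, health))
    slope = sxy / sxx
    if slope >= 0:
        return None  # no degradation trend to extrapolate
    intercept = mean_h - slope * mean_d
    failure_day = (failure_level - intercept) / slope
    return max(0.0, failure_day - days[-1])


days = [0, 10, 20, 30]
health = [1.0, 0.9, 0.8, 0.7]
print(prescriptive(health[-1], 0.75, "P-101"))
print(predictive(days, health, failure_level=0.3))  # about 40 days
```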

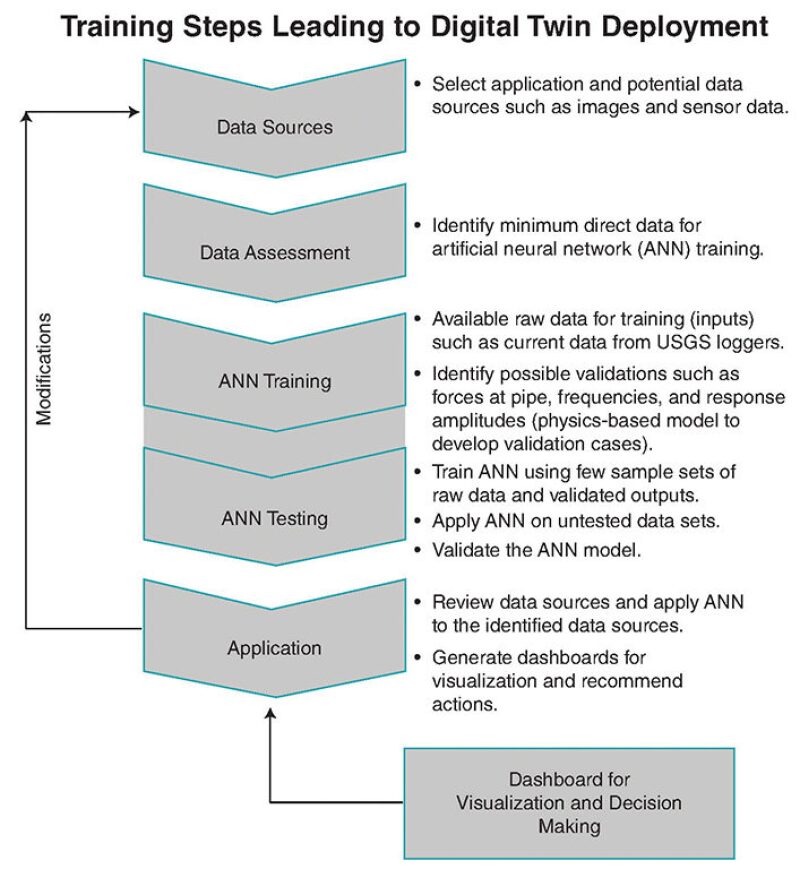

With the digital twin, as in any design process, it is ideal to plan the type of algorithm based on potential data sources and to have the model trained and tested prior to deployment.

What Feeds a Digital Twin?

The digital twin is made up of data “typically collected from a variety of sources such as sensors, control systems, historians, weather data, and more,” Sundararajan explained. “All this data is collected in a data lake in the cloud. The data is cleansed, verified for quality, and then fed to the physics models for processing, interpretation, and to provide insights. The sensors, control systems, and historians are typically hardware in the plant site, and all the rest is software.”
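The cleanse, verify, and feed sequence Sundararajan describes might look like this in miniature. The physical ranges, the 80% pass threshold, and the `pressure_ratio` function (a stand-in for a real physics model) are all assumptions; the cloud data lake itself is not modeled.

```python
import math
from statistics import mean


def cleanse(raw, low, high):
    """Drop missing or non-finite values and readings outside the physical range."""
    return [r for r in raw
            if r is not None and math.isfinite(r) and low <= r <= high]


def verified(raw, low, high, min_fraction=0.8):
    """Quality gate: refuse to feed a model if too much raw data was rejected."""
    clean = cleanse(raw, low, high)
    if not raw or len(clean) / len(raw) < min_fraction:
        raise ValueError(f"only {len(clean)} of {len(raw)} readings passed cleansing")
    return clean


def pressure_ratio(suction_kpa, discharge_kpa):
    """Hypothetical stand-in 'physics model': compressor pressure ratio."""
    return mean(discharge_kpa) / mean(suction_kpa)


suction = verified([100.0, 100.0, None, 100.0, 100.0], low=0.0, high=10_000.0)
discharge = verified([300.0, 300.0, 300.0, 300.0], low=0.0, high=10_000.0)
print(pressure_ratio(suction, discharge))  # 3.0
```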

Offshore EPC contractor McDermott International leverages data from existing systems on a platform, such as supervisory control and data acquisition (SCADA) and programmable logic controllers (PLC), “while looking at edge-computing-enabled sensors on facilities where there are bandwidth limitations,” said Vaseem Khan, the company’s vice president of engineering. “We’re in discussions with multiple partners in order to enable the data collection, aggregation, storage, and visual analysis” for the twin, Khan noted.
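Edge computing on a bandwidth-limited link often means sending summaries instead of raw samples. The sketch below assumes fixed-size windows summarized as minimum, mean, and maximum, with any sample above a limit forwarded raw so anomalies are not averaged away. The window size and limit are illustrative, and this is not a description of McDermott's setup.

```python
def edge_summarize(samples, window, limit):
    """Reduce raw sensor samples at the edge before sending them over a
    constrained link: one (min, mean, max) tuple per window, plus the index
    and value of any sample above `limit`."""
    summaries = []
    exceptions = []
    for start in range(0, len(samples), window):
        chunk = samples[start:start + window]
        summaries.append((min(chunk), sum(chunk) / len(chunk), max(chunk)))
        exceptions.extend((start + i, v) for i, v in enumerate(chunk) if v > limit)
    return summaries, exceptions


samples = list(range(10))
print(edge_summarize(samples, window=5, limit=8))
# ([(0, 2.0, 4), (5, 7.0, 9)], [(9, 9)])
```

With 1,000 samples and a window of 100, only 10 summary tuples (plus any exceptions) would cross the link.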

McDermott’s digital twin is based on its Gemini XD software platform, which is named after the twin constellation and NASA’s Gemini space program, the precursor to the Apollo program. It was developed in-house in partnership with French software firm Dassault Systèmes. “So what we are doing is we’re taking the technology that’s used in the automotive and the aerospace industries” that use PLM for repetitive manufacturing, “and we’re modifying it to use in our more-bespoke project environment,” Khan said.

Partha Sharma, chief architect of DNV GL’s AVATAR Digital Twin, said the twin has existed at his company since it developed its first finite element (FE) programs in the 1970s. At that time, the digital twin consisted of structural FE models, which were primarily used in the design phase.

“Technology has since evolved and we now use a combination of both traditional physics-based FE models and data-driven machine learning models for all phases of the asset life cycle: design, fabrication, installation, and operations,” he explained. “These models are digital replicas of the physical asset and can be used for condition assessment of a variety of assets including hulls, moorings, risers, subsea pipelines, topside piping, and equipment” such as pumps and compressors. “The digital twin is supported by a variety of models for damage mechanisms such as fatigue, fracture, corrosion, and erosion, to name a few,” he said.

In support of this, more and more data-driven machine-learning models are being applied as part of the digital twin. “Several methods exist for creating digital twin using machine learning,” which may involve supervised learning or unsupervised learning, Sharma said. An artificial neural network (ANN) trained on labeled input-output examples is one form of supervised learning and is considered a reliable and efficient method of creating a twin.

“Recently, we completed a project for a major operator where we demonstrated the application of ANN for computing fatigue of steel catenary risers,” he said. “The method is extremely efficient and accurate.”
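To show what an ANN surrogate looks like in principle, here is a tiny one-hidden-layer network trained by gradient descent in plain Python. DNV GL's model, inputs, and training data are not described in the source; the single normalized input and the quadratic "damage" relationship below are hypothetical stand-ins for results that would, in practice, come from physics-based riser analyses or measurements.

```python
import math
import random


def synthetic_fatigue_data(n=21):
    """HYPOTHETICAL training pairs standing in for physics-based results:
    a normalized damage rate assumed to grow with the square of a normalized
    sea-state parameter."""
    xs = [i / (n - 1) for i in range(n)]
    return [(x, x * x) for x in xs]


class TinyANN:
    """One hidden layer of tanh units and a linear output neuron,
    trained by per-sample (stochastic) gradient descent on squared error."""

    def __init__(self, hidden=8, seed=0):
        rng = random.Random(seed)
        self.w1 = [rng.uniform(-1, 1) for _ in range(hidden)]
        self.b1 = [rng.uniform(-1, 1) for _ in range(hidden)]
        self.w2 = [rng.uniform(-1, 1) for _ in range(hidden)]
        self.b2 = 0.0

    def _hidden(self, x):
        return [math.tanh(w * x + b) for w, b in zip(self.w1, self.b1)]

    def predict(self, x):
        h = self._hidden(x)
        return sum(w * hj for w, hj in zip(self.w2, h)) + self.b2

    def mse(self, data):
        return sum((self.predict(x) - y) ** 2 for x, y in data) / len(data)

    def train(self, data, epochs=1000, lr=0.05):
        for _ in range(epochs):
            for x, y in data:
                h = self._hidden(x)
                err = sum(w * hj for w, hj in zip(self.w2, h)) + self.b2 - y
                for j, hj in enumerate(h):
                    # Backpropagate through tanh using the pre-update output weight
                    grad_pre = err * self.w2[j] * (1.0 - hj * hj)
                    self.w2[j] -= lr * err * hj
                    self.w1[j] -= lr * grad_pre * x
                    self.b1[j] -= lr * grad_pre
                self.b2 -= lr * err


data = synthetic_fatigue_data()
net = TinyANN()
before = net.mse(data)
net.train(data)
after = net.mse(data)
print(f"training MSE: {before:.4f} -> {after:.5f}")
print(f"surrogate at x=0.5: {net.predict(0.5):.3f} (target 0.25)")
```

Once trained, a surrogate like this is cheap to evaluate compared with rerunning a full analysis, which is the usual argument for its efficiency.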

Development of a digital twin depends on the application and the data sources available to the operator, Sharma emphasized. The many sources of data that feed twin models include survey data, aerial imagery, sensor data, public sources such as Google images, flow velocity measurements, GIS databases, physics-based models, experimental test data, and inspection data from site visits.

Some of the data may be unstructured, such as PDFs; structured, such as Excel spreadsheets; or semi-structured, such as log files from an operator’s integrity management program. “Data derived from these sources may be used for building specialized algorithms and will be applicable for those types of data inputs,” he said. “To account for several data sources or several failure mechanisms or components, these could be connected to an all-encompassing digital twin package.”
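Bringing structured and semi-structured sources into one format is often the first step before connecting them to a single twin. This sketch assumes a CSV export with hypothetical `asset`, `metric`, and `value` columns and an integrity log whose lines contain an `ASSET metric=value` fragment; unstructured PDFs would need a separate text-extraction step that is not shown.

```python
import csv
import io
import re


def from_spreadsheet_csv(text):
    """Structured source: named columns, e.g., a spreadsheet exported to CSV."""
    return [{"asset": row["asset"], "metric": row["metric"], "value": float(row["value"])}
            for row in csv.DictReader(io.StringIO(text))]


# Semi-structured source: free-text log lines with an "ASSET metric=value" fragment
LOG_PATTERN = re.compile(
    r"(?P<asset>[A-Z][A-Z0-9-]*)\s+(?P<metric>\w+)=(?P<value>-?\d+(?:\.\d+)?)")


def from_integrity_log(text):
    records = []
    for line in text.splitlines():
        match = LOG_PATTERN.search(line)
        if match:  # lines without a reading are skipped
            records.append({"asset": match["asset"], "metric": match["metric"],
                            "value": float(match["value"])})
    return records


csv_text = "asset,metric,value\nPUMP-1,vibration_mm_s,4.2\n"
log_text = "2018-05-01 inspection RISER-2 wall_loss_mm=1.4\n2018-05-02 no reading\n"
combined = from_spreadsheet_csv(csv_text) + from_integrity_log(log_text)
print(combined)
```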

BP Leading Digital Twin Adoption

Among operators, BP has been an “aggressive” and “early adopter” of digital twin and accompanying technologies, said Kishore Sundararajan, president of engineering and product management, oilfield services at GE’s Baker Hughes (BHGE). “From a cultural adaptation perspective, they are the furthest ahead,” which is being driven by Bernard Looney, BP’s upstream chief executive, he said.

BP recently announced contracts with McDermott International and BHGE for use of the twin on major offshore gas projects in different parts of the world: the Tortue-Ahmeyim field development off Mauritania and Senegal and the Cassia C project off Trinidad and Tobago.

McDermott in March was awarded a detailed engineering and long-lead procurement services contract for the Cassia C compression platform, 65 km off the southeast coast of Trinidad and Tobago. The project includes a new unmanned compression platform for the existing Cassia complex. The facility will provide gas compression to the Cassia complex via a new bridge connected to Cassia B.

Also in March, McDermott and BHGE were selected for front-end engineering design studies in advance of an engineering, procurement, construction, and installation contract for Tortue-Ahmeyim, which lies on the maritime border of Mauritania and Senegal. McDermott will work on defining the subsea umbilicals, risers, and flowlines (SURF) scope for the project, while BHGE will focus on the subsea production system scope.

“BP is actually one of our strongest supporters in this initiative,” said Vaseem Khan, McDermott vice president of engineering, noting that the operator sees collaboration as the most valuable aspect of the digital twin. The contractor plans to develop a twin of the complete systems on both projects. “The scope on these projects is currently being defined,” Khan said. “At present, the Tortue SURF system will have a digital twin with McDermott services provided post-handover. We have ongoing discussions with BP to further define that digital twin.”

BP has also enlisted BHGE to help it optimize production from its 200,000-B/D, 180-MMcf/D Atlantis platform in the Gulf of Mexico via BHGE’s plant operations advisor (POA) software, which integrates operational data from producing oil and gas facilities to deliver notifications and analytical reports to engineers so they can identify performance issues before they become big problems. This is intended to reduce unplanned downtime and improve facility reliability.

Using GE’s Predix operating system and asset performance management (APM) capabilities, POA combines big data, cloud hosting, and analytics on both individual pieces of equipment and the production system as a whole. The system provides simplified access to a variety of live data feeds and has visualization capabilities including a real-time facility threat display, BHGE said. It also incorporates case management capabilities to support learnings from prior operational issues.

In addition to its use on Atlantis, POA is undergoing field trials on three other platforms. BHGE intends to offer the technology as an APM option for the industry.

Improved Design-to-Commissioning Work

“Our industry over the past 10-odd years has seen huge inflation, and one of the causes of inflation is that every time we do something, we do it differently,” said Khan, who believes the issue can be resolved in large part by standardization through the twin. “There are a number of initiatives that we are running to standardize” at McDermott, he said. “With the digital twin, we can take components of what we have built—digital components—and then reuse them. So instead of custom-building [an offshore] platform, we will, because everything has been digitized, take parts of platforms we’ve built previously and integrate them into a new platform, almost like we are modularizing the design of a platform.”

McDermott over the past decade has designed more than 100 jackets for the Middle East market, where it previously would design each jacket “from scratch, do all the analysis work, and take about 9 months” before starting fabrication, he said. “Now that we have digitized this process, we have the ability of going into our digital database, inputting parameters relevant to the new jacket—water depth, topside loading, salt conditions—and it will give us a digital model of the closest jacket to the one we need. That model can then be tweaked, and we can start fabricating a jacket” 3–4 months into the cycle instead of 9 months, which ultimately reduces the time before installation in the field.
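The lookup Khan describes can be approximated as a nearest-neighbour search over past designs. The sketch assumes only two parameters (water depth and topside load), scales each by its range in the library so neither unit dominates, and uses invented jacket records; McDermott's actual search method and parameter set are not described in the source.

```python
import math
from dataclasses import dataclass


@dataclass(frozen=True)
class JacketDesign:
    design_id: str
    water_depth_m: float
    topside_load_t: float


def closest_design(library, water_depth_m, topside_load_t):
    """Nearest neighbour on parameters scaled by their range in the library,
    so metres and tonnes contribute comparably to the distance."""
    depth_span = (max(d.water_depth_m for d in library)
                  - min(d.water_depth_m for d in library)) or 1.0
    load_span = (max(d.topside_load_t for d in library)
                 - min(d.topside_load_t for d in library)) or 1.0

    def distance(d):
        return math.hypot((d.water_depth_m - water_depth_m) / depth_span,
                          (d.topside_load_t - topside_load_t) / load_span)

    return min(library, key=distance)


library = [
    JacketDesign("J-001", 30.0, 2000.0),
    JacketDesign("J-002", 45.0, 3500.0),
    JacketDesign("J-003", 70.0, 6000.0),
]
print(closest_design(library, water_depth_m=48.0, topside_load_t=3800.0).design_id)  # J-002
```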

Additionally, the real-time collaboration that is facilitated by digital twin “will reduce what we call the cost of non-quality” during the EPC project life cycle, providing fail-safe mechanisms during procurement and construction, said Khan.

“Let’s say we bought a 6-in. valve. As part of engineering we then determine that this 6-in. valve should [instead] be a 4-in. valve—and we’re determining it very early in the life cycle of the valve. We tell the valve supplier to please change the valve [to 4 in.], and the valve supplier changes it. But the information doesn’t go to the piping people, and they are still buying a 6-in. flange instead of a 4-in. flange. So when everything shows up in the yard for fabrication, and we’re doing the spool, we’ve got the wrong-sized flange.” The correct size is missing “because right now this information is communicated by mocking up drawings, by writing notes on Post-it pads, or by sending emails,” he explained.

Using Gemini XD, “this information is communicated in real time digitally. So the 3D model, which is the center of the digital twin, will start flashing red indicating that the valve has changed and you need to change the flange as well. And it will require someone to take positive action,” he said. This will result in “cost savings, which we will pass on to our client. We will take lower contingency on rework, and there will also be, in terms of commissioning, more completeness of information when we hand over a facility. So when we hand over the digital twin to our client, we’re not handing over container loads of documentation. Everything about that facility—our design data, vendor design data, quality data, inspection records, welding, X-rays, everything—is embedded into the digital twin.”
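The valve-and-flange scenario amounts to change propagation through connected items. In this hypothetical sketch, resizing one item flags every connected item whose size no longer matches, and the flag clears only when someone explicitly resolves it; the class and method names are illustrative and not part of Gemini XD.

```python
from collections import defaultdict


class ChangeTracker:
    """Hypothetical sketch: items connected in a shared model. Changing one
    item's size flags every connected item that no longer matches, and a flag
    clears only when someone takes positive action to resolve it."""

    def __init__(self):
        self.size_in = {}                    # tag -> nominal size, inches
        self.connections = defaultdict(set)  # tag -> connected tags
        self.flagged = set()                 # items "flashing red"

    def add_item(self, tag, size_in):
        self.size_in[tag] = size_in

    def connect(self, a, b):
        self.connections[a].add(b)
        self.connections[b].add(a)

    def change_size(self, tag, new_size):
        self.size_in[tag] = new_size
        for other in self.connections[tag]:
            if self.size_in[other] != new_size:
                self.flagged.add(other)

    def resolve(self, tag, new_size):
        """Positive action by the responsible discipline."""
        self.size_in[tag] = new_size
        if all(self.size_in[n] == new_size for n in self.connections[tag]):
            self.flagged.discard(tag)


model = ChangeTracker()
model.add_item("VALVE-1", 6)
model.add_item("FLANGE-1", 6)
model.connect("VALVE-1", "FLANGE-1")
model.change_size("VALVE-1", 4)  # engineering downsizes the valve
print(model.flagged)             # {'FLANGE-1'}
model.resolve("FLANGE-1", 4)     # piping updates the flange order
print(model.flagged)             # set()
```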

McDermott is currently building twins from concept to commissioning, but its “ultimate aim is to take this to Phase 2,” where it integrates the digital twin with the operator’s enterprise asset management system, such as SAP or IBM Maximo, during operations “so that the client has a single portal into their facilities,” Khan said. “If they want to do maintenance work, they will first simulate maintenance work on the digital twin. If they need records of how to change the seal in a pump … that is sitting in the digital twin. Someone will put on their Google Glasses, they’ll go into the digital twin, they’ll access the records, and they’ll practice changing the seal on the pump. That’s the ultimate aim. That’s where I think our industry will go.”

So when routine work is needed offshore, “you won’t need 40 or 50 people working in the Gulf of Mexico on 2-week rotations. All of that will be handled [onshore] because the digital twin is giving you the exact same insight that you would have if you were physically on the platform,” Khan said.

More-Efficient Operations

During operations, digital twin enables an end user to continuously improve performance by predicting degradation of equipment and assets, Sharma said. “Armed with this information, the operator can adopt a data-driven preventive maintenance program that can reduce downtime and save operational costs. We may also be able to use the digital twin for asset-life extension by preventing premature replacement of equipment. For example, a new riser may cost millions of dollars to fabricate and install, and if we can use the existing risers beyond the original design life by using actual measured fatigue data and demonstrate fitness for service, tremendous cost savings can be achieved while maintaining the safety level.”
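One widely used way to turn measured stress cycles into consumed fatigue life is Palmgren-Miner linear damage accumulation with an S-N curve of the form N = a * S^(-m). The S-N parameters, the one-bin stress histogram, and the damage to date below are hypothetical; real assessments take their curves and safety factors from the applicable design codes, which the `allowable` parameter can stand in for.

```python
def cycles_to_failure(stress_range_mpa, a, m):
    """S-N curve of the form N = a * S**(-m)."""
    return a * stress_range_mpa ** (-m)


def miner_damage(histogram, a, m):
    """Palmgren-Miner sum D = sum(n_i / N_i) over (stress_range_mpa, cycles) bins."""
    return sum(n / cycles_to_failure(s, a, m) for s, n in histogram)


def remaining_life_years(damage_to_date, annual_histogram, a, m, allowable=1.0):
    """Years until accumulated damage reaches `allowable`, assuming the
    measured annual stress histogram repeats every year."""
    annual = miner_damage(annual_histogram, a, m)
    return max(0.0, (allowable - damage_to_date) / annual)


# HYPOTHETICAL S-N parameters and measured histogram, for illustration only
A, M = 1e12, 3.0
measured_year = [(100.0, 1e5)]  # 100,000 cycles at a 100 MPa stress range
print(miner_damage(measured_year, A, M))              # about 0.1 per year
print(remaining_life_years(0.4, measured_year, A, M))  # about 6 years
```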

In addition to creating the twin to predict fatigue failure of riser systems, DNV has used the method to predict the failure of mooring lines and erosion in subsea equipment. The company is also now creating a digital twin for predicting pipeline vibration issues induced by the flow of fluid. For each of these examples, “the digital twin model is tuned based on project-specific conditions and data sources available to the operator,” and ANN algorithms were developed for machine learning, Sharma said.

Bailie said Siemens has used its COMOS software in operations and maintenance, including COMOS Walkinside, one aspect of the digital twin. “When you look at a spatial (3D) twin, we have used our Walkinside application for training operators in areas such as spatial awareness.”

In the area of collision detection, Siemens, through its plant twin, is performing motion studies that, for example, determine whether there is sufficient space around equipment on an offshore platform to allow for the most efficient emergency evacuation. Training using Siemens’ immersive training simulator has been performed for Total’s Pazflor floating production, storage, and offloading (FPSO) vessel off Angola without the actual FPSO, using an avatar within a 3D model to provide the immersive experience for the operator. Bailie added that Siemens’ engineering and monitoring twins “remain active and integrated to operations and maintenance.” This includes Aker BP’s Ivar Aasen platform in the North Sea, which is using the technology to support onshore remote operations.
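A static version of the clearance question can be checked with axis-aligned bounding boxes. This simplified sketch computes the shortest gap between each equipment box and an escape-route box and flags anything closer than a required clearance; the coordinates and the 0.8 m requirement are invented, and real motion studies work with detailed 3D geometry rather than boxes.

```python
import math
from dataclasses import dataclass


@dataclass(frozen=True)
class Box:
    """Axis-aligned bounding box in platform coordinates, metres."""
    name: str
    lo: tuple  # (x, y, z) minimum corner
    hi: tuple  # (x, y, z) maximum corner


def gap(a, b):
    """Shortest distance between two axis-aligned boxes (0.0 if they touch or overlap)."""
    d = [max(0.0, b.lo[i] - a.hi[i], a.lo[i] - b.hi[i]) for i in range(3)]
    return math.sqrt(sum(x * x for x in d))


def clearance_violations(equipment, escape_route, min_clearance_m):
    """Names of equipment boxes closer to the escape route than the required clearance."""
    return [e.name for e in equipment if gap(e, escape_route) < min_clearance_m]


route = Box("escape route", (0.0, 0.0, 0.0), (1.0, 10.0, 2.5))
equipment = [
    Box("pump skid", (1.5, 2.0, 0.0), (3.0, 4.0, 1.0)),  # 0.5 m from the route
    Box("filter", (2.0, 5.0, 0.0), (4.0, 7.0, 2.0)),     # 1.0 m from the route
]
print(clearance_violations(equipment, route, min_clearance_m=0.8))  # ['pump skid']
```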

Sundararajan said BHGE is deploying digital twin to improve drilling efficiency by optimizing the rate of penetration and better managing the drillstring, the bit, the bottomhole assembly, and fluids and vibration, along with parameters for the rig including weight on bit, torque, horsepower, and revolutions per minute. Digital twins are also proving to be useful during well operations. In a presentation last year, the firm showcased its use of AI and deep learning—and thus the twin—as part of an artificial lift system operating in an unconventional well in the Mississippian Lime play of Oklahoma in 2016.

In the example, the twin recognized that scale buildup in the well at 6,000 ft would reach a critical point 3 months down the road, resulting in a 20% decline in production without measures being taken. Using current and historical data from that well along with current and historical production and cost data from similar wells, it ran 540 simulations to find an optimal range of solutions. It provided two remediation options based on effectiveness with 31 offset wells over the previous 6 months: either increase the dosage volume of scale inhibitor or schedule a workover with chemical batch treatment.

Integrating financial information, it predicted that, over the next 3 years, net present value (NPV) for Option 1 would be $1.8 million and the cost for continuous chemical injection would be $27,000/year, while NPV for Option 2 would be $1.5 million using intermittent batch chemical treatment at $20,000/year. The user selected Option 1, which offered the higher NPV and a lower risk to production, despite its slightly higher annual treatment cost. The twin carried out the command through GE’s upstream asset performance management software.
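The NPV figures rest on discounting future cash flows. The source does not give the discount rate or the cash flows behind the $1.8 million and $1.5 million values, so this sketch only discounts each option's annual treatment cost over 3 years at an assumed 10% rate.

```python
def present_value(rate, annual_amounts):
    """Discount end-of-year amounts: sum(C_t / (1 + rate) ** t) for t = 1..n."""
    return sum(c / (1 + rate) ** t for t, c in enumerate(annual_amounts, start=1))


RATE = 0.10  # ASSUMED discount rate; the source does not state one
option_1_cost = present_value(RATE, [27_000] * 3)  # continuous chemical injection
option_2_cost = present_value(RATE, [20_000] * 3)  # intermittent batch treatment
print(f"{option_1_cost:,.0f} vs {option_2_cost:,.0f}")  # 67,145 vs 49,737
```

At that assumed rate, the present-value gap in treatment costs is roughly $17,000, small next to the $300,000 NPV difference the twin reported.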

Growing Pains

As digital twin is still in its early stages of use in the oil and gas industry, companies are still figuring out how to best harness the multifaceted technology. Bailie noted that upstream projects involve different contractors, vendors, and tools, so “interoperability and providing information from one digital environment to another to create your holistic twin becomes difficult.”

Khan believes change management is the biggest challenge. “We’re doing things differently. We’re doing things in a way which the industry is not necessarily familiar with. The level of collaboration and transparency that the digital twin produces has not been seen in the industry. So we are doing a lump-sum project as if it were a purely reimbursable project, and that is a challenge to both our team and the client’s team.”

Sundararajan has noticed that some personnel continue to trust the judgment of humans, their colleagues, over what the model is telling them, because they had been conditioned to do so and had operated that way for years. On the other hand, some people are inclined to trust the model completely. Somewhere in the middle is optimal, Sundararajan said. He also noted the common challenge of finding a focus for a newly adopted technology. “The pain point early in digital twin is, everything is possible and it’s really hard to pick the three things that you could be doing to move the needle vs. the 500 things we want to do,” he said.

Within BHGE, use of digital twin has become more refined because the cost, time, and engineering effort to create one for an entire machine “doesn’t pay off,” Sundararajan said. “You then start focusing on things that matter, and you make a digital twin of that part in high fidelity, and the rest of that machine doesn’t have to be as high fidelity.” BHGE focuses on a part within a machine that’s more likely to fail than others.

In DNV’s experience, successful client engagement and final deployment of the twin were only possible after giving the client an initial draft minimum viable product (MVP), similar to a first draft of the product that has the required features to demonstrate its usefulness. But Sharma noted that “it is impossible to create drafts for all applications” in the twin to allow a client to see its full potential. Currently, twin models are “limited to modifications of certain MVPs.” He said “an all-encompassing digital twin will bear fruit with more MVPs, client experience, and feedback.”